Stereoscopic rendering

Xinreality (talk | contribs) No edit summary |

Xinreality (talk | contribs) No edit summary |

||

| (7 intermediate revisions by the same user not shown) | |||

| Line 17: | Line 17: | ||

| users = 171 million (2024) | | users = 171 million (2024) | ||

}} | }} | ||

[[File:stereoscopic rendering1.jpg|300px|right]] | |||

[[File:stereoscopic rendering2.jpg|300px|right]] | |||

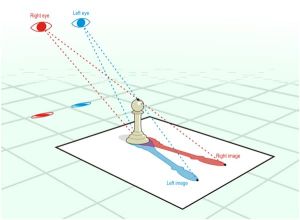

Latest revision as of 00:19, 28 October 2025

Stereoscopic rendering is the foundational computer graphics technique that creates the perception of three-dimensional depth in virtual reality (VR) and augmented reality (AR) systems by generating two slightly different images from distinct viewpoints corresponding to the left and right eyes.[1] This technique exploits binocular disparity, the horizontal displacement between corresponding points in the two images, enabling the visual cortex to reconstruct depth information through stereopsis, the same mechanism the human visual system uses to perceive depth in the real world.[2] By delivering two offset images (one per eye) that the brain combines into a single scene, stereoscopic rendering produces an illusion of depth that mimics natural binocular vision.[3]

Rendering two views can nearly double the computational cost of traditional single-view rendering, but it delivers the immersive depth perception that defines modern VR experiences, which power a $15.9 billion industry serving 171 million users worldwide as of 2024.[4] Unlike monoscopic imagery (showing the same image to both eyes), stereoscopic rendering presents each eye with a slightly different perspective, closely matching how humans view the real world and thereby greatly enhancing the sense of presence and realism in VR/AR.[5]

Fundamental Principles

How It Works

In stereoscopic rendering, a scene is captured or rendered from two viewpoints separated by roughly the distance between the human eyes, the interpupillary distance (IPD). This separation is typically set to the average human IPD of about 64 mm, with adult values ranging from roughly 54 to 74 mm.[6] Each viewpoint (often called the left-eye or right-eye camera) generates a 2D image of the scene. When these images are presented to the corresponding eyes, the visual system interprets the slight horizontal disparity between them as depth information through stereopsis.[7]
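As a minimal sketch of how the two viewpoints can be derived from a single tracked head pose, the Python below offsets each eye by half the IPD along the head's right vector. It assumes a right-handed, Y-up coordinate system, yaw-only head rotation, and the 64 mm default; the function name `eye_positions` is hypothetical. In practice, XR runtimes such as OpenXR report per-eye poses directly (for example through `xrLocateViews`), so applications rarely compute this themselves.

```python
import math


def eye_positions(head_pos, yaw_rad, ipd_m=0.064):
    """Return (left_eye, right_eye) world positions for a head at head_pos.

    Assumes a right-handed, Y-up frame where yaw rotates about +Y and the
    head's local +X axis points to the viewer's right.
    """
    # Rotate the local +X axis by the yaw angle to get the head's right vector.
    right = (math.cos(yaw_rad), 0.0, -math.sin(yaw_rad))
    half = ipd_m / 2.0
    left_eye = tuple(p - r * half for p, r in zip(head_pos, right))
    right_eye = tuple(p + r * half for p, r in zip(head_pos, right))
    return left_eye, right_eye


left, right = eye_positions((0.0, 1.6, 0.0), yaw_rad=0.0)
print(left, right)  # eyes sit 32 mm either side of the head center along X
```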

Modern VR headsets have two displays or a split display, one for each eye, and each display shows an image rendered from the appropriate perspective. Similarly, AR headsets (such as see-through head-mounted displays) project stereoscopic digital overlays so that virtual objects appear integrated into the real world with correct depth. The result is that the user perceives a unified 3D scene with depth, providing critical spatial awareness for interaction.[3]

Binocular Disparity and Parallax

The primary depth cue exploited by stereoscopic rendering is binocular disparity. Because the two virtual cameras are separated horizontally, objects in the 3D scene are projected onto different locations in the left and right images. This difference in projection is called parallax.[8]

- Positive Parallax (Uncrossed Disparity): Occurs when an object appears behind the display screen. The object's image is shifted to the left in the left eye's view and to the right in the right eye's view.

- Negative Parallax (Crossed Disparity): Occurs when an object appears in front of the display screen, "popping out" toward the viewer. The object's image is shifted to the right in the left eye's view and to the left in the right eye's view.

- Zero Parallax: Occurs when an object appears exactly at the depth of the display screen. The object's image is in the same position in both the left and right eye views. This plane is also known as the convergence plane or stereo window.

For parallel (non-converged) cameras, the disparity of an object is inversely proportional to its distance from the cameras. The relationship between disparity and depth is: Z = b×f/d, where Z is depth, b is the baseline (interocular distance), f is the focal length, and d is the disparity.[9]
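The relation can be checked numerically with a short sketch. It assumes a rectified, parallel camera pair with the focal length expressed in pixels; the helper names and the 1000 px focal length are illustrative.

```python
def disparity_from_depth(baseline_m, focal_px, depth_m):
    """Disparity in pixels for a point at depth_m: d = b * f / Z."""
    return baseline_m * focal_px / depth_m


def depth_from_disparity(baseline_m, focal_px, disparity_px):
    """Depth in meters from disparity: Z = b * f / d."""
    if disparity_px <= 0:
        raise ValueError("a finite depth requires positive disparity")
    return baseline_m * focal_px / disparity_px


# 64 mm baseline and an assumed 1000 px focal length.
for z in (0.5, 1.0, 2.0, 4.0):
    print(f"Z = {z} m -> d = {disparity_from_depth(0.064, 1000.0, z):.1f} px")
```

Doubling the distance halves the disparity, which is why stereo depth cues become weaker for distant objects.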

Convergence and Accommodation

In natural viewing, two oculomotor functions are tightly coupled:

- Vergence: The simultaneous movement of both eyes in opposite directions to obtain or maintain single binocular vision. When looking at a nearby object, the eyes rotate inward (converge).

- Accommodation: The process by which the eye's lens changes shape to focus light on the retina.

Stereoscopic displays create a conflict between these two systems, known as the Vergence-Accommodation Conflict (VAC).[10] The viewer's eyes must always accommodate (focus) at the display's fixed focal distance (in a head-mounted display, the distance of the virtual image formed by the lenses), while their vergence system is directed to objects that appear at various depths within the virtual scene. This mismatch is a primary cause of visual fatigue and discomfort in VR and AR.[11]
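The size of the conflict is often expressed in diopters (inverse meters). The sketch below compares the vergence demand of a virtual object with an assumed fixed focal distance; the 2.0 m value is illustrative and is not the specification of any particular headset.

```python
import math


def vergence_angle_deg(distance_m, ipd_m=0.064):
    """Angle between the two lines of sight when fixating a point straight ahead."""
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def vac_mismatch_diopters(vergence_distance_m, focal_distance_m):
    """Difference between vergence and accommodation demand, in diopters (1/m)."""
    return abs(1.0 / vergence_distance_m - 1.0 / focal_distance_m)


ASSUMED_FOCAL_M = 2.0  # hypothetical fixed focal distance of the display optics
for target in (0.5, 1.0, 2.0, 10.0):
    print(f"object at {target} m: vergence {vergence_angle_deg(target):.2f} deg, "
          f"mismatch {vac_mismatch_diopters(target, ASSUMED_FOCAL_M):.2f} D")
```

Objects near the focal distance produce little conflict, while close objects (0.5 m in this example) produce the largest mismatch.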

Historical Evolution

| Year | Event | Relevance |
|---|---|---|
| 1838 | Charles Wheatstone invents stereoscope | Foundational demonstration of binocular depth perception |
| 1849 | David Brewster's lenticular stereoscope | First portable commercial stereoscopic device |
| 1939 | View-Master patented | Popular consumer stereoscopic viewer |
| 1956 | Morton Heilig's Sensorama | Multi-sensory stereoscopic experience machine |
| 1960 | Heilig's Telesphere Mask | First stereoscopic head-mounted display patent |
| 1968 | Ivan Sutherland's Sword of Damocles | First computer-generated stereoscopic VR display |
| 1972 | General Electric flight simulator | 180-degree stereoscopic views for training |
| 1980 | StereoGraphics stereo glasses | Electronic stereoscopic viewing for PCs |
| 1982 | Sega SubRoc-3D | First commercial stereoscopic video game |
| 1991 | Virtuality VR arcades | Real-time stereoscopic multiplayer VR |
| 1995 | Nintendo Virtual Boy | Portable stereoscopic gaming console |
| 2010 | Oculus Rift prototype | Modern stereoscopic HMD revival |
| 2016 | HTC Vive/Oculus Rift CV1 release | Consumer room-scale stereoscopic VR |
| 2023 | Apple Vision Pro | High-resolution stereoscopic mixed reality (70 pixels per degree) |

Early Mechanical Stereoscopy

The conceptual foundation emerged in 1838, when Sir Charles Wheatstone invented the first stereoscope, which used mirrors to present two offset images, and formally described binocular vision in a paper to the Royal Society; the work earned him the Royal Medal in 1840.[12] Sir David Brewster improved the design in 1849 with a lens-based portable stereoscope, which became the first commercially successful stereoscopic device after the Great Exhibition of 1851.[12]

Computer Graphics Era

Computer-generated stereoscopy began with Ivan Sutherland's 1968 head-mounted display at Harvard University, nicknamed the "Sword of Damocles" because of its unwieldy overhead suspension system. This wireframe graphics prototype established the technical template of head tracking, stereoscopic displays, and real-time rendering that would define VR development for decades.[13]

The gaming industry drove early consumer adoption with Sega's SubRoc-3D in 1982, the world's first commercial stereoscopic video game, which used an active shutter 3D system jointly developed with Matsushita.[14]

Modern VR Revolution

The modern VR revolution began with Palmer Luckey's 2012 Oculus Rift Kickstarter campaign, which raised $2.5 million. Facebook's $2 billion acquisition of Oculus in 2014 validated the market potential. The watershed 2016 launches of the Oculus Rift CV1 and HTC Vive, offering 2160×1200 combined resolution at 90Hz with room-scale tracking, established the technical baseline for modern VR.[15]

Mathematical Foundations

Perspective Projection

The core mathematics begins with perspective projection, where a 3D point projects onto a 2D image plane based on its depth. For a single viewpoint with its center of projection at $(0, 0, -d)$ and the image plane at $z = 0$, the projected coordinates are:

- $x_p = \dfrac{x d}{d + z}$

- $y_p = \dfrac{y d}{d + z}$

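Stereoscopic rendering extends this with off-axis projection:[16]

- Left eye at $(-e/2, 0, -d)$: $x_{left} = \dfrac{x d - z e/2}{d + z}$

- Right eye at $(e/2, 0, -d)$: $x_{right} = \dfrac{x d + z e/2}{d + z}$

Where e represents eye separation. Subtracting the two gives the screen parallax $p = x_{right} - x_{left} = \dfrac{z e}{d + z}$, which is zero for points on the image plane ($z = 0$), positive for points behind it, and negative for points in front of it. This depth-dependent horizontal displacement creates the disparity cues that enable stereopsis.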
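A short numeric sketch of these formulas, using the same convention (image plane at z = 0, eyes at z = -d, z increasing away from the viewer); `project_x` and `screen_parallax` are illustrative names, and the 1 m plane distance is an arbitrary example value.

```python
def project_x(x, z, d, eye_x=0.0):
    """Project a point's x coordinate onto the plane z = 0 from an eye at (eye_x, 0, -d)."""
    # Intersect the ray from the eye through (x, z) with z = 0:
    # x_p = eye_x + (x - eye_x) * d / (d + z) = (x*d + eye_x*z) / (d + z)
    return (x * d + eye_x * z) / (d + z)


def screen_parallax(x, z, d, e):
    """Horizontal offset x_right - x_left between the two eyes' projections."""
    x_left = project_x(x, z, d, eye_x=-e / 2.0)
    x_right = project_x(x, z, d, eye_x=+e / 2.0)
    return x_right - x_left


d, e = 1.0, 0.064  # image plane 1 m from the eyes, 64 mm eye separation
for z in (-0.5, 0.0, 1.0, 100.0):
    print(f"z = {z:>6}: parallax = {screen_parallax(0.2, z, d, e):+.4f} m")
```

The parallax is negative in front of the plane, zero on it, and approaches (but never reaches) e as z grows, so very distant objects end up with a parallax close to the eye separation.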

Asymmetric Frustum and Off-Axis Projection

The correct method for stereoscopic rendering is known as off-axis projection. It involves keeping the camera view vectors parallel and instead shearing the viewing frustum for each eye horizontally.[17] This avoids the vertical parallax issues that would be introduced by "toeing-in" the cameras.

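The horizontal shift (s) for the frustum boundaries on the near plane is given by: s = (e/2) × (n/d). By similar triangles, the e/2 offset between each eye and the center of the zero-parallax window at distance d scales by n/d when measured on the near plane.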

Where:

- e is the eye separation (interaxial distance)

- n is the distance to the near clipping plane

- d is the convergence distance (the distance to the zero parallax plane)

This shift value modifies the left and right parameters of the frustum definition:

- For the left eye: left = left_original + s, right = right_original + s

- For the right eye: left = left_original - s, right = right_original - s
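These rules can be sketched as a function that returns the near-plane bounds for one eye. It assumes a symmetric vertical field of view, and the parameter names are illustrative; the camera itself must also be translated by ±e/2 along its right axis, which this function leaves to the view matrix.

```python
import math


def stereo_frustum_bounds(fov_y_deg, aspect, near, convergence, eye_sep, eye):
    """Return (left, right, bottom, top) on the near plane for one eye.

    eye is -1 for the left eye and +1 for the right eye. The camera position
    must separately be offset by eye * eye_sep / 2 along its right axis.
    """
    top = near * math.tan(math.radians(fov_y_deg) / 2.0)
    bottom = -top
    half_width = top * aspect
    # Near-plane shift: s = (e/2) * (n/d), with d the convergence distance.
    s = (eye_sep / 2.0) * (near / convergence)
    # Left eye (eye = -1) shifts by +s; right eye (eye = +1) shifts by -s.
    shift = -eye * s
    return (-half_width + shift, half_width + shift, bottom, top)


left_eye = stereo_frustum_bounds(90.0, 1.0, 0.1, 2.0, 0.064, eye=-1)
right_eye = stereo_frustum_bounds(90.0, 1.0, 0.1, 2.0, 0.064, eye=+1)
print(left_eye, right_eye)
```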

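Legacy (fixed-function) OpenGL provides `glFrustum(left, right, bottom, top, near, far)`, which builds an asymmetric perspective frustum from these explicit bounds.[18] The function was deprecated in OpenGL 3.0 and is absent from core profiles, so modern renderers construct the equivalent projection matrix themselves.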
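For pipelines that do not use the fixed-function `glFrustum` call, the same asymmetric matrix can be built directly. The sketch below reproduces the matrix documented for `glFrustum` in conventional mathematical (row-major) layout; uploading it to a column-major API needs a transpose, for example via the `transpose` argument of `glUniformMatrix4fv`. The function names and the example bounds are illustrative.

```python
def frustum_matrix(l, r, b, t, n, f):
    """Perspective matrix equivalent to glFrustum(l, r, b, t, n, f), row-major."""
    return [
        [2.0 * n / (r - l), 0.0, (r + l) / (r - l), 0.0],
        [0.0, 2.0 * n / (t - b), (t + b) / (t - b), 0.0],
        [0.0, 0.0, -(f + n) / (f - n), -2.0 * f * n / (f - n)],
        [0.0, 0.0, -1.0, 0.0],
    ]


def to_ndc_x(m, x, z):
    """Normalized device x for an eye-space point (x, 0, z); z < 0 is in front."""
    clip_x = m[0][0] * x + m[0][2] * z
    clip_w = m[3][2] * z
    return clip_x / clip_w


# Left-eye bounds shifted by +0.0016 (90-degree FOV, n = 0.1, d = 2, e = 64 mm).
m = frustum_matrix(-0.0984, 0.1016, -0.1, 0.1, 0.1, 100.0)
print(m[0][2], to_ndc_x(m, -0.0984, -0.1))
```

The nonzero (r + l)/(r - l) term in the first row is what shears the frustum; for a symmetric frustum it is zero.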

Rendering Techniques

Multi-Pass Rendering

Traditional multi-pass rendering takes the straightforward approach of rendering the complete scene twice sequentially, once per eye. Each eye uses separate camera parameters, performing independent draw calls, culling operations, and shader executions. While conceptually simple and compatible with all rendering pipelines, this approach imposes nearly 2× computational cost, doubling CPU overhead from draw call submission, duplicating geometry processing on the GPU, and requiring full iteration through all rendering stages twice.[19]

Single-Pass Stereo Rendering

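Single-pass stereo rendering optimizes by traversing the scene graph once while rendering to both eye buffers.[20] The single-pass instanced approach issues each draw call with twice the usual instance count; the vertex shader uses the instance index to select the matrix for the current eye and to route each primitive to that eye's slice of a texture array (or to that eye's viewport). Example vertex shader (GLSL), assuming an OpenGL 4.5 context with the `GL_ARB_shader_viewport_layer_array` extension for writing `gl_Layer`:

```glsl
#version 450
// Allows a vertex shader to write gl_Layer.
#extension GL_ARB_shader_viewport_layer_array : require
// One combined matrix per eye: index 0 = left, 1 = right.
layout(std140, binding = 0) uniform EyeUniforms {
    mat4 mMatrix[2];
};
layout(location = 0) in vec4 vertex;
void main() {
    int eye = gl_InstanceID % 2; // draws use 2x instance count: even = left, odd = right
    gl_Position = mMatrix[eye] * vertex;
    gl_Layer = eye;              // this eye's layer of a 2-layer texture array
}
```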

This technique halves draw call count compared to multi-pass, reducing CPU bottlenecks in complex scenes.[21]
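A toy model makes the draw-call arithmetic concrete. `CommandLog` is a hypothetical stand-in for a command buffer that only counts submissions; it shows that instancing halves the CPU-side draw calls while the GPU still processes the same number of per-eye instances, which is why this approach mainly relieves CPU overhead.

```python
from dataclasses import dataclass


@dataclass
class CommandLog:
    """Hypothetical command buffer that only counts submitted work."""
    draw_calls: int = 0
    instances: int = 0

    def draw(self, instance_count=1):
        self.draw_calls += 1
        self.instances += instance_count


def render_multi_pass(objects, log):
    for _eye in ("left", "right"):      # whole scene is submitted once per eye
        for _obj in objects:
            log.draw(instance_count=1)


def render_single_pass_instanced(objects, log):
    for _obj in objects:                # scene is submitted once
        log.draw(instance_count=2)      # instance 0 -> left eye, 1 -> right eye


scene = range(500)
multi, instanced = CommandLog(), CommandLog()
render_multi_pass(scene, multi)
render_single_pass_instanced(scene, instanced)
print(multi, instanced)
```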

Hardware-Accelerated Techniques

NVIDIA's Pascal architecture introduced Simultaneous Multi-Projection (SMP), which enables Single Pass Stereo: geometry is processed once and projected to both eyes simultaneously using hardware acceleration.[22] The Turing architecture extended this with Multi-View Rendering, supporting up to four projection views in a single pass.

Lens Matched Shading divides each eye's view into four quadrants whose adjusted projections approximate the barrel-distorted shape of the image after lens correction. This reduces the rendered pixels per eye from 2.1 megapixels to 1.4 megapixels, freeing roughly 50% more pixel shading throughput (2.1 / 1.4 = 1.5).[23]

Advanced Optimization Techniques

Stereo Shading Reprojection

Developed by Oculus in 2017, this technique reuses the image from one eye to help render the other. The scene is rendered normally for one eye; the depth buffer is then used to reproject that image to the second eye's viewpoint, and regions that were occluded from the first eye are filled in with a secondary rendering pass. Oculus reported that in shader-heavy scenes this saves approximately 20% of GPU work in their tests.[24]
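The core idea can be illustrated on a single rectified scanline. This is a simplified CPU sketch, not Oculus's implementation: it assumes parallel eye cameras (so a left-eye pixel at depth Z moves left by b·f/Z pixels in the right eye), nearest-pixel rounding, and a depth test when two pixels land on the same target. Pixels that receive nothing are returned as holes, which correspond to the regions a secondary pass would have to shade.

```python
def reproject_scanline(left_color, left_depth, baseline, focal_px):
    """Warp one rectified scanline from the left eye into the right eye.

    Returns (right_color, holes); holes lists columns no left pixel mapped to.
    """
    width = len(left_color)
    right_color = [None] * width
    right_depth = [float("inf")] * width
    for x in range(width):
        z = left_depth[x]
        disparity = baseline * focal_px / z
        xr = round(x - disparity)  # the same point appears further left in the right eye
        if 0 <= xr < width and z < right_depth[xr]:
            # Depth test: keep the nearest surface when two pixels collide.
            right_depth[xr] = z
            right_color[xr] = left_color[x]
    holes = [x for x, c in enumerate(right_color) if c is None]
    return right_color, holes


right, holes = reproject_scanline(list("abcdefgh"), [1.0] * 8, baseline=1.0, focal_px=2.0)
print(right, holes)  # holes == [6, 7]: the right edge was never seen by the left eye
```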

Foveated Rendering

Foveated rendering renders the small area corresponding to the user's fovea at full resolution while progressively reducing peripheral quality. Fixed foveated rendering (no eye tracking required) achieves 34-43% GPU savings on Meta Quest 2, while eye-tracked dynamic foveated rendering on PlayStation VR2 demonstrates approximately 72% savings.[25]
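The sketch below shows the basic decision a foveated renderer makes per screen tile: shade at full rate near the gaze point and more coarsely with increasing eccentricity. The ring radii, the rates, and the constant pixels-per-degree conversion are illustrative assumptions (real optics make pixels per degree vary across the field of view). With fixed foveated rendering the gaze point is simply the lens center, while eye-tracked rendering updates it every frame.

```python
import math


def shading_rate(tile_center, gaze, px_per_degree, inner_deg=10.0, outer_deg=25.0):
    """Return 1 (full rate), 2 (one shade per 2x2 pixels) or 4 (one per 4x4)."""
    dx = tile_center[0] - gaze[0]
    dy = tile_center[1] - gaze[1]
    # Approximate angular distance from the gaze point.
    eccentricity_deg = math.hypot(dx, dy) / px_per_degree
    if eccentricity_deg <= inner_deg:
        return 1
    if eccentricity_deg <= outer_deg:
        return 2
    return 4


def shaded_fraction(rate):
    """Fraction of pixel-shader invocations relative to full-rate shading."""
    return 1.0 / (rate * rate)


gaze = (960, 960)  # e.g. the lens center for fixed foveation
for tile in ((960, 960), (1260, 960), (1760, 960)):
    r = shading_rate(tile, gaze, px_per_degree=20.0)
    print(tile, r, shaded_fraction(r))
```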

Asynchronous Reprojection

Technologies such as Oculus's Asynchronous Timewarp (ATW) and Asynchronous Spacewarp (ASW) do not directly reduce rendering cost; instead, they keep output smooth by reprojecting the most recent image when a new frame is late. This allows applications to render at half the display rate (45 FPS rendered, doubled to 90 FPS displayed) without the user perceiving judder.[26]
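The half-rate timing and the size of a rotational correction can both be sketched in a few lines. For a rotation-only warp in the style of Asynchronous Timewarp, a pixel near the image centre moves by roughly f·tan(Δθ) pixels for a yaw change Δθ, where f is the focal length in pixels. The Python sketch below assumes a hypothetical 1000-pixel focal length and 1 degree of head rotation; real compositors warp every pixel through a full 3D rotation, and ASW additionally extrapolates motion, so this is only an approximation.

```python
import math

def frame_budget_ms(rate_hz):
    """Time available per frame, in milliseconds, at a given rate."""
    return 1000.0 / rate_hz

def timewarp_shift_px(focal_px, yaw_change_deg):
    """Approximate image shift near the image centre for a rotation-only warp.

    Content moves opposite to the head rotation by about f * tan(delta).
    """
    return focal_px * math.tan(math.radians(yaw_change_deg))

render_hz, display_hz = 45, 90
print(f"{frame_budget_ms(render_hz):.1f} ms per rendered frame, "
      f"{frame_budget_ms(display_hz):.1f} ms per displayed frame")
# 22.2 ms per rendered frame, 11.1 ms per displayed frame
print(display_hz // render_hz, "displayed frames per rendered frame")   # 2
# Hypothetical: the head turns 1 degree between render and scan-out,
# with a focal length of 1000 pixels.
print(f"{timewarp_shift_px(1000.0, 1.0):.1f} px")   # 17.5 px
```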

| Technique | Core Principle | CPU Overhead | GPU Overhead | Primary Advantage | Primary Disadvantage |
|---|---|---|---|---|---|
| Multi-Pass | Render entire scene twice sequentially | Very High | High | Simple implementation; maximum compatibility | Extremely inefficient; doubles CPU workload |
| Single-Pass (Double-Wide) | Render to double-width render target | Medium | High | Reduces render state changes | Still duplicates some work; largely deprecated |
| Single-Pass Instanced | Use GPU instancing with an instance count of 2 | Very Low | High | Drastically reduces CPU overhead | Requires GPU/API support (DirectX 11+) |
| Multiview | Single draw renders to multiple texture array slices | Very Low | High | Most efficient for mobile/standalone VR | Requires specific GPU/API support |
| Stereo Shading Reprojection | Reuse one eye's pixels for the other via depth | Low | Medium | ~20% GPU savings in shader-heavy scenes | Can introduce artifacts; complex implementation |

Hardware Requirements

| Tier | GPU Examples | Use Case | Per-Eye Resolution @ Refresh Rate | Typical Settings |
|---|---|---|---|---|
| Minimum | GTX 1060 6GB, RX 480 | Baseline VR | 1080×1200 @ 90 Hz | Low |
| Recommended | RTX 2060, GTX 1070 | Comfortable VR | 1440×1600 @ 90 Hz | Medium |
| Premium | RTX 3070, RX 6800 | High-fidelity VR | 2160×2160 @ 90 Hz | High |
| Enthusiast | RTX 4090, RX 7900 XTX | Maximum quality | 4K+ @ 120 Hz+ | Ultra with ray tracing |

Modern VR rendering demands GPU capabilities significantly beyond traditional gaming.[27] To prevent simulation sickness, VR applications must maintain consistently high frame rates, typically 90 frames per second or higher, and motion-to-photon latency under 20 milliseconds.[28]
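The resolutions in the table above translate into a pixel-shading rate that helps explain why VR workloads exceed flat-screen gaming. The Python sketch below computes that rate from per-eye resolution and refresh rate, with a single 1080p monitor at 60 Hz as a hypothetical flat-screen baseline. It assumes one shading sample per display pixel and ignores any supersampling a VR runtime may apply to compensate for lens distortion, so actual loads are higher.

```python
def pixel_rate(width, height, refresh_hz, views=2):
    """Pixels shaded per second, assuming one shading sample per pixel."""
    return width * height * views * refresh_hz

tiers = {
    "Minimum": (1080, 1200, 90),
    "Recommended": (1440, 1600, 90),
    "Premium": (2160, 2160, 90),
}
baseline = pixel_rate(1920, 1080, 60, views=1)   # single 1080p monitor at 60 Hz
for name, (w, h, hz) in tiers.items():
    rate = pixel_rate(w, h, hz)
    print(f"{name}: {rate / 1e6:.0f} Mpixel/s ({rate / baseline:.1f}x a 1080p60 monitor)")
```

Even the minimum tier works out to about 233 million pixels per second for two eyes at 90 Hz, against roughly 124 million for the monitor baseline.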

Display Technologies

Various technologies have been developed to present the stereoscopic image pair to viewers:

- Head-Mounted Displays (HMDs): Modern VR and AR headsets achieve perfect image separation using either two separate micro-displays (one for each eye) or a single display partitioned by optics. This direct-view approach completely isolates the left and right eye views, eliminating crosstalk.[3]

- Color Filtering (Anaglyph): Uses glasses with filters of different colors, typically red and cyan. Very inexpensive but suffers from severe color distortion and ghosting.[8]

- Polarization: Uses glasses with differently polarized lenses. Linear polarization orients the two filters at 90 degrees to each other; circular polarization uses opposite handedness (clockwise and counter-clockwise). Commonly used in 3D cinemas.[29]

- Time Multiplexing (Active Shutter): The display alternates between left and right images at high speed (120+ Hz), so each eye receives half the refresh rate. The viewer wears LCD shutter glasses synchronized to the display. Delivers full resolution to each eye.[8]

- Autostereoscopy (Glasses-Free 3D): Uses optical elements like parallax barriers or lenticular lenses to direct different pixels to each eye. Limited by narrow optimal viewing angle.[29]
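Of these methods, anaglyph encoding is simple enough to show directly: in the basic color-anaglyph mapping, the red channel comes from the left-eye image and the green and blue channels from the right-eye image, so red/cyan glasses pass each view to the matching eye. The Python sketch below applies that mapping per pixel to lists of RGB tuples; the sample pixel values are hypothetical. More elaborate mappings exist that trade some color fidelity for less ghosting.

```python
def color_anaglyph(left_pixels, right_pixels):
    """Combine left/right RGB images (lists of (r, g, b) tuples) for red/cyan glasses.

    Red comes from the left eye (red filter); green and blue come from the
    right eye (cyan filter).
    """
    if len(left_pixels) != len(right_pixels):
        raise ValueError("images must have the same number of pixels")
    return [(l[0], r[1], r[2]) for l, r in zip(left_pixels, right_pixels)]

left = [(200, 10, 10), (50, 60, 70)]
right = [(0, 180, 90), (55, 65, 75)]
print(color_anaglyph(left, right))   # [(200, 180, 90), (50, 65, 75)]
```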

Applications

Gaming

Gaming dominates current VR usage, accounting for 48.3% of market revenue, and 70% of VR users play games regularly.[30] The depth cues from stereoscopic rendering are essential for gameplay that requires accurate spatial judgment, from timing saber strikes against incoming blocks in Beat Saber to navigating complex environments in Half-Life: Alyx.

Healthcare

Healthcare is the fastest-growing sector, with a 28.2% compound annual growth rate. Medical applications include:

- Surgical simulation and pre-surgical planning

- FDA-cleared VR therapies for pain management

- Mental health treatment for phobias and PTSD

- Medical student training with virtual cadavers

In stereoscopic VR environments, medical students can practice procedures with the depth perception needed to develop hand-eye coordination, without requiring physical cadavers.[4]

Enterprise Training

Corporate training has delivered strong returns on investment, with more than 67,000 enterprises adopting VR-based training by 2024. Notable examples:

- Boeing reduced training hours by 75% using VR simulation

- Delta Air Lines increased technician proficiency by 5,000% through immersive maintenance training

- VR training breaks even at 375 learners; beyond 3,000 learners, it becomes 52% more cost-effective than traditional methods

Industrial Visualization

Industrial and manufacturing applications leverage stereoscopic rendering for product visualization, enabling engineers to examine CAD models at natural scale before physical prototyping. The AR/VR IoT manufacturing market is projected to reach $40-50 billion by 2025, with 75% of implementing companies reporting operational efficiency improvements of 10%.

Augmented Reality

In AR, see-through devices use stereoscopic rendering to overlay 3D graphics onto the user's view of the real world. Modern AR smartglasses such as the Microsoft HoloLens and Magic Leap 2 use dual waveguide displays that project slightly different virtual images to each eye, so that virtual objects appear at specific depths in the real environment.[29]

Industry Standards

OpenXR

The OpenXR standard, managed by the Khronos Group and finalized as version 1.0 in 2019, represents the VR/AR industry's convergence on a unified API.[31] OpenXR 1.1 includes stereo rendering with foveated rendering as a core capability, along with:

- Varjo's quad-view configuration for bionic displays

- Local floor coordinate spaces for mixed reality

- 13 new interaction profiles spanning controllers and styluses
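In OpenXR, the runtime reports each eye's field of view as four angles in an `XrFovf` structure (`angleLeft`, `angleRight`, `angleUp`, `angleDown`, in radians), and the two eyes' frusta are generally asymmetric. The Python sketch below turns such angles into an OpenGL-convention off-axis projection matrix; it is equivalent to `glFrustum` with near-plane extents of `near * tan(angle)`. The function names and the sample angles are hypothetical.

```python
import math

def projection_from_fov(angle_left, angle_right, angle_up, angle_down, near, far):
    """Off-axis OpenGL-style projection (clip z in [-w, w]) from four FOV angles.

    Angles are in radians from the view direction, as in OpenXR's XrFovf
    (left and down are usually negative). Returned row-major for use with
    column vectors: clip = P * (x, y, z, 1).
    """
    tan_l, tan_r = math.tan(angle_left), math.tan(angle_right)
    tan_u, tan_d = math.tan(angle_up), math.tan(angle_down)
    width, height = tan_r - tan_l, tan_u - tan_d
    return [
        [2.0 / width, 0.0, (tan_r + tan_l) / width, 0.0],
        [0.0, 2.0 / height, (tan_u + tan_d) / height, 0.0],
        [0.0, 0.0, -(far + near) / (far - near), -2.0 * far * near / (far - near)],
        [0.0, 0.0, -1.0, 0.0],
    ]

def to_ndc(p, point):
    """Project a view-space point (camera looking down -z) to normalized device coordinates."""
    v = (point[0], point[1], point[2], 1.0)
    clip = [sum(p[r][c] * v[c] for c in range(4)) for r in range(4)]
    return tuple(clip[i] / clip[3] for i in range(3))

# Hypothetical asymmetric left-eye FOV (wider on the left than on the right).
fov = dict(angle_left=math.atan(-1.2), angle_right=math.atan(0.8),
           angle_up=math.atan(1.0), angle_down=math.atan(-1.0))
P = projection_from_fov(**fov, near=0.1, far=100.0)
# Points on the frustum's left and right edges, 1 m away, land on the NDC edges.
print(to_ndc(P, (-1.2, 0.0, -1.0)))   # x close to -1.0
print(to_ndc(P, (0.8, 0.0, -1.0)))    # x close to +1.0
```

The non-zero third-column term `(tan_r + tan_l) / width` is what makes the frustum off-axis; for a symmetric field of view it vanishes and the matrix reduces to the familiar symmetric perspective projection.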

Engine Support

Unity's stereo rendering paths include:[32]

- Multi-Pass: Separate pass per eye, most compatible

- Single-Pass: Double-wide render target, modest savings

- Single-Pass Instanced: GPU instancing with texture arrays, optimal on supported platforms

Unreal Engine provides instanced stereo rendering through a project setting (Instanced Stereo); once enabled, it reduces draw calls by 50%.

Challenges and Solutions

Vergence-Accommodation Conflict

The vergence-accommodation conflict represents stereoscopic rendering's most fundamental limitation.[10] In natural vision, vergence (rotation of the eyes toward a common point) and accommodation (focusing of the eye's lens) work in synchrony. Stereoscopic displays decouple them: vergence follows the virtual object's distance as implied by binocular disparity, but accommodation remains fixed at the focal distance of the headset's optics (typically 2-3 meters for HMDs), regardless of where the object appears.

Current software mitigation strategies:

- Keep virtual content more than 0.5 meters from the user

- Avoid rapid depth changes

- Limit session duration for sensitive users
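The size of the conflict can be estimated from geometry. Eyes separated by an interpupillary distance p and fixating a point at distance d converge by an angle of 2·atan(p / 2d), while focus demand is measured in diopters (1 / distance in meters). The Python sketch below compares a virtual object at the 0.5 m limit above with a hypothetical fixed focal distance of 2 m, assuming an IPD of 63 mm; the values are illustrative, not measurements of a particular headset.

```python
import math

def vergence_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when fixating a point straight ahead."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * distance_m)))

def accommodation_mismatch_diopters(virtual_m, focal_m):
    """Gap between the focus the vergence cue implies and the optics' fixed focus."""
    return abs(1.0 / virtual_m - 1.0 / focal_m)

print(f"vergence at 0.5 m: {vergence_deg(0.5):.2f} deg")   # 7.21 deg
print(f"vergence at 2.0 m: {vergence_deg(2.0):.2f} deg")   # 1.80 deg
print(f"mismatch: {accommodation_mismatch_diopters(0.5, 2.0):.2f} D")  # 1.50 D
```

Because the mismatch grows as 1/d, it rises steeply for content closer than about half a meter, which is consistent with the distance guideline above.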

Emerging solutions:

- Light field displays: Reproduce the complete light field, allowing natural focus at different depths

- Varifocal displays: Physically or optically adjust focal distance based on eye tracking

- Holographic displays: Use wavefront reconstruction to create true 3D images

Latency and Motion-to-Photon Delay

Unmitigated, total system latency typically ranges from 40 to 90 ms, far exceeding the target of under 20 ms required for presence. Asynchronous Timewarp (ATW) reduces the perceived latency of head rotation by warping the most recently rendered frame to the latest head pose just before display refresh.[26]

Common Artifacts

- Crosstalk (Ghosting): Incomplete separation of left/right images

- Cardboarding: Objects appear flat due to insufficient interaxial distance

- Window Violations: Objects with negative parallax clipped by screen edges

- Vertical Parallax: Typically caused by converging (toed-in) cameras instead of parallel cameras with off-axis projections; causes immediate eye strain
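The vertical-parallax artifact can be reproduced numerically. Toeing the cameras in rotates each eye's image plane in opposite directions, so a point off to the side lands at slightly different heights in the two images; with parallel cameras (where convergence is handled by off-axis projection instead) the heights always agree. The Python sketch below projects a single point through both rigs with a simple pinhole model; the baseline, convergence distance and point are hypothetical values.

```python
import math

def project_pinhole(point, cam_x, yaw, focal=1.0):
    """Project a world point through a pinhole camera at (cam_x, 0, 0).

    The camera looks down -z and is rotated about the vertical axis by yaw
    radians (positive turns it toward +x). Returns image coordinates (u, v).
    """
    x, y, z = point[0] - cam_x, point[1], point[2]
    c, s = math.cos(yaw), math.sin(yaw)
    xc = c * x + s * z           # component along the camera's right axis
    zc = -s * x + c * z          # camera-space z (negative in front of the camera)
    return focal * xc / -zc, focal * y / -zc

def vertical_parallax(point, baseline, convergence, toe_in):
    """Difference in image height (v) of one point between the left and right eyes."""
    yaw = math.atan((baseline / 2.0) / convergence) if toe_in else 0.0
    _, v_left = project_pinhole(point, -baseline / 2.0, +yaw)   # left eye turns right
    _, v_right = project_pinhole(point, +baseline / 2.0, -yaw)  # right eye turns left
    return v_left - v_right

# Hypothetical rig: 64 mm baseline converging at 2 m; a point up and to the right.
point = (0.5, 0.3, -2.0)
print(f"toe-in:   {vertical_parallax(point, 0.064, 2.0, toe_in=True):+.5f}")
print(f"parallel: {vertical_parallax(point, 0.064, 2.0, toe_in=False):+.5f}")  # +0.00000
```

The toe-in value is small but non-zero, and it grows toward the image corners; the eyes cannot fuse such vertical offsets comfortably, which is why parallel cameras with asymmetric frusta are the usual recommendation.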

Emerging Technologies

Light Field Displays

Light field displays represent the most promising solution to the vergence-accommodation conflict. CREAL, founded in 2017, has demonstrated functional prototypes progressing from table-top systems (2019) to AR glasses form factors (2024+).[33]

Neural Rendering

Neural Radiance Fields (NeRF) represent 3D scenes as continuous volumetric functions rather than discrete polygons. FoV-NeRF extends this for VR by incorporating human visual and stereo acuity, achieving a 99% latency reduction compared to standard NeRF.[34]
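A NeRF produces a pixel by sampling density and color along a camera ray and alpha-compositing the samples; for stereo output this is done once per eye, with rays cast from each eye's position. The Python sketch below implements the standard NeRF compositing rule for one ray, C = Σ Tᵢ(1 − exp(−σᵢδᵢ))cᵢ with Tᵢ = exp(−Σⱼ₍ⱼ<ᵢ₎ σⱼδⱼ). The densities and colors are hypothetical samples standing in for a trained network's outputs.

```python
import math

def composite_ray(sigmas, colors, deltas):
    """NeRF-style volume rendering along one ray.

    sigmas: volume density per sample; colors: (r, g, b) per sample;
    deltas: spacing between consecutive samples. Returns (rgb, opacity).
    """
    transmittance = 1.0            # fraction of light not yet absorbed
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # chance the ray stops in this segment
        weight = transmittance * alpha
        for k in range(3):
            rgb[k] += weight * color[k]
        transmittance *= 1.0 - alpha             # light surviving past this segment
    return tuple(rgb), 1.0 - transmittance

# Hypothetical samples: two empty segments, then a dense red surface.
rgb, opacity = composite_ray(
    sigmas=[0.0, 0.0, 50.0, 50.0],
    colors=[(0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (1.0, 0.0, 0.0)],
    deltas=[0.1, 0.1, 0.1, 0.1],
)
print(rgb, opacity)   # nearly pure red, nearly opaque
```

Because every pixel of every eye needs many such samples, per-ray cost dominates NeRF latency, which is the cost the acuity-aware sampling mentioned above aims to cut.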

Holographic Displays

Recent breakthroughs include Meta/Stanford research demonstrating full-color 3D holographic AR with metasurface waveguides in eyeglass-scale form factors, and NVIDIA's "Holographic Glasses" with 2.5mm optical stack thickness.

Future Trajectory

Stereoscopic rendering remains indispensable for VR and AR experiences requiring depth perception. The technique's evolution from mechanical stereoscopes to real-time GPU-accelerated rendering reflects advancing hardware capabilities and algorithmic innovations. Modern implementations reduce computational overhead by 30-70% compared to naive approaches, making immersive VR accessible on $300 standalone headsets rather than requiring $2000 gaming PCs.

The vergence-accommodation conflict is a limitation of current display technology rather than of stereoscopic rendering itself, and it is being actively addressed through light field displays, holographic waveguides, and varifocal systems. The industry's convergence on OpenXR as a unified standard, combined with mature optimization techniques integrated into Unity and Unreal Engine, enables developers to target diverse platforms efficiently. The 171 million VR users in 2024 represent early adoption, and enterprise applications demonstrate that stereoscopic rendering's value extends far beyond entertainment into training, healthcare, and industrial visualization.

See Also

- Virtual reality

- Augmented reality

- Stereoscopy

- Binocular vision

- Depth perception

- Foveated rendering

- Light field display

- Neural rendering

- OpenXR

- Vergence-accommodation conflict

- Head-mounted display

- Computer graphics pipeline

- Projection matrix

- GPU

- Parallax

- Stereoscopic 3D

- Interpupillary distance

- Asynchronous Spacewarp

- Asynchronous Timewarp

- VR SLI

References

1. ARM Software. "Introduction to Stereo Rendering - VR SDK for Android." ARM Developer Documentation, 2021. https://arm-software.github.io/vr-sdk-for-android/IntroductionToStereoRendering.html

2. Number Analytics. "Stereoscopy in VR: A Comprehensive Guide." 2024. https://www.numberanalytics.com/blog/ultimate-guide-stereoscopy-vr-ar-development

3. Draw & Code. "What Is Stereoscopic VR Technology." January 23, 2024. https://drawandcode.com/learning-zone/what-is-stereoscopic-vr-technology/

4. Mordor Intelligence. "Virtual Reality (VR) Market Size, Report, Share & Growth Trends 2025-2030." 2024. https://www.mordorintelligence.com/industry-reports/virtual-reality-market

5. Boris FX. "Monoscopic vs Stereoscopic 360 VR: Key Differences." 2024. https://borisfx.com/blog/monoscopic-vs-stereoscopic-360-vr-key-differences/

6. Afifi, Mahmoud. "Basics of stereoscopic imaging in virtual and augmented reality systems." Medium, 2020. https://medium.com/@mahmoudnafifi/basics-of-stereoscopic-imaging-6f69a7916cfd

7. Wikipedia. "Depth perception." https://en.wikipedia.org/wiki/Depth_perception

8. Newcastle University. "Basic Principles of Stereoscopic 3D." 2024. https://www.ncl.ac.uk/

9. Scratchapixel. "The Perspective and Orthographic Projection Matrix." 2024. https://www.scratchapixel.com/lessons/3d-basic-rendering/perspective-and-orthographic-projection-matrix/building-basic-perspective-projection-matrix.html

10. Wikipedia. "Vergence-accommodation conflict." https://en.wikipedia.org/wiki/Vergence-accommodation_conflict

11. Packet39. "The Accommodation-Vergence conflict and how it affects your kids (and yourself)." 2017. https://packet39.com/blog/2017/12/25/the-accommodation-vergence-conflict-and-how-it-affects-your-kids-and-yourself/

12. Google Arts & Culture. "Stereoscopy: the birth of 3D technology." 2024. https://artsandculture.google.com/story/stereoscopy-the-birth-of-3d-technology-the-royal-society/pwWRTNS-hqDN5g

13. Nextgeninvent. "Virtual Reality's Evolution From Science Fiction to Mainstream Technology." 2024. https://nextgeninvent.com/blogs/the-evolution-of-virtual-reality/

14. ACM SIGGRAPH. "Remember Stereo 3D on the PC? Have You Ever Wondered What Happened to It?" 2024. https://blog.siggraph.org/2024/10/stereo-3d-pc-history-decline.html/

15. Cavendishprofessionals. "The Evolution of VR and AR in Gaming: A Historical Perspective." 2024. https://www.cavendishprofessionals.com/the-evolution-of-vr-and-ar-in-gaming-a-historical-perspective/

16. Song Ho Ahn. "OpenGL Projection Matrix." 2024. https://www.songho.ca/opengl/gl_projectionmatrix.html

17. Bourke, P. "Stereoscopic Rendering." 2024. http://paulbourke.net/stereographics/stereorender/

18. University of Utah. "Projection and View Frustums." Computer Graphics Course Material, 2024. https://my.eng.utah.edu/~cs6360/Lectures/frustum.pdf

19. Unity. "How to maximize AR and VR performance with advanced stereo rendering." Unity Blog, 2024. https://blog.unity.com/technology/how-to-maximize-ar-and-vr-performance-with-advanced-stereo-rendering

20. NVIDIA Developer. "Turing Multi-View Rendering in VRWorks." NVIDIA Technical Blog, 2018. https://developer.nvidia.com/blog/turing-multi-view-rendering-vrworks/

21. Quilez, Inigo. "Stereo rendering." 2024. https://iquilezles.org/articles/stereo/

22. AnandTech. "Simultaneous Multi-Projection: Reusing Geometry on the Cheap." 2016. https://www.anandtech.com/show/10325/the-nvidia-geforce-gtx-1080-and-1070-founders-edition-review/11

23. Road to VR. "NVIDIA Explains Pascal's 'Lens Matched Shading' for VR." 2016. https://www.roadtovr.com/nvidia-explains-pascal-simultaneous-multi-projection-lens-matched-shading-for-vr/

24. Meta for Developers. "Introducing Stereo Shading Reprojection." 2024. https://developers.meta.com/horizon/blog/introducing-stereo-shading-reprojection-for-unity/

25. VRX. "What is Foveated Rendering? - VR Expert Blog." 2024. https://vrx.vr-expert.com/what-is-foveated-rendering-and-what-does-it-mean-for-vr/

26. Google. "Asynchronous Reprojection." Google VR Developers, 2024. https://developers.google.com/vr/discover/async-reprojection

27. ComputerCity. "VR PC Hardware Requirements: Minimum and Recommended Specs." 2024. https://computercity.com/hardware/vr/vr-pc-hardware-requirements

28. DAQRI. "Motion to Photon Latency in Mobile AR and VR." Medium, 2024. https://medium.com/@DAQRI/motion-to-photon-latency-in-mobile-ar-and-vr-99f82c480926

29. Palušová, P. "Stereoscopy in Extended Reality: Utilizing Natural Binocular Disparity." 2023. https://www.petrapalusova.com/stereoscopy

30. Marketgrowthreports. "Virtual and Augmented Reality Industry Market Size, Trends 2033." 2024. https://www.marketgrowthreports.com/market-reports/virtual-and-augmented-reality-market-100490

31. Khronos Group. "OpenXR - High-performance access to AR and VR." 2024. https://www.khronos.org/openxr/

32. Unity. "Unity - Manual: Stereo rendering." Unity Documentation, 2024. https://docs.unity3d.com/Manual/SinglePassStereoRendering.html

33. Road to VR. "Hands-on: CREAL's Light-field Display Brings a New Layer of Immersion to AR." 2024. https://www.roadtovr.com/creal-light-field-display-new-immersion-ar/

34. arXiv. "Neural Rendering and Its Hardware Acceleration: A Review." 2024. https://arxiv.org/html/2402.00028v1