Hand tracking: Difference between revisions


'''Hand tracking''' is a [[computer vision]]-based technology used in [[virtual reality]] (VR), [[augmented reality]] (AR), and [[mixed reality]] (MR) systems to detect, track, and interpret the position, orientation, and movements of a user's hands and fingers in real time. Unlike traditional input methods such as [[motion controller|controllers]] or gloves, hand tracking enables controller-free, natural interactions by leveraging cameras, sensors, and artificial intelligence (AI) algorithms to map hand poses into virtual environments.<ref name="Frontiers2021" /> This technology enhances immersion, presence, and usability in [[extended reality]] (XR) applications by allowing users to perform gestures like pointing, grabbing, pinching, and swiping directly with their bare hands.

Hand tracking systems typically operate using optical methods, such as [[infrared]] (IR) illumination and monochrome cameras, or visible-light cameras integrated into [[head-mounted display]]s (HMDs). Modern implementations achieve low-latency tracking (for example, 10–70 ms) with high accuracy, supporting up to 27 degrees of freedom (DoF) per hand to capture complex articulations.<ref name="UltraleapDocs" /> The human hand has approximately 27 degrees of freedom, making accurate tracking a complex challenge.<ref name="HandDoF" /> Hand tracking has evolved from early wired prototypes in the 1970s to sophisticated, software-driven solutions integrated into consumer devices like the [[Meta Quest]] series, [[Microsoft HoloLens 2]], and [[Apple Vision Pro]].

Hand tracking is a cornerstone of [[human-computer interaction]] in [[spatial computing]]. Modern systems commonly provide a per-hand skeletal pose (for example, joints and bones), expose this data to applications through standard APIs (such as [[OpenXR]] and [[WebXR]]), and pair it with higher-level interaction components (for example, poke, grab, raycast) for robust user experiences across devices.<ref name="OpenXR11" /><ref name="WebXRHand" />

== History ==


=== Early Developments (1970s–1990s) ===

The foundational milestone occurred in 1977 with the invention of the '''[[Sayre Glove]]''', a wired data glove developed by electronic visualization pioneer Daniel Sandin and computer graphics researcher Thomas DeFanti at the University of Illinois at Chicago's Electronic Visualization Laboratory (EVL). Inspired by an idea from colleague Rich Sayre, the glove used optical flex sensors, light emitters paired with photocells embedded in the fingers, to measure joint angles and finger bends. Light intensity variations were converted into electrical signals, enabling basic gesture recognition and hand posture tracking for early VR simulations.<ref name="SayreGlove" /><ref name="SenseGlove" /> This device, considered the first data glove, established the principle of measuring finger flexion for computer input.

In 1983, Gary Grimes of Bell Labs developed the '''[[Digital Data Entry Glove]]''', a more sophisticated system patented as an alternative to keyboard input. This device integrated flex sensors, touch sensors, and tilt sensors to recognize unique hand positions corresponding to alphanumeric characters, specifically gestures from the American Sign Language manual alphabet.<ref name="BellGlove" />


=== 2000s: Sensor Fusion and Early Commercialization ===

The 2000s saw the convergence of hardware and software for multi-modal tracking. External devices like data gloves with fiber-optic sensors (for example, Fifth Dimension Technologies' 5DT Glove) combined bend sensors with IMUs to capture 3D hand poses. Software frameworks began processing fused data for virtual hand avatars. However, these remained bulky and controller-dependent, with limited adoption outside research labs.<ref name="VirtualSpeech" />

In the late 1990s and early 2000s, camera-based gesture recognition began to be explored outside of VR; for instance, computer vision researchers worked on interpreting hand signs for sign language or basic gesture control of computers. However, real-time markerless hand tracking in 3D was extremely challenging with the processing power then available.

=== 2010s: Optical Tracking and Controller-Free Era ===


In the 2020s, hand tracking became an expected feature in many XR devices. An analysis by SpectreXR noted that the percentage of new VR devices supporting hand tracking jumped from around 21% in 2021 to 46% in 2022, as more manufacturers integrated the technology.<ref name="SpectreXR2023" /> At the same time, the cost barrier dropped dramatically, with the average price of hand-tracking-capable VR headsets falling by approximately 93% from 2021 to 2022, making the technology far more accessible.<ref name="SpectreXR2023" />

Another milestone was Apple's introduction of the '''[[Apple Vision Pro]]''' (released 2024), which relies on hand tracking along with [[eye tracking]] as the primary input method for a spatial computer, completely doing away with handheld controllers. Apple's implementation allows users to make micro-gestures, such as pinching fingers at waist level, that are tracked by downward-facing cameras; combined with eye gaze, these let users control the interface with little physical effort.<ref name="AppleGestures" /><ref name="UploadVR2023" /> This high-profile adoption has been seen as a strong endorsement of hand tracking for mainstream XR interaction.

By 2025, hand tracking is standard in many XR devices, with latencies under 70 ms and applications spanning gaming to medical simulations.


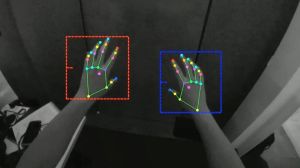

A common approach is using one or more infrared or RGB cameras to visually capture the hands and then employing computer vision algorithms to recognize the hand's pose (the positions of the palm and each finger joint) in 3D space. Advanced [[machine learning]] models are often trained to detect keypoints of the hand (such as knuckle and fingertip positions) from the camera images, reconstructing an articulated hand model that updates as the user moves. A typical pipeline includes:

# '''Detection''': Find hands in the camera frame (often with a palm detector)
# '''Landmark regression''': Predict 2D/3D keypoints for wrist and finger joints (commonly 21 landmarks per hand in widely used models)<ref name="MediaPipeHands" />
# '''Pose / mesh estimation''': Fit a kinematic skeleton or hand mesh consistent with human biomechanics for stable interaction and animation
# '''Temporal smoothing & prediction''': Filter jitter and manage short occlusions for responsive feedback

This positional data is then provided to the VR/AR system (often through standard interfaces like [[OpenXR]]) so that applications can respond to the user's hand gestures and contacts with virtual objects. Google's MediaPipe Hands, for example, infers 21 3D landmarks per hand from a single RGB frame and runs in real time on mobile-class hardware, illustrating the efficiency of modern approaches.<ref name="MediaPipeHands" />
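The temporal smoothing step of the pipeline above can be illustrated with a small sketch. It is not taken from any particular tracker: the class name `LandmarkSmoother`, the blending weight `alpha`, and the `max_missed_frames` hold time are all hypothetical choices, and production systems typically use more elaborate filters and predictors. The sketch applies exponential smoothing to per-frame landmarks and holds the last pose for a few frames when detection drops out.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point3 = Tuple[float, float, float]


@dataclass
class LandmarkSmoother:
    """Exponential smoothing over hand landmarks (hypothetical helper)."""
    alpha: float = 0.5           # weight of the newest observation, 0 < alpha <= 1
    max_missed_frames: int = 3   # frames to hold the last pose during occlusion
    _state: Optional[List[Point3]] = field(default=None, init=False)
    _missed: int = field(default=0, init=False)

    def update(self, landmarks: Optional[List[Point3]]) -> Optional[List[Point3]]:
        """Feed one frame; pass None when the detector found no hand."""
        if landmarks is None:
            # Short occlusion: keep reporting the last pose, then give up.
            self._missed += 1
            if self._missed > self.max_missed_frames:
                self._state = None
            return self._state
        self._missed = 0
        if self._state is None:
            # First valid frame (or first after tracking loss): no history yet.
            self._state = list(landmarks)
        else:
            a = self.alpha
            # Blend each coordinate of each landmark with its previous value.
            self._state = [
                tuple(a * n + (1 - a) * o for n, o in zip(new, old))
                for new, old in zip(landmarks, self._state)
            ]
        return self._state
```

A lower `alpha` suppresses more jitter at the cost of added lag, which is the trade-off the smoothing and prediction stage has to balance.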


Some systems augment or replace optical tracking with active depth sensing such as [[LiDAR]] or structured light infrared systems. These emit light (laser or IR LED) and measure its reflection to more precisely determine the distance and shape of hands, even in low-light conditions. LiDAR-based hand tracking can capture 3D positions with high precision and is less affected by ambient lighting or distance than pure camera-based methods.<ref name="VRExpert2023" />

Ultraleap's hand tracking module (for example, the Stereo IR 170 sensor) projects IR light and uses two IR cameras to track hands in 3D, allowing for robust tracking under various lighting conditions. This module has been integrated into devices like the Varjo VR-3/XR-3 and certain [[Pico]] headsets to provide built-in hand tracking.<ref name="SoundxVision" /><ref name="VRExpert2023" /> Active depth systems (for example, [[time-of-flight camera|Time-of-Flight]] or [[structured light]]) project or emit IR to recover per-pixel depth, improving robustness in low light and during complex hand poses. Several headsets integrate IR illumination to make hands stand out for monochrome sensors. Some [[mixed reality]] devices also include dedicated scene depth sensors that aid perception and interaction.

Optical hand tracking is generally affordable to implement since it can leverage the same camera hardware used for environment tracking or passthrough video. However, its performance can be affected by the cameras' field of view, lighting conditions, and frame rate. If the user's hands move outside the view of the cameras or lighting is poor, tracking quality will suffer. Improvements in computer vision and AI have steadily increased the accuracy and robustness of optical hand tracking, enabling features like two-hand interactions and fine finger gesture detection.<ref name="VRExpert2023" />


* '''[[OpenXR]]''': A cross-vendor API from the Khronos Group. Version 1.1 (April 2024) consolidated hand tracking into the core specification, folding common extensions and providing standardized hand-tracking data structures and joint hierarchies across devices, easing portability for developers. The XR_EXT_hand_tracking extension provides 26 joint locations with standardized hierarchy.<ref name="OpenXR11" />
* '''[[WebXR]] Hand Input Module''' (W3C): The Level 1 specification represents the W3C standard for browser-based hand tracking, enabling web applications to access articulated hand pose data (for example, joint poses) so web apps can implement hands-first interaction.<ref name="WebXRHand" />
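To make the 26-joint layout mentioned for XR_EXT_hand_tracking concrete, the following sketch enumerates the joints in the order used by the extension's joint enumeration: palm, wrist, four thumb joints, and five joints for each remaining finger. The Python names and the helper `hand_joint_names` are illustrative only; the actual C identifiers take the form `XR_HAND_JOINT_*_EXT`.

```python
FINGERS = ("THUMB", "INDEX", "MIDDLE", "RING", "LITTLE")


def hand_joint_names():
    """Return the 26 joint names in enumeration order (illustrative helper)."""
    names = ["PALM", "WRIST"]
    for finger in FINGERS:
        segments = ["METACARPAL", "PROXIMAL"]
        if finger != "THUMB":
            # The thumb has no intermediate joint, so it contributes 4 entries.
            segments.append("INTERMEDIATE")
        segments += ["DISTAL", "TIP"]
        names += [f"{finger}_{segment}" for segment in segments]
    return names


JOINTS = hand_joint_names()
# Map each joint name to its index, e.g. for looking up a pose in a joint array.
JOINT_INDEX = {name: i for i, name in enumerate(JOINTS)}
```

An application that receives an array of 26 joint poses could use `JOINT_INDEX["INDEX_TIP"]` to find the fingertip entry, which is the kind of portability a shared joint hierarchy is meant to provide.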

== Notable Platforms ==


| [[Apple Vision Pro]] || Multi-camera, IR illumination, [[LiDAR]] scene sensing; eye-hand fusion || "Look to target, pinch to select", flick to scroll; relaxed, low-effort micro-gestures || Hand + eye as primary input paradigm in visionOS<ref name="AppleGestures" />

|-

| [[Ultraleap]] modules (for example, Controller 2, Stereo IR) || Stereo IR + LEDs; skeletal model || Robust two-hand support; integrations for Unity/Unreal/OpenXR || Widely embedded in enterprise headsets (for example, Varjo XR-3/VR-3)<ref name="UltraleapDocs" /><ref name="VarjoUltraleap" />

|}


=== [[Ray-Based Selection]] (Indirect Interaction) ===

For distant objects beyond arm's reach, a virtual ray (from palm, fingertip, or index direction) targets distant UI elements. Users perform a gesture (for example, a pinch) to activate or select the targeted item. This allows interaction with objects throughout the virtual environment without physical reach limitations.
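As a rough illustration of ray-based selection, the sketch below casts a ray from a hand position and picks the nearest target it hits, treating each target as a sphere. The function names, the `(name, center, radius)` target tuples, and the spherical approximation are assumptions made for this example; real toolkits usually test against UI colliders and add aiming stabilization.

```python
import math


def ray_sphere_hit(origin, direction, center, radius):
    """Distance along the ray to a sphere's surface, or None if it is missed."""
    length = math.sqrt(sum(c * c for c in direction))
    unit = tuple(c / length for c in direction)
    to_center = tuple(c - o for o, c in zip(origin, center))
    # Distance along the ray to the point closest to the sphere center.
    t_closest = sum(a * b for a, b in zip(to_center, unit))
    if t_closest < 0:
        return None  # target center lies behind the hand; ignored in this sketch
    dist_sq = sum(c * c for c in to_center) - t_closest ** 2
    if dist_sq > radius ** 2:
        return None  # ray passes outside the sphere
    return max(0.0, t_closest - math.sqrt(radius ** 2 - dist_sq))


def pick_target(origin, direction, targets):
    """Name of the nearest target hit by the ray, or None."""
    best_name, best_t = None, math.inf
    for name, center, radius in targets:
        t = ray_sphere_hit(origin, direction, center, radius)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    return best_name


def select(origin, direction, targets, is_pinching):
    """The hovered target becomes selected only while the activation gesture is held."""
    hovered = pick_target(origin, direction, targets)
    return hovered if is_pinching else None
```

Separating hovering (`pick_target`) from activation (`select`) mirrors the text: the ray only targets, and the gesture confirms.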

=== Multimodal Interaction ===

Combining hand tracking with other inputs enhances interaction:

* '''[[Gaze-and-pinch]]''' ([[Apple Vision Pro]]): [[Eye tracking]] rapidly targets UI elements, while a subtle pinch gesture confirms selection. This is the primary paradigm on [[Apple Vision Pro]], allowing control without holding up hands constantly; a brief pinch at waist level suffices.<ref name="AppleGestures" /><ref name="UploadVR2023" />
* '''[[Voice]] and [[gesture]]''': Verbal commands with hand confirmation
* '''Hybrid controller/hands''': Seamless switching between modalities

=== Gesture Commands ===

Beyond direct object manipulation, hand tracking can facilitate recognition of symbolic gestures that act as commands. This is analogous to how touchscreens support multi-touch gestures (pinch to zoom, swipe to scroll). In XR, certain hand poses or movements can trigger actions: for example, making a pinching motion can act as a click or selection, a thumbs-up might trigger an event, or specific sign language gestures could be interpreted as system commands.
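A pinch used as a click can be sketched as a threshold on the distance between the thumb tip and index fingertip. The example below is a minimal illustration, not any platform's actual recognizer: the class name `PinchDetector` and the 15 mm and 25 mm thresholds are assumed values, and positions are taken to be in meters. Two thresholds (hysteresis) are used so that tracking jitter near the boundary does not produce repeated clicks.

```python
import math


class PinchDetector:
    """Turns thumb/index fingertip distance into pinch events (illustrative)."""

    def __init__(self, press_m=0.015, release_m=0.025):
        # Assumed thresholds: start below 15 mm, end above 25 mm.
        self.press_m = press_m
        self.release_m = release_m
        self.pinching = False

    def update(self, thumb_tip, index_tip):
        """Return "pinch_start", "pinch_end", or None for one tracking frame."""
        distance = math.dist(thumb_tip, index_tip)
        if not self.pinching and distance < self.press_m:
            self.pinching = True
            return "pinch_start"
        if self.pinching and distance > self.release_m:
            self.pinching = False
            return "pinch_end"
        return None
```

An application would typically treat `"pinch_start"` like a button press and `"pinch_end"` like its release, which also allows drag interactions while the pinch is held.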

=== User Interface Navigation ===


* '''System UI & Productivity''': Controller-free navigation, window management, and typing/pointing surrogates in spatial desktops. Natural file manipulation, multitasking across virtual screens, and interface control without handheld devices.<ref name="AppleGestures" />
* '''Gaming & Entertainment''': Titles such as ''Hand Physics Lab'' showcase free-hand puzzles and physics interactions using optical hand tracking on Quest.<ref name="HPL_RoadToVR" /> Games and creative applications use hand interactions; for example, a puzzle game might let the player literally reach out and grab puzzle pieces in VR, and users can play a virtual piano or work clay in pottery simulations.
* '''Training & Simulation''': Natural hand use improves ecological validity for assembly, maintenance, and surgical rehearsal in enterprise, medical, and industrial contexts.<ref name="Frontiers2021" /> Workers can practice complex procedures in safe virtual environments, developing muscle memory that transfers to real-world tasks.


* '''Social and Collaborative VR''': In multi-user virtual environments, hand tracking enhances communication and embodiment. Subtle hand motions and finger movements can be transmitted to one's avatar, allowing for richer non-verbal communication such as waving, pointing things out to others, or performing shared gestures. This mirrors real life and can make remote collaboration or socializing feel more natural.<ref name="VarjoSupport" />
* '''Accessibility & Rehabilitation''': Because hand tracking removes the need to hold controllers, it can make VR and AR more accessible to people who may not be able to use standard game controllers. Users with certain physical disabilities or limited dexterity might find hand gestures easier. In addition, the technology has been explored for rehabilitation exercises; for example, stroke patients could do guided therapy in VR using their hands to perform tasks and regain motor function, with the system tracking their movements and providing feedback. It also reduces dependence on handheld controllers in shared or constrained environments.<ref name="Frontiers2021" />
* '''Healthcare & Medical''': AR HUD (heads-up display) interactions in medical contexts allow surgeons to manipulate virtual panels without touching anything physically, maintaining sterile fields. Medical training simulations benefit from realistic hand interactions.


* On '''[[Microsoft HoloLens 2]]''', a 2024 study comparing its hand tracking against a Vicon motion-capture reference found millimeter-scale fingertip errors (approximately 2–4 mm) in a tracing task, with good agreement for pinch span and many grasping joint angles.<ref name="HL2Accuracy" />

Real-world performance also depends on lighting, hand pose, occlusions (for example, fingers hidden by other fingers), camera field of view, and motion speed. Runtime predictors reduce jitter and tracking loss but cannot eliminate these effects entirely.<ref name="Frontiers2021" /><ref name="MetaHands21" />

== Advantages ==


* '''Enhanced Immersion''': Removing intermediary devices (like controllers or wands) can increase presence. When users see their virtual hands mimicking their every finger wiggle, it reinforces the illusion that they are "inside" the virtual environment. The continuity between real and virtual actions (especially in MR, where users literally see their physical hands interacting with digital objects) can be compelling.
* '''Expressiveness''': Hands allow a wide range of gesture expressions. In contrast to a limited set of controller buttons, hand tracking can capture nuanced movements. This enables richer interactions (such as sculpting a 3D model with complex hand movements) and communication (subtle social gestures, sign language, etc.). This is important for social presence: waving, pointing, and subtle finger cues enhance non-verbal communication.
* '''Hygiene & Convenience''': Especially in public or shared XR setups, hand tracking can be advantageous since users do not need to touch common surfaces or devices. Touchless interfaces have gained appeal for reducing contact points. Moreover, not having to pick up or hold hardware means quicker setup and freedom to use one's hands spontaneously (for example, switching between real objects and the virtual interface simply by moving the hands). No shared controllers are required, and users can switch quickly between physical tools and virtual UI.

== Challenges and Limitations ==


=== Technical Limitations ===

* '''Occlusion & Field of View''': Self-occluding poses (for example, fists or crossed fingers) and hands leaving the camera FOV can cause tracking loss. Predictive tracking mitigates this but cannot remove it. Ensuring that hand tracking works in all conditions is difficult. Optical systems can struggle with poor lighting, motion blur from fast hand movements, or hands leaving the camera's field of view (for example, when reaching behind one's back). Even depth cameras have trouble if the sensors are occluded or if reflective surfaces confuse the measurements.<ref name="Frontiers2021" /><ref name="MediaPipeHands" />
* '''Latency & Fast Motion''': Even a 70 ms delay can feel disconnected. Fast motion burdens mobile compute. Continuous updates (for example, Quest "Hands 2.x") have narrowed gaps to controllers but not eliminated them. There can also be a slight latency in hand tracking responses due to processing, which, if not minimized, can affect user performance.<ref name="MetaHands22" />
* '''Lighting & Reflectance Sensitivity''': Purely optical methods remain sensitive to extreme lighting conditions and reflective surfaces, though IR illumination helps.<ref name="Frontiers2021" />


=== Neural Networks for Better Prediction ===

There is active research into using neural networks for better prediction of occluded or fast movements, and into augmenting hand tracking with other sensors (for example, using electromyography, which reads muscle signals in the forearm, to detect finger movements even before they are visible). All these efforts point toward making hand-based interaction more seamless, reliable, and richly interactive in the coming years.

=== Market Projections ===


<ref name="Orion">TechNewsWorld – "Leap Motion Unleashes Orion" (2016-02-18). URL: https://www.technewsworld.com/story/leap-motion-unleashes-orion-83129.html</ref>

<ref name="UltrahapticsAcq">The Verge – "Hand-tracking startup Leap Motion reportedly acquired by UltraHaptics" (2019-05-30). URL: https://www.theverge.com/2019/5/30/18645604/leap-motion-vr-hand-tracking-ultrahaptics-acquisition-rumor</ref>

<ref name="Meta2019">Meta – "Introducing Hand Tracking on Oculus Quest - Bringing Your Real Hands into VR" (2019). URL: https://www.meta.com/blog/introducing-hand-tracking-on-oculus-quest-bringing-your-real-hands-into-vr/</ref>

<ref name="SpectreXR2022">SpectreXR Blog – "Brief History of Hand Tracking in Virtual Reality" (Sept 7, 2022). URL: https://spectrexr.io/blog/news/brief-history-of-hand-tracking-in-virtual-reality</ref>

<ref name="Develop3D2019">Develop3D – "First Look at HoloLens 2" (Dec 20, 2019). URL: https://develop3d.com/reviews/first-look-hololens-2-microsoft-mixed-reality-visualisation-hmd/</ref>

Latest revision as of 00:29, 28 October 2025

Hand tracking is a computer vision-based technology used in virtual reality (VR), augmented reality (AR), and mixed reality (MR) systems to detect, track, and interpret the position, orientation, and movements of a user's hands and fingers in real time. Unlike traditional input methods such as controllers or gloves, hand tracking enables controller-free, natural interactions by leveraging cameras, sensors, and artificial intelligence (AI) algorithms to map hand poses into virtual environments.[1] This technology enhances immersion, presence, and usability in extended reality (XR) applications by allowing users to perform gestures like pointing, grabbing, pinching, and swiping directly with their bare hands.

Hand tracking systems typically operate using optical methods, such as infrared (IR) illumination and monochrome cameras, or visible-light cameras integrated into head-mounted displays (HMDs). Modern implementations report low-latency tracking (roughly 10–70 ms) with high accuracy and model up to 27 degrees of freedom (DoF) per hand to capture complex articulations.[2] The human hand itself has approximately 27 degrees of freedom, which is what makes accurate tracking such a complex challenge.[3] The technology has evolved from early wired prototypes in the 1970s to sophisticated, software-driven solutions integrated into consumer devices like the Meta Quest series, Microsoft HoloLens 2, and Apple Vision Pro.

Hand tracking is a cornerstone of human-computer interaction in spatial computing. Modern systems commonly provide a per-hand skeletal pose (for example joints and bones), expose this data to applications through standard APIs (such as OpenXR and WebXR), and pair it with higher-level interaction components (for example poke, grab, raycast) for robust user experiences across devices.[4][5]

History

The development of hand tracking in VR and AR traces back to early experiments in immersive computing during the 1970s, when researchers sought natural input methods beyond keyboards and mice. Key milestones reflect a progression from mechanical gloves to optical sensor-based systems, driven by advances in computing power, AI, and sensor miniaturization.

Early Developments (1970s–1990s)

The foundational milestone occurred in 1977 with the invention of the Sayre Glove, a wired data glove developed by electronic visualization pioneer Daniel Sandin and computer graphics researcher Thomas DeFanti at the University of Illinois at Chicago's Electronic Visualization Laboratory (EVL). Inspired by an idea from colleague Rich Sayre, the glove used optical flex sensors, light emitters paired with photocells embedded in the fingers, to measure joint angles and finger bends. Light intensity variations were converted into electrical signals, enabling basic gesture recognition and hand posture tracking for early VR simulations.[6][7] This device, considered the first data glove, established the principle of measuring finger flexion for computer input.

In 1983, Gary Grimes of Bell Labs developed the Digital Data Entry Glove, a more sophisticated system patented as an alternative to keyboard input. This device integrated flex sensors, touch sensors, and tilt sensors to recognize unique hand positions corresponding to alphanumeric characters, specifically gestures from the American Sign Language manual alphabet.[8]

The 1980s saw the emergence of the first commercially viable data gloves, largely driven by the work of Thomas Zimmerman and Jaron Lanier. Zimmerman patented an optical flex sensor in 1982 and later co-founded VPL Research with Lanier in 1985. VPL Research became the first company to sell VR hardware, including the iconic DataGlove, which was released commercially in 1987.[9][10] The DataGlove used fiber optic cables to measure finger bends and was typically paired with a Polhemus magnetic tracking system for positional data. This became an iconic symbol of early VR technology.

In 1989, Mattel released the Nintendo Power Glove, a low-cost consumer version licensed from VPL Research, which used resistive ink flex sensors and ultrasonic emitters for positional tracking. This was the first affordable, mass-market data glove for consumers, popularizing the concept of gestural control in gaming.[11]

Throughout the 1990s, hand tracking advanced with the integration of more sophisticated sensors into VR systems. Researchers at MIT's Media Lab built on the Sayre Glove, incorporating electromagnetic and inertial measurement unit (IMU) sensors for improved positional tracking. These systems, often tethered to workstations, supported rudimentary interactions but were limited by wiring and low resolution. The CyberGlove (early 1990s) by Virtual Technologies used thin foil strain gauges sewn into fabric to measure up to 22 joint angles, becoming a high-precision glove used in research and professional applications.[12]

| Device Name | Year | Key Individuals/Organizations | Core Technology | Historical Significance |

|---|---|---|---|---|

| Sayre Glove | 1977 | Daniel Sandin, Thomas DeFanti (University of Illinois) | Light-based sensors using flexible tubes and photocells to measure light attenuation | First data glove prototype; established finger flexion measurement for computer input |

| Digital Data Entry Glove | 1983 | Gary Grimes (Bell Labs) | Multi-sensor system with flex, tactile, and inertial sensors | Pioneered integration of multiple sensor types for complex gesture recognition |

| VPL DataGlove | 1987 | Thomas Zimmerman, Jaron Lanier (VPL Research) | Fiber optic cables with LEDs and photosensors | First commercially successful data glove; iconic early VR technology |

| Power Glove | 1989 | Mattel (licensed from VPL) | Low-cost resistive ink flex sensors and ultrasonic tracking | First affordable mass-market data glove for consumers |

| CyberGlove | Early 1990s | Virtual Technologies, Inc. | Thin foil strain gauges measuring up to 22 joint angles | High-precision glove for research and professional applications |

2000s: Sensor Fusion and Early Commercialization

The 2000s saw the convergence of hardware and software for multi-modal tracking. External devices like data gloves with fiber-optic sensors (for example Fifth Dimension Technologies' 5DT Glove) combined bend sensors with IMUs to capture 3D hand poses. Software frameworks began processing fused data for virtual hand avatars. However, these remained bulky and controller-dependent, with limited adoption outside research labs.[10]

In the late 1990s and early 2000s, camera-based gesture recognition began to be explored outside of VR; for instance, computer vision researchers worked on interpreting hand signs for sign language and on basic gesture control of computers. However, real-time markerless hand tracking in 3D was extremely challenging with the processing power then available.

2010s: Optical Tracking and Controller-Free Era

A pivotal shift occurred in 2010 with the founding of Leap Motion (later Ultraleap) by Michael Buckwald, David Holz, and John Gibb, who aimed to create affordable, high-precision optical hand tracking. In 2010, Microsoft also released the Kinect sensor for Xbox 360, which popularized the use of depth cameras for full-body and hand skeleton tracking in gaming and research.[13]

The Leap Motion Controller, released in 2013, was a small USB device that used two IR cameras and three IR LEDs to track both hands at up to 200 Hz over an interactive zone of roughly 60 cm × 60 cm above the device, with a specified precision down to approximately 0.01 mm.[14][15] It brought controller-free, gesture-based input to VR: developers and enthusiasts mounted Leap Motion sensors onto VR headsets to experiment with hand input, spurring further interest in the technique.

In 2016, Leap Motion's Orion software update improved robustness against occlusion and lighting variations, boosting adoption in VR development.[16] The company was acquired by Ultrahaptics in 2019, rebranding as Ultraleap and expanding into mid-air haptics.[17]

On the AR side, Microsoft HoloLens (first version, 2016) included simple gesture input such as "air tap" and "bloom" using camera-based hand recognition, though with limited gestures rather than full tracking.

By the late 2010s, inside-out tracking cameras became standard in new VR and AR hardware, and companies began leveraging them for hand tracking. The Oculus Quest, a standalone VR headset released in 2019, initially launched with traditional controller input. Hand tracking was announced at Oculus Connect 6 in September 2019, and an experimental update in late 2019 introduced controller-free hand tracking driven by the headset's built-in monochrome cameras and AI.[18][19] This made the Quest one of the first mainstream VR devices to offer native hand tracking to consumers, with robust performance overall but some limitations in fast motion and at certain angles.

The Microsoft HoloLens 2 (2019) greatly expanded hand tracking capabilities with fully articulated tracking, allowing users to touch and grasp virtual elements directly. The system tracked 25 points of articulation per hand, demonstrating the benefit of more natural interactions for enterprise AR use cases and eliminating the limited "air tap" gestures of its predecessor.[20][21]

2020s: AI-Driven Refinements and Mainstream Integration

The 2020s brought AI enhancements and broader integration. Meta's MEgATrack (2020), deployed on Quest, used four fisheye monochrome cameras and neural networks, running at 60 Hz on PC and 30 Hz on mobile hardware, with low jitter and large working volumes.[22]

Ultraleap's Gemini software update (2021) was a major overhaul that improved two-hand interactions and initialization speed for stereo-IR modules and integrated OEM headsets.[23]

Meta continued improving the feature over time with successive updates. The Hands 2.1 update (2022) and Hands 2.2 update (2023) reduced apparent latency and improved fast-motion handling and recovery after tracking loss.[24][25] Subsequent devices like the Meta Quest Pro (2022) and Meta Quest 3 (2023) included more advanced camera systems and neural processing to further refine hand tracking.

In the 2020s, hand tracking became an expected feature in many XR devices. An analysis by SpectreXR noted that the percentage of new VR devices supporting hand tracking jumped from around 21% in 2021 to 46% in 2022, as more manufacturers integrated the technology.[26] At the same time, the cost barrier dropped dramatically, with the average price of hand-tracking-capable VR headsets falling by approximately 93% from 2021 to 2022, making the technology far more accessible.[26]

Another milestone was Apple's introduction of the Apple Vision Pro (released 2024), which relies on hand tracking along with eye tracking as the primary input method for a spatial computer, completely doing away with handheld controllers. Apple's implementation allows users to make micro-gestures like pinching fingers at waist level, tracked by downward-facing cameras; combined with eye gaze, this lets users control the interface with little physical effort.[27][28] This high-profile adoption has been seen as a strong endorsement of hand tracking for mainstream XR interaction.

By 2025, hand tracking is standard in many XR devices, with latencies under 70 ms and applications spanning gaming to medical simulations.

| Year | Development / System | Notes |

|---|---|---|

| 1977 | Sayre Glove | First instrumented glove for virtual interaction using light sensors and photocells |

| 1987 | VPL DataGlove | First commercial data glove measuring finger bend and hand orientation |

| 2010 | Microsoft Kinect | Popularized full-body and hand tracking using IR depth camera for gaming |

| 2013 | Leap Motion Controller | Small USB peripheral with dual infrared cameras for high-precision hand tracking |

| 2016 | HoloLens (1st gen) | AR headset with gesture input (air tap and bloom) |

| 2019 | Oculus/Meta Quest (update) | First mainstream VR device with fully integrated controller-free hand tracking |

| 2019 | HoloLens 2 | Fully articulated hand tracking (25 points per hand) for direct manipulation |

| 2022 | Meta Quest Pro | Improved cameras and dedicated computer vision co-processor for responsive hand tracking |

| 2023 | Meta Quest 3 | Enhanced hand tracking with improved latency and accuracy |

| 2024 | Apple Vision Pro | Mixed reality device (announced 2023, released 2024) using hand and eye tracking as primary input, eliminating controllers |

Technology and Implementation

Most contemporary implementations are markerless (no gloves or markers required), relying on cameras and sensors to detect hand motions rather than requiring wearables. They estimate hand pose directly from one or more cameras using computer vision and neural networks, optionally fused with active depth sensing.[1]

Optical and AI Pipeline

A common approach is using one or more infrared or RGB cameras to visually capture the hands and then employing computer vision algorithms to recognize the hand's pose (the positions of the palm and each finger joint) in 3D space. Advanced machine learning models are often trained to detect keypoints of the hand (such as knuckle and fingertip positions) from the camera images, reconstructing an articulated hand model that updates as the user moves. A typical pipeline includes:

- Detection: Find hands in the camera frame (often with a palm detector)

- Landmark regression: Predict 2D/3D keypoints for wrist and finger joints (commonly 21 landmarks per hand in widely used models)[29]

- Pose / mesh estimation: Fit a kinematic skeleton or hand mesh consistent with human biomechanics for stable interaction and animation

- Temporal smoothing & prediction: Filter jitter and manage short occlusions for responsive feedback

This positional data is then provided to the VR/AR system (often through standard interfaces like OpenXR) so that applications can respond to the user's hand gestures and contacts with virtual objects. Google's MediaPipe Hands, for example, infers 21 3D landmarks per hand from a single RGB frame and runs in real time on mobile-class hardware, illustrating the efficiency of modern approaches.[29]
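The temporal smoothing stage of the pipeline above can be illustrated with a minimal sketch. It assumes landmarks arrive as a list of (x, y, z) tuples per frame and uses a plain exponential moving average; the class name `LandmarkSmoother` and the `alpha` parameter are hypothetical, and production runtimes typically use more adaptive filters and prediction than this.

```python
from typing import List, Optional, Tuple

Point = Tuple[float, float, float]


class LandmarkSmoother:
    """Exponential moving average over per-frame hand landmarks (illustrative only)."""

    def __init__(self, alpha: float = 0.5) -> None:
        # alpha close to 1.0 follows the raw input; close to 0.0 smooths heavily.
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha
        self._state: Optional[List[Point]] = None

    def update(self, landmarks: List[Point]) -> List[Point]:
        """Blend the new frame into the running estimate and return it."""
        if self._state is None or len(self._state) != len(landmarks):
            # First frame (or landmark count changed): start from the raw input.
            self._state = [tuple(p) for p in landmarks]
        else:
            a = self.alpha
            self._state = [
                tuple(a * new + (1.0 - a) * old for new, old in zip(p, q))
                for p, q in zip(landmarks, self._state)
            ]
        return self._state

    def reset(self) -> None:
        """Forget history, for example after tracking loss."""
        self._state = None
```

A lower `alpha` removes more jitter but adds lag, which is the trade-off that motivates the prediction step in real systems.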

Inside-Out vs Outside-In Tracking

Hand tracking can be classified by the origin of the tracking sensors:

- Inside-out tracking (egocentric): Cameras or sensors are mounted on the user's own device (headset or glasses), watching the hands from the user's perspective. This is common in standalone VR headsets and AR glasses, as it allows free movement without external setup. It is an optical method in which the on-board camera images are analyzed over time to infer hand movements and gestures. The downside is that hands can only be tracked when in view of the device's sensors, and tracking from a single perspective can lose some accuracy (especially for motions where one hand occludes the other).[30]

- Outside-in tracking (exocentric): External sensors (such as multiple camera towers or depth sensors placed in the environment) track the user's hands (and body) within a designated area or "tracking volume." With sensors capturing from multiple angles, outside-in tracking can achieve very precise and continuous tracking, since the hands are less likely to be occluded from all viewpoints at once. This method was more common in earlier VR setups and research settings (for example, using external motion capture cameras). Outside-in systems are less common in consumer AR/VR today due to setup complexity, but they remain in use for certain professional or room-scale systems requiring high fidelity.[30]

Depth Sensing and Infrared

Some systems augment or replace optical tracking with active depth sensing such as LiDAR or structured light infrared systems. These emit light (laser or IR LED) and measure its reflection to more precisely determine the distance and shape of hands, even in low-light conditions. LiDAR-based hand tracking can capture 3D positions with high precision and is less affected by ambient lighting or distance than pure camera-based methods.[30]

Ultraleap's hand tracking module (for example the Stereo IR 170 sensor) projects IR light and uses two IR cameras to track hands in 3D, allowing for robust tracking under various lighting conditions. This module has been integrated into devices like the Varjo VR-3/XR-3 and certain Pico headsets to provide built-in hand tracking.[31][30] Active depth systems (for example Time-of-Flight or structured light) project or emit IR to recover per-pixel depth, improving robustness in low light and during complex hand poses. Several headsets integrate IR illumination to make hands stand out for monochrome sensors. Some mixed reality devices also include dedicated scene depth sensors that aid perception and interaction.
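The geometry behind the stereo-camera and time-of-flight approaches described above can be sketched in a few lines. This shows only the textbook relations (depth from disparity for a rectified stereo pair, and distance from round-trip time), not any vendor's actual algorithm; the function names and the example camera parameters are assumptions.

```python
SPEED_OF_LIGHT_M_S = 299_792_458.0


def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def distance_from_time_of_flight(round_trip_s: float) -> float:
    """Distance to a surface from the round-trip time of an emitted light pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # The light travels to the hand and back, so halve the path length.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


# Hypothetical numbers: 400 px focal length, 4 cm baseline, 40 px disparity.
hand_depth_m = depth_from_disparity(400.0, 0.04, 40.0)
```

Because depth is inversely proportional to disparity, small disparity errors matter more for distant hands, which is one reason such modules specify a limited interaction zone.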

Optical hand tracking is generally affordable to implement since it can leverage the same camera hardware used for environment tracking or passthrough video. However, its performance can be affected by the cameras' field of view, lighting conditions, and frame rate. If the user's hands move outside the view of the cameras or lighting is poor, tracking quality will suffer. Improvements in computer vision and AI have steadily increased the accuracy and robustness of optical hand tracking, enabling features like two-hand interactions and fine finger gesture detection.[30]

Instrumented Gloves

An alternative approach to camera-based tracking is the use of wired gloves or other wearables that directly capture finger movements. This was the earliest form of hand tracking in VR history. Modern glove-based controllers (often with haptic feedback) still exist, such as the HaptX gloves which combine precise motion tracking with force-feedback to simulate touch, or products like Manus VR gloves. These devices can provide very accurate finger tracking and even capture nuanced motions (like pressure or stretch) that camera systems might miss, but they require the user to wear hardware on their hands, which can be less convenient. Glove-based tracking is often used in enterprise and training applications or research, where maximum precision and feedback is needed.

Development Standards

Modern hand tracking systems expose data through standardized APIs to enable cross-platform development:

- OpenXR: A cross-vendor API from the Khronos Group. Articulated hand data is exposed through the XR_EXT_hand_tracking extension, which defines 26 joint locations per hand with a standardized naming and ordering, easing portability for developers across devices. Version 1.1 (April 2024) promoted a number of widely used extensions into the core specification.[4]

- WebXR Hand Input Module (W3C): The Level 1 specification is the W3C standard for browser-based hand tracking, giving web applications access to articulated hand pose data (for example joint poses) so they can implement hands-first interaction.[5]
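As an illustration of the 26-joint layout mentioned for OpenXR, the following sketch rebuilds the joint list in the order used by the XR_EXT_hand_tracking joint enumeration. It is a plain Python model rather than a binding to any runtime, and the `parent_of` helper encodes a common skeletal convention that is assumed here for illustration, not taken from the specification.

```python
from enum import IntEnum
from typing import Optional

# The thumb has no intermediate joint; the other four fingers have five joints each.
_FINGERS = {
    "THUMB": ("METACARPAL", "PROXIMAL", "DISTAL", "TIP"),
    "INDEX": ("METACARPAL", "PROXIMAL", "INTERMEDIATE", "DISTAL", "TIP"),
    "MIDDLE": ("METACARPAL", "PROXIMAL", "INTERMEDIATE", "DISTAL", "TIP"),
    "RING": ("METACARPAL", "PROXIMAL", "INTERMEDIATE", "DISTAL", "TIP"),
    "LITTLE": ("METACARPAL", "PROXIMAL", "INTERMEDIATE", "DISTAL", "TIP"),
}

_NAMES = ["PALM", "WRIST"] + [
    f"{finger}_{segment}" for finger, segments in _FINGERS.items() for segment in segments
]

# Values start at 0 so that PALM = 0, WRIST = 1, ..., LITTLE_TIP = 25.
HandJoint = IntEnum("HandJoint", _NAMES, start=0)


def parent_of(joint: HandJoint) -> Optional[HandJoint]:
    """Return the joint one step closer to the wrist (assumed convention)."""
    if joint is HandJoint.WRIST:
        return None
    if joint is HandJoint.PALM or joint.name.endswith("_METACARPAL"):
        return HandJoint.WRIST
    # Within a finger, joints are listed from the base outward.
    return HandJoint(joint.value - 1)
```

An application would typically index a per-frame array of joint poses with these values, for example reading `HandJoint.INDEX_TIP` and `HandJoint.THUMB_TIP` to test for a pinch.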

Notable Platforms

| Platform | Core Approach | Interaction Highlights | Notes |

|---|---|---|---|

| Meta Quest series (Quest, Quest 2, Quest 3, Quest Pro) | Inside-out mono/IR cameras; AI hand pose; runtime prediction | Direct manipulation, raycast, standardized system pinches; continual "Hands 2.x" latency and robustness improvements | Hand tracking first shipped (experimental) in 2019 on Quest; later updates reduced latency and improved fast motion handling[18][25][24] |

| Microsoft HoloLens 2 | Depth sensing + RGB; fully articulated hand joints | "Direct manipulation" (touch, grab, press) with tactile affordances (fingertip cursor, proximity cues) | Millimeter-scale fingertip errors reported vs. Vicon in validation studies[21][32] |

| Apple Vision Pro | Multi-camera, IR illumination, LiDAR scene sensing; eye-hand fusion | "Look to target, pinch to select", flick to scroll; relaxed, low-effort micro-gestures | Hand + eye as primary input paradigm in visionOS[27] |

| Ultraleap modules (for example Controller 2, Stereo IR) | Stereo IR + LEDs; skeletal model | Robust two-hand support; integrations for Unity/Unreal/OpenXR | Widely embedded in enterprise headsets (for example Varjo XR-3/VR-3)[2][33] |

Interaction Paradigms

Hand tracking opens up a wide range of interaction patterns in XR:

Direct Manipulation

Perhaps the most powerful use of hand tracking is the ability to naturally pick up, move, rotate, and release virtual objects using one's hands. Users reach out and interact with virtual objects as if physically present. This includes:

- Grabbing, rotating, and placing objects

- Pushing buttons, pulling levers, turning knobs

- Tapping and scrolling virtual interfaces

- Touching, poking, pressing, and pinching holograms or virtual controls within arm's reach

This is prominent in Microsoft HoloLens 2 design guidance for natural, tactile mental models.[21] In VR training and simulation, trainees can practice assembling a device by virtually grabbing parts and fitting them together with their hands. This direct manipulation is more immersive than using a laser pointer or controller buttons.

Ray-Based Selection (Indirect Interaction)

For distant objects beyond arm's reach, a virtual ray (from palm, fingertip, or index direction) targets distant UI elements. Users perform a gesture (for example pinch) to activate or select the targeted item. This allows interaction with objects throughout the virtual environment without physical reach limitations.
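A minimal sketch of ray-based targeting follows. It assumes each selectable element is approximated by a bounding sphere and that the ray origin and direction come from the tracked hand; the function names and the dictionary layout of `targets` are hypothetical.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]


def ray_hits_sphere(origin: Vec3, direction: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Return the distance along the ray to the first hit, or None on a miss."""
    length = math.sqrt(sum(c * c for c in direction))
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    d = tuple(c / length for c in direction)
    to_center = tuple(c - o for o, c in zip(origin, center))
    # Project the sphere center onto the ray to find the closest approach.
    t_closest = sum(a * b for a, b in zip(to_center, d))
    dist_sq = max(0.0, sum(a * a for a in to_center) - t_closest * t_closest)
    if dist_sq > radius * radius:
        return None
    half_chord = math.sqrt(radius * radius - dist_sq)
    t = t_closest - half_chord
    if t < 0.0:
        t = t_closest + half_chord  # ray starts inside the sphere
    return t if t >= 0.0 else None


def pick_target(origin: Vec3, direction: Vec3,
                targets: Dict[str, Tuple[Vec3, float]]) -> Optional[str]:
    """Return the name of the nearest target hit by the ray, if any."""
    best_name, best_t = None, math.inf
    for name, (center, radius) in targets.items():
        t = ray_hits_sphere(origin, direction, center, radius)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    return best_name
```

The element returned by `pick_target` would be highlighted while the ray hovers over it and activated when the selection gesture (for example a pinch) is detected.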

Multimodal Interaction

Combining hand tracking with other inputs enhances interaction:

- Gaze-and-pinch (Apple Vision Pro): Eye tracking rapidly targets UI elements, while a subtle pinch gesture confirms selection. This is the primary paradigm on Apple Vision Pro, allowing control without holding the hands up constantly: a brief pinch at waist level suffices.[27][28]

- Voice and gesture: Verbal commands with hand confirmation

- Hybrid controller/hands: Seamless switching between modalities

Gesture Commands

Beyond direct object manipulation, hand tracking can facilitate recognition of symbolic gestures that act as commands. This is analogous to how touchscreens support multi-touch gestures (pinch to zoom, swipe to scroll). In XR, certain hand poses or movements can trigger actions: a pinching motion can act as a click or selection, a thumbs-up might trigger an event, and specific sign language gestures could be interpreted as system commands.

Basic system UI controls can be operated by hand gestures. Users can point at menu items, pinch to click on a button, grab and drag virtual windows, or make a fist to select tools. Hand tracking allows for "touchless" interaction with virtual interfaces in a way that feels similar to interacting with physical objects or touchscreens, lowering the learning curve for new users.[34]
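A pinch-to-click gesture of the kind described above can be sketched as a distance test between the thumb tip and index fingertip. The thresholds below (1.5 cm to start, 3 cm to release) are assumed values chosen for illustration, and the two-threshold hysteresis is one common way to avoid flicker near the boundary rather than the method of any particular runtime.

```python
import math
from typing import Sequence


class PinchDetector:
    """Detect pinch start and end from fingertip distance, with hysteresis."""

    def __init__(self, press_m: float = 0.015, release_m: float = 0.03) -> None:
        if press_m >= release_m:
            raise ValueError("press threshold must be smaller than release threshold")
        self.press_m = press_m
        self.release_m = release_m
        self.is_pinching = False

    def update(self, thumb_tip: Sequence[float], index_tip: Sequence[float]) -> str:
        """Return 'pinch_start', 'pinch_end', 'pinching', or 'idle' for this frame."""
        distance = math.dist(thumb_tip, index_tip)
        if not self.is_pinching and distance < self.press_m:
            self.is_pinching = True
            return "pinch_start"
        if self.is_pinching and distance > self.release_m:
            self.is_pinching = False
            return "pinch_end"
        return "pinching" if self.is_pinching else "idle"
```

An application would treat `pinch_start` like a button press and `pinch_end` like a release, so a drag is simply the span of frames that report `pinching`.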

Applications

Hand tracking enables a wide array of applications across XR environments:

- System UI & Productivity: Controller-free navigation, window management, and typing/pointing surrogates in spatial desktops. Natural file manipulation, multitasking across virtual screens, and interface control without handheld devices.[27]

- Gaming & Entertainment: Titles such as Hand Physics Lab showcase free-hand puzzles and physics interactions using optical hand tracking on Quest.[35] Games and creative applications use hand interactions, for example a puzzle game might let the player literally reach out and grab puzzle pieces in VR, or users can play virtual piano or create pottery simulations.

- Training & Simulation: Natural hand use improves ecological validity for assembly, maintenance, and surgical rehearsal in enterprise, medical, and industrial contexts.[1] Workers can practice complex procedures in safe virtual environments, developing muscle memory that transfers to real-world tasks.

- Social and Collaborative VR: In multi-user virtual environments, hand tracking enhances communication and embodiment. Subtle hand motions and finger movements can be transmitted to one's avatar, allowing for richer non-verbal communication such as waving, pointing things out to others, or performing shared gestures. This mirrors real life and can make remote collaboration or socializing feel more natural.[34]

- Accessibility & Rehabilitation: Because hand tracking removes the need to hold controllers, it can make VR and AR more accessible to people who may not be able to use standard game controllers. Users with certain physical disabilities or limited dexterity might find hand gestures easier. The technology has also been explored for rehabilitation exercises; for example, stroke patients could do guided therapy in VR using their hands to perform tasks and regain motor function, with the system tracking their movements and providing feedback. It also reduces dependence on handheld controllers in shared or constrained environments.[1]

- Healthcare & Medical: AR HUD (heads-up display) interactions in medical contexts allow surgeons to manipulate virtual panels without touching anything physically, maintaining sterile fields. Medical training simulations benefit from realistic hand interactions.

- Sign Language Recognition: An AI-driven hand tracking system can interpret a user's signing into text or speech, bridging communication gaps for deaf and hard-of-hearing individuals.

- Other Professional Uses: Military personnel controlling AR interfaces with gestures, drivers controlling car dashboards with hand movements, operators controlling robots or drones via hand movements. These are areas of ongoing development in human-computer interaction.[31]

Performance and Accuracy

Measured performance varies by device and algorithm, but peer-reviewed evaluations provide useful bounds:

- On Meta Quest 2, a methodological study reported an average fingertip positional error of approximately 1.1 cm and temporal delay of approximately 45 ms for its markerless optical hand tracking, with approximately 9.6° mean finger-joint error under test conditions.[36]

- On Microsoft HoloLens 2, a 2024 study comparing to a Vicon motion-capture reference found millimeter-scale fingertip errors (approximately 2-4 mm) in a tracing task, with good agreement for pinch span and many grasping joint angles.[32]

Real-world performance also depends on lighting, hand pose, occlusions (for example fingers hidden by other fingers), camera field of view, and motion speed. Runtime predictors reduce jitter and tracking loss but cannot eliminate these effects entirely.[1][24]
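Positional error figures like those above are typically computed by comparing tracked positions with a reference system sample by sample. The sketch below shows one plausible form of that metric, the mean Euclidean distance, assuming both streams are already time-aligned and expressed in the same coordinate frame in metres; the function name is hypothetical and the cited studies may use different procedures.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def mean_position_error(tracked: Sequence[Vec3], reference: Sequence[Vec3]) -> float:
    """Mean Euclidean distance between paired tracked and reference positions."""
    if len(tracked) != len(reference):
        raise ValueError("tracked and reference must have the same number of samples")
    if not tracked:
        raise ValueError("at least one sample is required")
    total = sum(math.dist(t, r) for t, r in zip(tracked, reference))
    return total / len(tracked)
```

In practice the alignment step (synchronizing clocks and registering the two coordinate frames) often contributes as much uncertainty as the tracker itself, so it needs as much care as the metric.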

Advantages

Hand tracking as an input method offers several clear advantages in XR:

- Natural Interaction & Learnability: Using one's own hands is arguably the most intuitive form of interaction, as it leverages everyday gestures and skills humans use in the real world. New users can often immediately engage in VR/AR experiences via hand tracking without a long tutorial, since actions like reaching out or pointing feel obvious. This lowers the barrier to entry and can make experiences more engaging and realistic.[34][1]

- Enhanced Immersion: Removing intermediary devices (like controllers or wands) can increase presence. When users see their virtual hands mimicking their every finger wiggle, it reinforces the illusion that they are "inside" the virtual environment. The continuity between real and virtual actions (especially in MR, where users literally see their physical hands interacting with digital objects) can be compelling.

- Expressiveness: Hands allow a wide range of gesture expressions. In contrast to a limited set of controller buttons, hand tracking can capture nuanced movements. This enables richer interactions (such as sculpting a 3D model with complex hand movements) and communication (subtle social gestures, sign language, etc.). It is also important for social presence: waving, pointing, and subtle finger cues enhance non-verbal communication.

- Hygiene & Convenience: Especially in public or shared XR setups, hand tracking can be advantageous since users do not need to touch common surfaces or share controllers. Touchless interfaces have gained appeal for reducing contact points. Moreover, not having to pick up or hold hardware means quicker setup and the freedom to switch between physical tools and the virtual interface simply by moving one's hands.

Challenges and Limitations

Despite its promise, hand tracking technology comes with several challenges:

Technical Limitations

- Occlusion & Field of View: Self-occluding poses (for example fists, crossed fingers) and hands leaving the camera's field of view (for example reaching behind one's back) can cause tracking loss; predictive tracking mitigates this but cannot remove it. Optical systems can also struggle with poor lighting and with motion blur from fast hand movements, and even depth cameras have trouble if the sensors are occluded or if reflective surfaces confuse the measurements.[1][29]

- Latency & Fast Motion: Processing introduces a delay between a real movement and its virtual counterpart, and even a delay of around 70 ms can make the virtual hand feel disconnected and affect user performance. Fast motion is especially demanding for mobile compute. Continuous updates (for example Quest "Hands 2.x") have narrowed the gap to controllers but have not eliminated it.[25]

- Lighting & Reflectance Sensitivity: Purely optical methods remain sensitive to extreme lighting conditions and reflective surfaces, though IR illumination helps.[1]

- Precision: For certain types of input, hand tracking can be less precise than physical controllers. For example, selecting small UI elements or aiming in a fast-paced game might be harder without the tactile reference of a controller or the stability of a physical device. Some professional applications or games might still prefer dedicated controllers or tracked tools for fine-grained control.

- Tracking Reliability: Systems may lose track of a hand or mis-identify finger positions, which can be jarring. Developers often need to account for these errors by designing interfaces that tolerate some tracking loss.
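One way an interface can tolerate brief tracking loss, as suggested above, is to keep an ongoing grab alive for a short grace period instead of dropping the object on the first missing frame. The following is a minimal sketch of that idea; the class name `GrabHold` and the 0.25 s default are assumptions chosen for illustration.

```python
from typing import Optional


class GrabHold:
    """Keep a grab active through tracking dropouts shorter than a grace period."""

    def __init__(self, grace_s: float = 0.25) -> None:
        self.grace_s = grace_s
        self.holding = False
        self._lost_since: Optional[float] = None

    def update(self, now_s: float, tracked: bool, pinching: bool) -> bool:
        """Return whether the object should still be considered held."""
        if tracked:
            # Fresh data: trust the current gesture state.
            self._lost_since = None
            self.holding = pinching
        elif self.holding:
            # Hand not visible: remember when it vanished and wait briefly.
            if self._lost_since is None:
                self._lost_since = now_s
            if now_s - self._lost_since > self.grace_s:
                self.holding = False
        return self.holding
```

The grace period trades responsiveness for stability: a longer value hides more dropouts but delays the release when the hand has genuinely left the tracking volume.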

User Experience Challenges

- Lack of Physical Haptics: Using just hands in mid-air lacks the physical feedback that we rely on when manipulating real objects. Pressing a virtual button or grabbing a virtual item provides no resistive force to the user's hand, which can feel unnatural and make it hard to judge how firmly you're "holding" something. Without such feedback, users might also accidentally push through objects or fail to notice a contact. Mid-air interactions therefore rely on visual and audio feedback unless paired with haptic gloves or contactless haptics, and those additions reintroduce hardware.[1]

- Gorilla Arm Fatigue: Holding one's arms and hands up in front of the cameras for extended periods causes arm fatigue and shoulder strain, so interactions need to be designed to minimize sustained arm lift. Some systems like the Apple Vision Pro address this by relying on eye tracking for primary selection (so the user doesn't have to continuously hold up their hand).[28]

- Learning Curve for Abstract Gestures: While basic hand use is natural, specific gesture controls still need to be learned by users. If an application uses several custom hand poses or complex motion gestures for commands, users have to remember them, much like keyboard shortcuts. Overloading many gestures can also lead to discovery problems (how does a user know they can perform a certain gesture?). Designers must choose simple, distinct gestures and provide visual hints or tutorials for more complicated ones.[31]

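- Environmental Dependencies: Because most systems are optical, performance varies with lighting conditions and other environmental factors. Very dim rooms, or strong sunlight that washes out infrared illumination, can degrade tracking.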

- Hardware Requirements: High-quality hand tracking may require additional or more advanced hardware (extra cameras, depth sensors, or more powerful processors for the CV algorithms). This can increase device cost or power consumption. However, the trend has been that newer devices incorporate this by default as the cost comes down.

Future Developments

Neural Interfaces

Meta's electromyography (EMG) wristband program detects the neural signals that control the hand muscles, which could in principle enable zero or even "negative" latency by inferring movements before they are visibly performed. Mark Zuckerberg stated in 2024 that these wristbands will "ship in the next few years" as the primary input for AR glasses.[37] Separately, companies like Ultraleap have raised significant investment to advance hand tracking accuracy and to combine it with mid-air haptics (using ultrasound to provide tactile feedback without touch).[31]

Advanced Haptics

Research directions to address the lack of tactile feedback include:

- Ultrasonic mid-air haptics (Ultraleap)

- Haptic gloves with force feedback (HaptX, Manus VR)

- Electrical muscle stimulation

- Skin-integrated haptic patches

Neural Networks for Better Prediction

There is active research into using neural networks to better predict occluded or fast movements, and into augmenting hand tracking with other sensors (for example, using electromyography, which reads muscle signals in the forearm, to detect finger movements even before they are visible). All these efforts point toward making hand-based interaction more seamless, reliable, and richly interactive in the coming years.
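To make the prediction problem concrete, the sketch below shows a constant-velocity extrapolation, which is a simple non-learned baseline and not a neural network. It estimates where a joint will be at a future time from its two most recent tracked positions; a learned predictor would aim to outperform this on fast or occluded motion. The function name `extrapolate` and its parameters are hypothetical.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def extrapolate(p_prev: Vec3, t_prev: float,
                p_last: Vec3, t_last: float,
                t_query: float) -> Vec3:
    """Predict a joint position at t_query assuming constant velocity.

    Uses the two most recent tracked samples. If the samples are not
    strictly ordered in time, falls back to the last known position.
    """
    dt = t_last - t_prev
    if dt <= 0:
        return p_last
    lead = t_query - t_last   # how far ahead (in seconds) to predict
    x, y, z = (b + (b - a) / dt * lead for a, b in zip(p_prev, p_last))
    return (x, y, z)


# A fingertip that moved 2 cm along x in 10 ms, predicted 10 ms ahead.
print(extrapolate((0.00, 1.0, -0.3), 0.00, (0.02, 1.0, -0.3), 0.01, 0.02))
```

A baseline like this overshoots when the hand decelerates or changes direction, which is one reason more capable predictors are an area of research.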

Market Projections

The AR/VR market is projected to reach $214.82 billion by 2031 at a 31.70% compound annual growth rate (CAGR), with hand tracking as a key growth driver.[38]
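For readers who want to check such figures, compound annual growth follows end = start × (1 + rate)^years. The sketch below implements that relationship in both directions. The cited projection does not state its base year or base value here, so the 7-year horizon in the usage line is purely an illustrative assumption, and the function names are hypothetical.

```python
def project(start_value: float, rate: float, years: int) -> float:
    """Value after `years` of compound growth at annual `rate` (0.317 = 31.7%)."""
    return start_value * (1.0 + rate) ** years


def implied_start(end_value: float, rate: float, years: int) -> float:
    """Starting value implied by an end value, an annual rate, and a horizon."""
    return end_value / (1.0 + rate) ** years


# Assumption for illustration only: a 7-year horizon ending in 2031.
base = implied_start(214.82, 0.3170, 7)
print(f"Implied base under a 7-year assumption: ${base:.2f} billion")
```

Changing the assumed horizon changes the implied base substantially, so the projection can only be interpreted properly alongside the base year given in the cited source.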

See Also

- Gesture recognition

- Computer vision

- Virtual reality

- Augmented reality

- Mixed reality

- Motion capture

- Human-computer interaction

- Eye tracking

- Natural user interface

- Finger tracking

- Brain-computer interface

- Inside-out tracking

- Outside-in tracking

- Haptic technology

- Data glove

- Haptic glove

- OpenXR

- WebXR

- Spatial computing

- Ultraleap

References

- [1] Frontiers in Virtual Reality – "Hand Tracking for Immersive Virtual Reality: Opportunities and Challenges" (2021). URL: https://www.frontiersin.org/journals/virtual-reality/articles/10.3389/frvir.2021.728461/full

- [2] Ultraleap – "Hand Tracking Overview" (docs) + Gemini migration notes. URLs: https://docs.ultraleap.com/hand-tracking/ ; https://docs.ultraleap.com/hand-tracking/gemini-migration.html

- [3] PubMed Central – "The statistics of natural hand movements". URL: https://pmc.ncbi.nlm.nih.gov/articles/PMC2636901/

- [4] Khronos Group – "Khronos Releases OpenXR 1.1 to Further Streamline Cross-Platform XR Development" (Press release, Apr 2024). URL: https://www.khronos.org/news/press/khronos-releases-openxr-1.1-to-further-streamline-cross-platform-xr-development

- [5] W3C – "WebXR Hand Input Module – Level 1" (Spec). URL: https://www.w3.org/TR/webxr-hand-input-1/

- [6] EVL/UIC – "Sayre Glove (1977)" and Ohio State Pressbooks, "A Critical History of Computer Graphics and Animation: Interaction". URLs: https://www.evl.uic.edu/core.php?mod=4&type=4&indi=87 ; https://ohiostate.pressbooks.pub/graphicshistory/chapter/1-4-interaction/

- [7] SenseGlove – "The History of VR and VR Haptics" (2023-05-03). URL: https://www.senseglove.com/how-did-we-get-here-the-history-of-vr-and-vr-haptics/

- [8] Google Patents – "US Patent 4414537A: Data entry glove interface device". URL: https://patents.google.com/patent/US4414537A/en

- [9] History of Information – "Zimmerman & Lanier Develop the DataGlove" (1987) and related sources on VPL. URL: https://www.historyofinformation.com/detail.php?id=3626

- [10] VirtualSpeech – "History of VR – Timeline of Events and Tech Development" (Oct 17, 2024). URL: https://virtualspeech.com/blog/history-of-vr

- [11] Medium – "The Evolution of Gloves as Input Devices" (2019-12-06). URL: https://medium.com/media-reflections-past-present-future/the-evolution-of-gloves-as-input-devices-84efe6ff72bb

- [12] Avatar Academy – "Developments in VR Hand Tracking" (2023). URL: https://avataracademy.io/developments-in-vr-hand-tracking/

- [13] CNET – "Xbox Kinect Launch" (2010) and Microsoft resources. URL: https://www.cnet.com/tech/gaming/xbox-kinect-launch/

- [14] VR & AR Wiki – "Leap Motion VR" (accessed 2025). URL: https://vrarwiki.com/wiki/Leap_Motion

- [15] Wikipedia – "Leap Motion" (2023-05-30). URL: https://en.wikipedia.org/wiki/Leap_Motion

- [16] TechNewsWorld – "Leap Motion Unleashes Orion" (2016-02-18). URL: https://www.technewsworld.com/story/leap-motion-unleashes-orion-83129.html

- [17] The Verge – "Hand-tracking startup Leap Motion reportedly acquired by UltraHaptics" (2019-05-30). URL: https://www.theverge.com/2019/5/30/18645604/leap-motion-vr-hand-tracking-ultrahaptics-acquisition-rumor

- [18] Meta – "Introducing Hand Tracking on Oculus Quest-Bringing Your Real Hands into VR" (2019). URL: https://www.meta.com/blog/introducing-hand-tracking-on-oculus-quest-bringing-your-real-hands-into-vr/

- [19] SpectreXR Blog – "Brief History of Hand Tracking in Virtual Reality" (Sept 7, 2022). URL: https://spectrexr.io/blog/news/brief-history-of-hand-tracking-in-virtual-reality

- [20] Develop3D – "First Look at HoloLens 2" (Dec 20, 2019). URL: https://develop3d.com/reviews/first-look-hololens-2-microsoft-mixed-reality-visualisation-hmd/

- [21] Microsoft Learn – "Mixed Reality Design: Direct manipulation" (HoloLens 2 design guidance). URL: https://learn.microsoft.com/en-us/windows/mixed-reality/design/direct-manipulation

- [22] Meta Research – "MEgATrack: Monochrome Egocentric Articulated Hand-Tracking for Virtual Reality" (2020). URL: https://research.facebook.com/publications/megatrack-monochrome-egocentric-articulated-hand-tracking-for-virtual-reality/

- [23] Road to VR – "Ultraleap Releases 'Gemini' Hand-tracking Update with Improved Two-handed Interactions" (2021). URL: https://www.roadtovr.com/ultraleap-gemini-v5-hand-tracking-update-windows-release/

- [24] Meta for Developers – "Hand Tracking 2.1 improvements (OS v47)" (2022). URL: https://developers.meta.com/horizon/blog/hand-tracking-improvements-v2-1/

- [25] Meta for Developers – "All Hands on Deck: Hand Tracking 2.2 latency reductions (v56)" (2023). URL: https://developers.meta.com/horizon/blog/hand-tracking-22-response-time-meta-quest-developers/

- [26] SpectreXR Blog – "VR Hand Tracking: More Immersive and Accessible Than Ever" (April 3, 2023). URL: https://spectrexr.io/blog/news/vr-hand-tracking-more-immersive-and-accessible-than-ever

- [27] Apple Support – "Use your eyes, hands, and voice to interact with Apple Vision Pro" (visionOS gestures). URL: https://support.apple.com/guide/apple-vision-pro/

- [28] UploadVR – "Here's How You Control Apple Vision Pro With Eye Tracking And Hand Gestures" (June 9, 2023). URL: https://www.uploadvr.com/apple-vision-pro-gesture-controls/

- [29] MediaPipe – "Hands: On-device, Real-time Hand Tracking" (docs). URL: https://mediapipe.readthedocs.io/en/latest/solutions/hands.html

- [30] VRX by VR Expert – "What is virtual reality hand tracking?" (Updated Jan 30, 2023). URL: https://vrx.vr-expert.com/what-is-virtual-reality-hand-tracking/

- [31] Sound x Vision – "What is hand tracking?" (n.d.). URL: https://soundxvision.io/what-is-hand-tracking

- [32] MDPI Virtual Worlds – "Evaluation of HoloLens 2 for Hand Tracking and Kinematic Features Assessment" (2024). URL: https://www.mdpi.com/2813-2084/4/3/31

- [33] Varjo – "How Hand Tracking in VR Unlocks Enterprise Use Cases (guest post by Ultraleap)" (2021). URL: https://varjo.com/blog/how-hand-tracking-unlocks-enterprise-use-cases-guest-post-by-ultraleap

- [34] Varjo Support – "What is hand tracking?" (2025). URL: https://support.varjo.com/hc/en-us/what-is-hand-tracking

- [35] Road to VR – "'Hand Physics Lab' for Quest Releasing April 1st" (2021). URL: https://www.roadtovr.com/hand-physics-quest-2-release-date-trailer/

- [36] Behavior Research Methods / PubMed – "A methodological framework to assess the accuracy of virtual reality hand-tracking systems: A case study with the Meta Quest 2" (2023-2024). URL: https://pmc.ncbi.nlm.nih.gov/articles/PMC10830632/

- [37] UploadVR – "Zuckerberg: Neural Wristband To Ship In 'Next Few Years'" (2024). URL: https://www.uploadvr.com/zuckerberg-neural-wristband-will-ship-in-the-next-few-years/

- [38] Devpandas – "AR & VR Trends and Applications in 2024: What's Next?" URL: https://www.devpandas.co/blog/ar-vr-trends-2024