Focal surface display


Figure 1. Focal surface display prototype. (Image: Matsuda et al., 2017)

Figure 2. In a focal surface display, a spatial light modulator is placed between the screen and eyepiece of a VR headset. (Image: roadtovr.com)

Latest revision as of 20:13, 2 July 2025

Focal surface display is a technology developed by Oculus Research that improves the focus of images generated by a virtual reality (VR) head-mounted display (HMD) by simulating the way the eyes naturally focus on real objects at varying depths (Figure 1). [1]

While modern VR experiences are superior to what they were just a few years ago, the Oculus focal surface display addresses a perceptual limitation of current HMDs: the inability to display scene content at correct focal depths. These HMDs have a fixed-focus accommodation determined by the headset’s eyepiece focal length. Although they give the illusion of depth from the stereo images, the images are essentially flat, at a fixed perceived distance from the face and with a focus selected by the software instead of the eyes. Scene content whose virtual distance from the viewer differs from the fixed focal distance of the headset’s screen leads to a vergence-accommodation conflict, which arises when binocular disparity cues (vergence) conflict with focus cues (accommodation). The vergence-accommodation conflict prevents VR scene content from appearing sharply in focus and may contribute to user fatigue and discomfort. [2] [3] [4]
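The size of this conflict is commonly expressed in diopters (the reciprocal of distance in meters). The following is a minimal sketch, not taken from the cited sources: the function names, the 63 mm interpupillary distance, and the 2 m headset focal distance are all assumptions chosen for illustration.

```python
import math


def vergence_angle_deg(distance_m, ipd_m=0.063):
    """Angle between the two lines of sight when fixating a point straight ahead.

    ipd_m is an assumed interpupillary distance, not a value from the article.
    """
    return math.degrees(2.0 * math.atan((ipd_m / 2.0) / distance_m))


def va_conflict_diopters(virtual_distance_m, focal_distance_m):
    """Mismatch between where the eyes converge and where they must focus.

    virtual_distance_m: distance at which stereo disparity places the object.
    focal_distance_m:   fixed focal distance of the headset optics (assumed).
    """
    return abs(1.0 / virtual_distance_m - 1.0 / focal_distance_m)


# Hypothetical headset focused at 2 m, showing an object rendered at 0.5 m.
conflict = va_conflict_diopters(0.5, 2.0)
print(f"conflict: {conflict:.2f} D")
print(f"vergence angle at 0.5 m: {vergence_angle_deg(0.5):.2f} deg")
```

In this toy case the eyes converge as if the object were 0.5 m away while the optics demand focus at 2 m, a mismatch of 1.5 diopters. Content rendered at the headset's own focal distance produces no mismatch.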

According to Oculus Research, the focal surface display takes a new approach to avoiding the vergence-accommodation conflict: it changes the way light enters the display, using spatial light modulators (Figure 2) to bend the HMD’s focus around 3D objects. This increases depth and maximizes the amount of space represented. [1]

The vergence-accommodation conflict has motivated many proposals for VR technology that delivers near-correct accommodation cues. Focal surface display technology could help future VR headsets by improving image sharpness and depth of focus, resulting in an experience closer to how the eyes normally function, thereby reducing discomfort while improving the user’s immersion in virtual reality. [1] [5] [6]

The development of the Oculus focal surface display was an interdisciplinary task, “combining leading hardware engineering, scientific and medical imaging, computer vision research, and state-of-the-art algorithms to focus on next-generation VR.” This technology could even allow people who wear corrective lenses to use a VR HMD without glasses. [1]

There had been previous attempts to solve the vergence-accommodation conflict, such as using integral imaging techniques to synthesize light fields from scene content or displaying multiple focal planes, but these suffered from problems such as low-fidelity accommodation cues, low resolution, and a narrow field of view. The focal surface display is expected to generate high-fidelity accommodation cues using off-the-shelf optical components. The spatial light modulator, placed between the display screen and eyepiece, produces variable focus across the display’s field of view. [2]

As of 2017, there was no known planned commercial release for focal surface display technology. [6]

Development and announcement of the focal surface display

The Oculus focal surface display project was a long time in development. According to a research scientist at Oculus Research, “manipulating focus isn’t quite the same as modulating intensity or other more usual tasks in computational displays, and it took us a while to get to the correct mathematical formulation that finally brought everything together. Our overall motivation was to do things the "right" way: solid engineering combined with the math and algorithms to back it up. We weren’t going to be happy with something that only worked on paper or a hacked together prototype that didn’t have any rigorous explanation of why it worked.” [1]

In May 2017, Oculus Research, the VR and AR R&D division of Oculus, announced the new display technology. During the same period, they published a research paper about their focal surface display, authored by Oculus scientists Nathan Matsuda, Alexander Fix, and Douglas Lanman. The research was also presented at the SIGGRAPH conference in July 2017. [7]

Focal surface display technology

In current VR HMDs, the viewing optics (a magnifying lens) are placed between the user’s eyes and a display screen. For binocular stereo, this configuration is mirrored, with one set of optics and one display, or a portion of one, dedicated to each eye. Matsuda et al. (2017) write that “a binocular HMD depicts stereoscopic imagery such that the user perceives virtual objects with correct retinal disparity, which is the critical stimulus to vergence (the degree to which the eyes are converged or diverged to fixate a point).” [5]

Conventional VR HMDs therefore have two main optical components, the eyepiece and the display, which deliver a single, fixed focal surface. The Oculus focal surface technology introduces a third optical element: a phase-modifying spatial light modulator placed between the other two components. The spatial light modulator functions as a programmable lens whose focal length varies across the field of view, allowing the virtual image to be constructed at different depths. This technology expands on the concept of an adaptive multifocal display. [5]
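To see why a programmable lens between the display and the eyepiece shifts the depth of the virtual image, consider a simplified paraxial model. The sketch below is an illustration under stated assumptions, not the optical design from Matsuda et al.: it treats the spatial light modulator and the eyepiece as ideal thin lenses, places the eye at the eyepiece, and uses hypothetical spacings (18 mm and 20 mm) and a hypothetical 25-diopter eyepiece.

```python
def accommodation_demand(slm_power_d,
                         eyepiece_power_d=25.0,
                         display_to_slm_m=0.018,
                         slm_to_eyepiece_m=0.020):
    """Focus demand at the eye, in diopters, for one display pixel.

    All default values are hypothetical. Vergence is in diopters,
    negative for diverging light.
    """
    # Light from a display pixel reaches the SLM as a diverging wavefront.
    vergence = -1.0 / display_to_slm_m
    # A thin lens adds its optical power to the incoming vergence.
    vergence += slm_power_d
    # Free-space propagation over a distance d: V' = V / (1 - d * V).
    vergence = vergence / (1.0 - slm_to_eyepiece_m * vergence)
    # The eyepiece adds its own power.
    vergence += eyepiece_power_d
    # Diverging light of vergence -D appears to come from 1/D meters away.
    return -vergence


# Sweep the local SLM power and report where the pixel appears to be.
for power in (-1.0, 0.0, 1.0):
    demand = accommodation_demand(power)
    print(f"SLM {power:+.1f} D -> demand {demand:.3f} D "
          f"(virtual image at {1.0 / demand:.2f} m)")
```

With zero SLM power the model reduces to a conventional headset with one fixed focal distance. Because the power can differ from one region of the modulator to another, different parts of the image can be placed at different depths, which is the behavior the paragraph above describes.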

Current VR HMD technology does not correctly represent retinal blur, a critical stimulus to accommodation. This leads to the vergence-accommodation conflict, which is responsible for visual discomfort, such as eye strain and blurred vision, and headaches. The vergence-accommodation conflict has also been associated with perceptual consequences, influencing eye movements and the ability to resolve depth. [5]

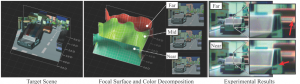

Different HMD architectures have been proposed to solve this problem and depict correct or near-correct retinal blur (Figure 3). Focal surface displays augment regular HMDs with a spatial light modulator that “acts as a dynamic freeform lens, shaping synthesized focal surfaces to conform to the virtual scene geometry.” Furthermore, Oculus Research has introduced “a framework to decompose target focal stacks and depth maps into one or more pairs of piecewise smooth focal surfaces and underlying display images,” building on “recent developments in "optimized blending" to implement a multifocal display that allows the accurate depiction of occluding, semi-transparent, and reflective objects.” [5]

In contrast to multifocal displays with fixed focal surfaces, the phase modulator shapes focal surfaces to conform to the scene geometry. A set of color images is produced, each mapped onto a corresponding focal surface (Figure 4), with visual appearance being rendered by “tracing rays from the eye through the optics, and accumulating the color values for each focal surface.” Furthermore, Matsuda et al. (2017) explain that their “algorithm sequentially solves for first the focal surfaces, given the target depth map, and then the color images, full joint optimization is left for future work. Focal surfaces are adapted by nonlinear least squares optimization, minimizing the distance between the nearest depicted surface and the scene geometry. The color images, paired with each surface, are determined by linear least squares methods.” [5]
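The first stage quoted above, fitting focal surfaces so that the nearest one lies close to the scene geometry, can be illustrated with a toy sketch. This is not the authors' solver. The paper describes nonlinear least squares optimization, while the code below swaps in a simple alternating scheme: assign each pixel to its nearest surface, then refit each surface by closed-form linear regression. The function names, the straight-line surface model, and the one-dimensional depth scanline (in diopters) are all assumptions made for illustration, and the second stage (solving for the color images) is not shown.

```python
def fit_line(xs, ys):
    """Closed-form least-squares fit of y = a + b * x; returns (a, b)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0.0:
        return mean_y, 0.0  # a single column of pixels: fall back to a constant
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return mean_y - slope * mean_x, slope


def nearest_surface_error(xs, depths, surfaces):
    """Sum over pixels of the squared distance to the nearest surface."""
    return sum(min((a + b * x - d) ** 2 for a, b in surfaces)
               for x, d in zip(xs, depths))


def fit_focal_surfaces(xs, depths, surfaces, iterations=20):
    """Alternate nearest-surface assignment and per-surface line refits."""
    surfaces = list(surfaces)
    for _ in range(iterations):
        groups = [([], []) for _ in surfaces]
        for x, d in zip(xs, depths):
            nearest = min(range(len(surfaces)),
                          key=lambda i: (surfaces[i][0] + surfaces[i][1] * x - d) ** 2)
            groups[nearest][0].append(x)
            groups[nearest][1].append(d)
        # Surfaces that attracted no pixels are left unchanged.
        surfaces = [fit_line(gx, gy) if gx else s
                    for (gx, gy), s in zip(groups, surfaces)]
    return surfaces


# Hypothetical scanline: a flat foreground at 2 D, then a slanted background.
xs = list(range(20))
depths = [2.0] * 10 + [0.5 + 0.5 * (x - 10) / 9 for x in range(10, 20)]
fixed_planes = [(0.0, 0.0), (3.0, 0.0)]  # two fixed focal planes as (a, b)
fitted = fit_focal_surfaces(xs, depths, fixed_planes)
print("fixed planes error:", nearest_surface_error(xs, depths, fixed_planes))
print("fitted surfaces error:", nearest_surface_error(xs, depths, fitted))
```

In this toy scene two fixed planes cannot follow the slanted background, whereas two adapted surfaces can, which mirrors the contrast drawn in the text between fixed multifocal planes and scene-conforming focal surfaces.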

The focal surface display research team demonstrated that the technology depicts more accurate retinal blur with fewer multiplexed images, while maintaining high resolution throughout the user’s accommodative range. [5]

Future work for focal surface displays

The Oculus focal surface display is not yet a perfect solution to the vergence-accommodation conflict. It is instead an intermediate stage between current VR display technology and a future one with the ideal properties needed to completely solve the problems with vergence and accommodation. [7]

A consumer-ready product is still a long way out. Nevertheless, research into this technology will benefit the VR and AR (augmented reality) industry by providing a new direction for future research. The focal surface display also requires eye tracking, a technique that is itself not a completely solved problem, and is difficult to implement with a wide field of view. Focal surface displays could also be applied to AR devices. [5] [7]

While the technology still needs to mature, the desire to move beyond fixed-focus headsets is expected to produce results that will eventually translate into VR products for consumers. [5]

References

- [1] Oculus VR (2017). Oculus Research to present focal surface display discovery at SIGGRAPH. Retrieved from https://www.oculus.com/blog/oculus-research-to-present-focal-surface-display-discovery-at-siggraph/

- [2] Comp Photo Lab. Focal surface displays. Retrieved from http://compphotolab.northwestern.edu/project/focal-surface-displays/

- [3] Miller, P. (2017). Oculus Research's focal surface display could make VR much more comfortable for our eyeballs. Retrieved from https://www.theverge.com/circuitbreaker/2017/5/19/15667172/oculus-research-focal-surface-display-vr-comfort-eye-tracking

- [4] Coppock, M. (2017). Oculus developing ‘focal surface display’ for better VR image clarity. Retrieved from https://www.digitaltrends.com/computing/oculus-working-on-focal-surface-display-technology-for-improved-visual-clarity

- [5] Matsuda, N., Fix, A. and Lanman, D. (2017). Focal surface displays. ACM Transactions on Graphics, 36(4)

- [6] Halfacree, G. (2017). Oculus VR outs focal surface display technology. Retrieved from https://www.bit-tech.net/news/tech/peripherals/oculus-vr-focal-surface-display/1/

- [7] Lang, B. (2017). Oculus Research reveals “groundbreaking” focal surface display. Retrieved from https://www.roadtovr.com/oculus-research-demonstrate-groundbreaking-focal-surface-display/