Spatial mapping

Revision as of 22:38, 27 October 2025

Spatial mapping, also known as 3D reconstruction in some contexts, is a core technology that enables a device to create a three-dimensional (3D) digital model of its physical environment in real-time.[1][2] It is a fundamental component of augmented reality (AR), virtual reality (VR), mixed reality (MR), and robotics, allowing systems to perceive, understand, and interact with the physical world.[3][4] By creating a detailed digital map of surfaces, objects, and their spatial relationships, spatial mapping serves as the technological bridge between the digital and physical realms, allowing for the realistic blending of virtual and real worlds.[3]

The process is dynamic and continuous; a device equipped for spatial mapping constantly scans its surroundings with a suite of sensors, building and refining its 3D map over time by incorporating new depth and positional data as it moves through an environment.[1][5] This capability is foundational to the field of extended reality (XR), enabling applications to place digital content accurately, facilitate realistic physical interactions like occlusion and collision, and provide environmental context for immersive experiences.[2][4]

Overview

Definition and Core Function

Spatial mapping is the ability of a system to create a 3D map of its environment, allowing it to understand and interact with the real world.[3] The core function is to capture the geometry and appearance of a physical space and translate it into a machine-readable digital format, typically a mesh or a point cloud.[1] This digital representation allows a device to know the shape, size, and location of floors, walls, furniture, and other objects.[2]

This process is not a one-time scan but a continuous loop of sensing, processing, and refining. As a device like an AR headset moves, it gathers new data, which is integrated with the existing map to improve its accuracy and expand its boundaries.[1][6] This enables applications to achieve a persistent and context-aware understanding of the user's surroundings, which is essential for creating believable and useful mixed reality experiences.[3] Using sensors such as cameras, infrared projectors, or LiDAR scanners, AR devices capture the geometry of real-world surroundings and generate an accurate 3D representation, often as a cloud of points or a triangle mesh.[7]

Role in Extended Reality (XR)

Spatial mapping is a cornerstone technology for all forms of extended reality (XR), a term that encompasses virtual reality (VR), augmented reality (AR), and mixed reality (MR).[8][9] Its role varies depending on the level of immersion:

- In Augmented Reality (AR), spatial mapping allows digital content to be accurately overlaid onto and anchored within the physical world. This enables key features like placing a virtual sofa on a real living room floor or having a digital character realistically appear from behind a physical wall (occlusion).[2][4] Because spatial mapping lets applications find horizontal and vertical surfaces such as floors, tables, and walls and anchor content to them, virtual objects can rest on real-world surfaces in a natural, stable way.[10]

- In Virtual Reality (VR), spatial mapping is used to create detailed 3D models of real-world environments for applications like virtual tours or training simulations.[2] It is also critical for room-scale VR, where it maps the user's physical space to define a safe play area, known as a guardian system, preventing collisions with real-world obstacles.[11]

- In Mixed Reality (MR), spatial mapping is the defining component that allows digital and physical objects to coexist and interact in a context-aware manner. For example, a virtual ball can bounce realistically off a real-world wall, or a digital character can sit on a physical chair. This deep level of interaction is what distinguishes MR from AR.[8][12]
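Finding horizontal or vertical surfaces for AR placement is often done by fitting planes to patches of the spatial map. The sketch below is a minimal illustration, assuming a y-up world frame (the convention ARKit and Unity use) and a patch of points already segmented from the map. `fit_plane` and `classify_plane` are hypothetical helpers, not part of any platform API, and production systems typically add an outlier-rejection step such as RANSAC.

```python
import numpy as np

def fit_plane(points):
    """Least-squares plane through an (N, 3) array of points.

    Returns (centroid, unit_normal). The normal is the right singular vector
    of the centered points with the smallest singular value, i.e. the
    direction in which the points vary least.
    """
    centroid = points.mean(axis=0)
    _, _, vt = np.linalg.svd(points - centroid, full_matrices=False)
    normal = vt[-1]
    return centroid, normal / np.linalg.norm(normal)

def classify_plane(normal, up=np.array([0.0, 1.0, 0.0]), tol_deg=10.0):
    """Label a plane 'horizontal', 'vertical', or 'other' from its normal."""
    cos_tilt = min(1.0, abs(float(np.dot(normal, up))))
    tilt_deg = np.degrees(np.arccos(cos_tilt))   # angle between normal and up
    if tilt_deg <= tol_deg:
        return "horizontal"   # normal points up or down: floor, table, ceiling
    if abs(tilt_deg - 90.0) <= tol_deg:
        return "vertical"     # normal is level: wall, door, cabinet front
    return "other"

# Noisy samples of a table top 0.75 m above the floor (y-up assumed).
rng = np.random.default_rng(0)
xz = rng.uniform(-0.5, 0.5, size=(200, 2))
table = np.column_stack([xz[:, 0], 0.75 + rng.normal(0.0, 0.003, 200), xz[:, 1]])
center, n = fit_plane(table)
print(classify_plane(n), round(float(center[1]), 2))
```

The tolerance `tol_deg` is an arbitrary illustrative value; it controls how far from level a surface may tilt before it stops counting as a floor or table.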

Relationship to 3D Reconstruction and Spatial Computing

While the terms are related and sometimes used interchangeably, they have distinct nuances.

- 3D reconstruction: This term often refers more broadly to the process of creating a detailed and accurate static 3D model of an object or scene from multiple images or scans. While spatial mapping is a form of 3D reconstruction, its use in the XR context emphasizes the real-time and continuous nature of the process for immediate environmental interaction, rather than offline model creation.[4]

- Spatial computing: Spatial mapping is a core component of the broader concept of spatial computing.[13][8] Spatial computing describes the entire technological framework that allows humans and machines to interact with digital information that is seamlessly integrated into a three-dimensional space.[9][14] In this framework, spatial mapping provides the essential environmental "understanding"—the digital ground truth—that the system requires to function.[13]

The evolution of terminology from "3D reconstruction" to "spatial mapping" and its inclusion under the umbrella of "spatial computing" reflects a significant philosophical shift in the industry. It signifies a move away from the goal of simply capturing a digital copy of the world (reconstruction) and toward the more ambitious goal of understanding and interacting with it in real-time (mapping and computing). This distinction is what separates a passive 3D scan from an active, intelligent mixed reality experience where the digital and physical worlds are deeply intertwined.[8][9]

History

The roots of spatial mapping trace back to the development of SLAM in the 1980s, when researchers began addressing the "chicken-and-egg" problem of navigating an unknown environment while simultaneously mapping it.[15] Probabilistic SLAM originated at the 1986 IEEE Robotics & Automation Conference in San Francisco, and pioneers including Peter Cheeseman, Jim Crowley, Hugh Durrant-Whyte, Raja Chatila, and Olivier Faugeras went on to develop the statistical basis for describing spatial relationships.[16] Foundational work by R.C. Smith and Peter Cheeseman in 1986 introduced probabilistic approaches to estimating spatial uncertainty, laying the groundwork for robust mapping algorithms.[15]

From 1988 to 1994, researchers established the statistical basis for spatial relationships, with early implementations focusing on visual navigation and sonar-based systems. The acronym "SLAM" was formally coined in 1995 by Hugh Durrant-Whyte and colleagues in the paper "Localization of Autonomous Guided Vehicles", presented at ISRR'95, a pivotal moment in robotics that would later influence AR/VR.[15][16] During the 1990s, Durrant-Whyte's group at the University of Sydney showed that SLAM is theoretically solvable in the limit of infinite data, spurring practical algorithm development.[15] The field gained mainstream adoption between 1999 and 2002: attendance at SLAM workshops grew from 15 researchers in 2000 to 150 in 2002, culminating in a SLAM summer school at KTH Stockholm.[17]

FastSLAM represented a paradigm shift in 2002, when Montemerlo and colleagues introduced particle filtering to solve the SLAM problem with linear complexity, compared with the quadratic complexity of earlier Extended Kalman Filter approaches, making real-time SLAM feasible on computationally constrained mobile platforms.[17] By the mid-2000s, SLAM had gained prominence through Sebastian Thrun's autonomous vehicles Stanley and Junior, which won the 2005 DARPA Grand Challenge and placed second in the 2007 DARPA Urban Challenge respectively, highlighting its potential for real-world navigation.[15]

Consumer Device Era

In the context of AR and VR, spatial mapping emerged prominently with the advent of consumer-grade MR devices. Microsoft's HoloLens, released in March 2016, introduced spatial mapping as a core feature, scanning and modeling environments in real time. It used four environment understanding cameras for head tracking and one Time-of-Flight depth camera with a range of 0.85 to 3.1 meters.[10][6] The system stored spatial data persistently across applications and device restarts, and updated its mesh continuously as the device observed its surroundings.[5]

Earlier, Microsoft's Kinect, launched in November 2010, had become the first mainstream consumer depth sensor, bringing structured-light depth sensing to consumers for about $150 with 640×480 depth resolution at 30 fps. By democratizing 3D sensing, Kinect enabled KinectFusion, which pioneered real-time fusion of depth frames into a Truncated Signed Distance Function (TSDF) for dense 3D reconstruction. Although Kinect was discontinued in October 2017, its technology evolved into Azure Kinect (2019) and the depth sensor integrated into HoloLens 2.

Google's Project Tango launched in Q1 2014 with the "Peanut" phone, the first mobile SLAM device, which required specialized hardware including fisheye cameras and depth sensors. The Lenovo Phab 2 Pro, released in November 2016, became the first commercial smartphone with Tango capabilities. However, the need for specialized sensors limited adoption, and in December 2017 Google announced the end of Tango, replacing it with ARCore, which works on standard smartphones.

Mobile AR frameworks followed. Apple's ARKit, introduced in June 2017, brought visual-inertial odometry (VIO) to iOS devices; by solving monocular VIO without requiring depth sensors, it revolutionized mobile AR and instantly enabled 380 million devices.[18] Google's ARCore (2017) brought comparable SLAM-based tracking to Android, and its later Depth API generates depth maps on standard hardware using depth-from-motion algorithms that compare images taken from different angles, combined with IMU measurements.[18] Meta's Oculus Quest (2019) incorporated inside-out tracking with SLAM for standalone VR/AR, eliminating external sensors.[19]

LiDAR reached consumer devices with the iPad Pro in March 2020 and the iPhone 12 Pro in October 2020, both using Vertical Cavity Surface Emitting Laser (VCSEL) technology with direct Time-of-Flight measurement. This hardware enabled the Scene Geometry API in ARKit 3.5, which provides an instant triangle mesh of the surroundings with faces classified into semantic categories.[20] Other refinements include Microsoft's Scene Understanding SDK for HoloLens 2, which builds on spatial mapping for semantic environmental analysis.[10] Together, consumer LiDAR and AI-driven feature detection have further democratized high-fidelity mapping.[18]

Microsoft launched HoloLens 2 in 2019 with depth sensing derived from Azure Kinect, and Meta Quest 3 arrived in 2023 with full-color passthrough, depth sensing via an IR patterned-light projector, and a Scene API with semantic labeling. Apple Vision Pro, launched in 2024, is among the most advanced spatial computing devices currently available, with sophisticated eye and hand tracking. Today, spatial mapping is integral to spatial computing, with ongoing research in collaborative SLAM for multi-user experiences.[15]

Core Principles and Technology

The process of spatial mapping involves a continuous pipeline that transforms raw sensor data into a structured, usable digital model of the environment. This process is configurable through several key parameters that balance detail with performance.

The Mapping Process: From Sensors to Digital Model

The creation of a spatial map follows a multi-stage process that runs in real-time on an XR device:[21]

- Data Acquisition: A suite of on-device sensors, including depth cameras, RGB cameras, and inertial measurement units (IMUs), captures raw data about the environment's geometry, appearance, and the device's motion.[2]

- Point Cloud Generation: The raw depth data is processed to generate a point cloud, which is a collection of 3D data points in space that represents the basic structure of the environment.[2]

- Surface Reconstruction: Sophisticated algorithms process the point cloud to create a continuous surface model. This step fills in gaps, reduces noise, and organizes the discrete points into a coherent geometric structure, most commonly a mesh.[1][2]

- Texturing and Refinement: Color and texture information from the RGB cameras is mapped onto the geometric model to create a more photorealistic representation. Simultaneously, the device's position is tracked, and the map is continuously updated and refined with new sensor data as the user moves.[1][5]
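The point cloud generation step can be illustrated with the standard pinhole camera model, which back-projects each depth pixel into a 3D point in the camera's coordinate frame. This is a minimal sketch, assuming a depth image in meters and known intrinsics (focal lengths `fx`, `fy` and principal point `cx`, `cy`, all in pixels); `depth_to_point_cloud` is a hypothetical name, and real devices also correct lens distortion and transform each frame into a shared world frame before fusing.

```python
import numpy as np

def depth_to_point_cloud(depth, fx, fy, cx, cy):
    """Back-project an (H, W) depth image in meters into an (N, 3) point cloud.

    Pinhole model in the camera frame (x right, y down, z forward):
        X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy,  Z = depth[v, u]
    Pixels with zero or non-finite depth (no sensor return) are dropped.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))   # column and row index per pixel
    valid = np.isfinite(depth) & (depth > 0)
    z = depth[valid]
    x = (u[valid] - cx) * z / fx
    y = (v[valid] - cy) * z / fy
    return np.column_stack([x, y, z])

# A flat wall 2 m in front of a hypothetical 640x480 depth camera.
depth = np.full((480, 640), 2.0)
cloud = depth_to_point_cloud(depth, fx=500.0, fy=500.0, cx=319.5, cy=239.5)
print(cloud.shape)   # (307200, 3)
```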

As the user moves, the system extracts features (e.g. visual landmarks) from sensor data and incrementally constructs a 3D map consisting of point clouds or meshes of the surroundings. Many modern AR/VR devices fuse inputs from multiple sensors (visible-light cameras, depth sensors, and inertial measurement units) to improve mapping accuracy and tracking stability.[22]

Data Representation

The digital model of the environment created through spatial mapping is typically stored and utilized in one of two primary formats.

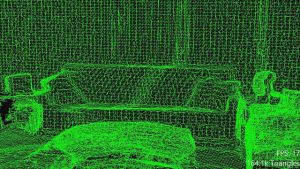

Mesh

A mesh represents the geometry of the scene as a continuous surface.[1] It is composed of a set of interconnected, watertight triangles defined by vertices (points in 3D space) and faces (the triangles connecting the vertices).[1][11] This representation is highly efficient for computer graphics and is ideal for rendering visualizations of the environment. It is also essential for physics simulations, as the mesh surfaces can be used for collision detection, allowing virtual objects to interact realistically with the mapped world.[23][24]
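The vertex-and-face structure can be made concrete with a small example. The sketch below stores a mesh as a vertex array plus an array of triangle indices, checks the watertight property by confirming that every edge is shared by exactly two triangles, and computes the per-face normals that collision handling typically relies on. The tetrahedron and the helper names are illustrative assumptions.

```python
import numpy as np
from collections import Counter

# A closed tetrahedron: 4 vertices and 4 triangular faces (indices into vertices).
# Each face is wound counter-clockwise when seen from outside.
vertices = np.array([[0.0, 0.0, 0.0],
                     [1.0, 0.0, 0.0],
                     [0.0, 1.0, 0.0],
                     [0.0, 0.0, 1.0]])
faces = np.array([[0, 2, 1],
                  [0, 1, 3],
                  [0, 3, 2],
                  [1, 2, 3]])

def is_watertight(faces):
    """True if every undirected edge is shared by exactly two faces."""
    edge_counts = Counter()
    for a, b, c in faces:
        for i, j in ((a, b), (b, c), (c, a)):
            edge_counts[(min(i, j), max(i, j))] += 1
    return all(count == 2 for count in edge_counts.values())

def face_normals(vertices, faces):
    """Unit normal of each triangle, following its winding order (right-hand rule)."""
    v0, v1, v2 = (vertices[faces[:, k]] for k in range(3))
    n = np.cross(v1 - v0, v2 - v0)
    return n / np.linalg.norm(n, axis=1, keepdims=True)

print(is_watertight(faces))       # True
print(is_watertight(faces[:3]))   # False: removing one face leaves a hole
```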

Meshes can be further processed through filtering to reduce polygon count for better performance and can be textured for enhanced realism.[1] The resulting spatial map data (often a dense mesh of the space) is continually updated as the device observes more of the environment or detects changes in it. Spatial mapping typically runs in the background in real-time on the device, so that the virtual content can be rendered as if anchored to fixed locations in the physical world.[10]
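Polygon reduction can take many forms; one of the simplest is vertex clustering, sketched below under the assumption of a uniform grid of cubic cells. It is not necessarily the filter any particular SDK applies (quadric edge-collapse decimation is a common higher-quality alternative), and `decimate_by_clustering` is a hypothetical name.

```python
import numpy as np

def decimate_by_clustering(vertices, faces, cell_size):
    """Simplify a triangle mesh by vertex clustering.

    All vertices that fall in the same cubic cell of edge `cell_size` are
    merged into one vertex at their mean position. Triangles whose corners
    collapse into fewer than three distinct clusters are discarded, as are
    duplicate triangles created by the merge.
    """
    keys = np.floor(vertices / cell_size).astype(np.int64)
    _, cluster_of, counts = np.unique(keys, axis=0, return_inverse=True,
                                      return_counts=True)
    cluster_of = cluster_of.reshape(-1)      # one cluster id per original vertex
    new_vertices = np.zeros((counts.size, 3))
    np.add.at(new_vertices, cluster_of, vertices)
    new_vertices /= counts[:, None]          # mean position of each cluster

    new_faces = cluster_of[faces]            # re-index triangles to clusters
    a, b, c = new_faces[:, 0], new_faces[:, 1], new_faces[:, 2]
    new_faces = new_faces[(a != b) & (b != c) & (a != c)]
    if len(new_faces) == 0:
        return new_vertices, new_faces
    # Keep the first copy of each triangle, ignoring winding, in original order.
    _, first = np.unique(np.sort(new_faces, axis=1), axis=0, return_index=True)
    return new_vertices, new_faces[np.sort(first)]
```

A larger `cell_size` removes more triangles and more fine detail, which mirrors the trade-off between rendering performance and geometric fidelity described above.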

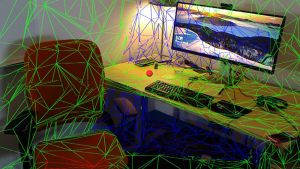

Fused Point Cloud

A point cloud represents the environment's geometry as a set of discrete 3D points, each with a position and often a color attribute.[1][2] A "fused" point cloud is one that has been aggregated and refined over time from multiple sensor readings and camera perspectives. This fusion process creates a denser and more accurate representation than a single snapshot could provide.[1] While point clouds are often an intermediate step before mesh generation, they can also be used directly by certain algorithms, particularly for localization, where the system matches current sensor readings against the stored point cloud to determine its position.[12]
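Fusion over time can be approximated by accumulating points from successive frames into a sparse voxel grid and averaging everything that lands in the same voxel. The sketch below assumes the clouds are already expressed in a common world frame; the `VoxelFusion` class is hypothetical, and production systems often use weighted or TSDF-based fusion instead. The `min_count` filter shows how repeated observations can suppress one-off noise.

```python
import numpy as np

class VoxelFusion:
    """Incrementally fuse world-frame point clouds on a sparse voxel grid.

    Each occupied voxel keeps a running sum of positions and colors plus an
    observation count, so repeated measurements average out sensor noise.
    """

    def __init__(self, voxel_size):
        self.voxel_size = voxel_size
        self.cells = {}  # voxel index (i, j, k) -> [xyz_sum, rgb_sum, count]

    def integrate(self, xyz, rgb):
        """Add one frame of (N, 3) positions and matching (N, 3) colors."""
        keys = np.floor(xyz / self.voxel_size).astype(np.int64)
        for key, p, c in zip(map(tuple, keys), xyz, rgb):
            cell = self.cells.get(key)
            if cell is None:
                self.cells[key] = [p.astype(float), c.astype(float), 1]
            else:
                cell[0] += p
                cell[1] += c
                cell[2] += 1

    def points(self, min_count=1):
        """Return fused (xyz, rgb) for voxels observed at least `min_count` times."""
        kept = [cell for cell in self.cells.values() if cell[2] >= min_count]
        xyz = np.array([s / n for s, _, n in kept]).reshape(-1, 3)
        rgb = np.array([c / n for _, c, n in kept]).reshape(-1, 3)
        return xyz, rgb
```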

Key Parameters and Configuration

The performance and quality of spatial mapping are governed by several parameters that developers can often adjust. These settings typically involve a direct trade-off between the level of detail captured and the computational resources (processing power, memory) required.[1]

| Parameter | Description | Typical Range/Values | Impact on Performance/Memory | Impact on Geometric Quality |
|---|---|---|---|---|
| Mapping Resolution | Controls the level of detail (granularity) of the spatial map, defined as the size of the smallest detectable feature. | 1 cm – 12 cm [1] | High (inverse relationship: a smaller value means higher resolution and higher resource usage) | High (a smaller value yields more detailed and accurate geometry) |
| Mapping Range | Controls the maximum distance from the sensor at which depth data is incorporated into the map. | 2 m – 20 m [1] | High (longer range means more data to process and higher resource usage) | Moderate (longer range maps large areas faster but may reduce accuracy at the farthest points) |
| Mesh Filtering | Post-processing that reduces polygon count (decimation) and cleans mesh artifacts (e.g., filling holes). | Presets (e.g., Low, Medium, High) [1] | Low cost (reducing the polygon count significantly improves rendering performance) | Moderate (aggressive filtering can remove fine geometric detail) |
| Mesh Texturing | Applies camera images to the mesh surface to create a photorealistic model. | On / Off [1] | High (requires storing and processing images, creating a texture map, and using more complex shaders for rendering) | High (dramatically increases visual realism) |
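For a volumetric map, the inverse relationship between mapping resolution and resource usage is cubic: halving the voxel size multiplies the voxel count by eight. The arithmetic below assumes a dense voxel grid over a hypothetical 5 m × 5 m × 3 m room; real systems usually store only voxels near observed surfaces, so these figures are upper bounds, but the scaling trend is the same.

```python
import math

def dense_voxel_count(extent_cm, resolution_cm):
    """Voxels in a dense grid covering a box of size extent_cm = (x, y, z).

    Integer ceiling division avoids floating-point rounding surprises.
    """
    return math.prod(-(-e // resolution_cm) for e in extent_cm)

room_cm = (500, 500, 300)  # hypothetical 5 m x 5 m x 3 m room
for res_cm in (12, 5, 2, 1):
    print(f"{res_cm:>2} cm voxels: {dense_voxel_count(room_cm, res_cm):>12,}")
```

This prints 44,100 voxels at 12 cm, 600,000 at 5 cm, 9,375,000 at 2 cm, and 75,000,000 at 1 cm. Going from 5 cm to 1 cm multiplies the count by 125 (5³); at a hypothetical 8 bytes per voxel, the 1 cm grid would need roughly 600 MB.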

Once a spatial map is constructed, applications can use it to align virtual objects with real surfaces and objects. The map consists of a set of surfaces (often represented as planar patches or a triangle mesh) defined in a world coordinate system. The device's tracking system uses this map to understand its own location (so virtual content remains locked in place) and may persist the map for use across sessions.[10]
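Keeping content "locked in place" amounts to storing it in world coordinates and re-expressing sensor data through the device's current pose every frame. The sketch below is a minimal illustration with hypothetical helper names: it builds a 4×4 rigid transform from a yaw angle and a translation (assuming a y-up frame) and maps device-frame points into the world frame.

```python
import numpy as np

def pose_matrix(yaw_rad, translation):
    """4x4 rigid transform: rotation by yaw_rad about the vertical y axis, then translation."""
    c, s = np.cos(yaw_rad), np.sin(yaw_rad)
    T = np.eye(4)
    T[:3, :3] = [[c, 0.0, s],
                 [0.0, 1.0, 0.0],
                 [-s, 0.0, c]]
    T[:3, 3] = translation
    return T

def to_world(points_device, T_world_device):
    """Map (N, 3) device-frame points into the world frame using homogeneous coordinates."""
    ones = np.ones((len(points_device), 1))
    homogeneous = np.hstack([points_device, ones])
    return (homogeneous @ T_world_device.T)[:, :3]

# Hypothetical pose: device 1 m along x and 2 m along z, turned 90 degrees.
T = pose_matrix(np.pi / 2, [1.0, 0.0, 2.0])
print(np.round(to_world(np.array([[0.0, 0.0, 1.0]]), T), 3))
```

Because an anchor's world coordinates stay fixed, only the device pose needs updating as tracking refines it; the inverse transform, `np.linalg.inv(T)`, maps world-anchored content back into the device frame for rendering.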

Underlying Hardware and Algorithms

Spatial mapping is made possible by a symbiotic relationship between advanced sensor hardware and sophisticated computational algorithms. The type and quality of the sensors dictate the data available, while the algorithms process this data to build and maintain the 3D map.

Essential Sensor Technologies

Modern XR devices rely on sensor fusion, the process of combining data from multiple sensors to achieve a result that is more accurate and robust than could be achieved by any single sensor alone.[25][26] The essential sensor suite includes:

Depth Cameras

These sensors are the primary source of geometric information, measuring the distance to objects and surfaces to form the basis of the 3D map.[2][11] Key types include:

- LiDAR: Emits pulses of laser light and measures the time it takes for the reflections to return, producing a highly accurate and dense point cloud. LiDAR works in a wide range of lighting conditions and is known for its precision, making it a key component of devices like the Apple Vision Pro and of autonomous vehicles.[4][27] Apple's LiDAR Scanner uses Vertical Cavity Surface Emitting Laser (VCSEL) technology with direct Time-of-Flight measurement. It emits an 8×8 array of points that is diffracted into 3×3 grids, totaling 576 points in a flash illumination pattern, and achieves an effective range of up to 5 meters with millimeter-level precision at optimal distances.[28]

- Time-of-Flight (ToF): Projects a modulated field of infrared light and measures the phase shift of the light as it reflects off surfaces to calculate distance. ToF sensors perform well at longer ranges and are used for environment mapping.[29][11] Microsoft HoloLens 2 integrates a 1MP Time-of-Flight depth sensor from Azure Kinect with four visible-light tracking cameras and two infrared cameras for eye tracking.[10]

- Structured Light: Projects a known pattern of infrared dots onto the environment. A camera captures how this pattern deforms over the surfaces of objects, and algorithms use this distortion to triangulate depth. Structured light systems, like the one in the original Kinect sensor, provide highly detailed depth profiles at close range, making them suitable for tasks like room scanning and facial recognition.[29][11]
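The two time-of-flight approaches above reduce to simple formulas. A pulsed (direct) sensor halves the round-trip travel time of light, while a continuous-wave (indirect) sensor converts the measured phase shift φ at modulation frequency f into distance d = cφ/(4πf), which is only unambiguous up to c/(2f). The helpers below are an illustrative sketch; the 20 MHz modulation frequency in the example is an assumption, not the specification of any particular device.

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

def direct_tof_distance(round_trip_s):
    """Pulsed (direct) ToF: light travels out and back, so halve the path."""
    return C * round_trip_s / 2.0

def phase_tof_distance(phase_rad, mod_freq_hz):
    """Continuous-wave (indirect) ToF: d = c * phi / (4 * pi * f)."""
    return C * phase_rad / (4.0 * math.pi * mod_freq_hz)

def unambiguous_range(mod_freq_hz):
    """Largest distance before the phase wraps past 2*pi and readings alias."""
    return C / (2.0 * mod_freq_hz)

# A surface 5 m away returns a pulse after about 33.4 nanoseconds.
print(f"{2 * 5.0 / C * 1e9:.1f} ns")
# Hypothetical 20 MHz modulation: readings repeat every ~7.5 m.
print(f"{unambiguous_range(20e6):.2f} m")
```

The nanosecond timescale explains why direct ToF needs very fast detectors, while the phase-wrapping limit is one reason indirect ToF sensors may combine several modulation frequencies.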

For example, the HoloLens uses a dedicated time-of-flight depth camera together with four environment tracking cameras and an IMU to robustly scan and mesh the room in real time. Some devices project infrared patterns onto the environment to aid depth perception (as in the Meta Quest 3), while others rely on stereo camera disparity or LiDAR to measure depth.[10]
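Structured-light and stereo systems both recover depth by triangulation. For a rectified pair with focal length f (in pixels) and baseline b, a feature shifted by a disparity of d pixels lies at depth Z = fb/d; for structured light, the projector plays the role of the second camera. Because one pixel of disparity corresponds to a depth step of roughly Z²/(fb), precision degrades quadratically with distance, consistent with these systems performing best at close range. The focal length and baseline below are hypothetical values.

```python
def depth_from_disparity(disparity_px, focal_px, baseline_m):
    """Triangulated depth Z = f * b / d for a rectified camera (or projector-camera) pair."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_m / disparity_px

def depth_step(z_m, focal_px, baseline_m, disparity_step_px=1.0):
    """Approximate depth change for a small disparity change: dZ ~ Z**2 * dd / (f * b)."""
    return z_m ** 2 * disparity_step_px / (focal_px * baseline_m)

focal_px, baseline_m = 600.0, 0.075   # hypothetical sensor geometry
for z in (0.5, 1.0, 3.0):
    step_cm = depth_step(z, focal_px, baseline_m) * 100
    print(f"Z = {z} m: one pixel of disparity ~ {step_cm:.1f} cm")
```

With these assumed values, one pixel of disparity corresponds to roughly 2 cm of depth at 1 m but about 20 cm at 3 m.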

RGB Cameras

Standard color cameras capture visual information such as color and texture. This data is crucial for texturing the 3D mesh to create a photorealistic model.[2] Additionally, the images from RGB cameras are used by computer vision algorithms to track visual features in the environment, which is the basis of Visual SLAM (vSLAM).[27]

Inertial Measurement Units (IMUs)

An IMU is a critical component for tracking the device's motion and orientation. It typically combines three sensors:[29][26]

- An accelerometer to measure linear acceleration.

- A gyroscope to measure angular velocity (rotation).

- A magnetometer to measure orientation relative to Earth's magnetic field (acting as a compass).

Together, these sensors provide the data needed for odometry (estimating motion over time) and establishing six degrees of freedom (6DoF) tracking, which allows a device to know its position and orientation in 3D space. This data is vital for the localization part of SLAM.[26] Modern systems process IMU data at 100-1000 Hz, providing high-frequency updates that compensate for visual tracking failures and handle motion blur and fast movements better than vision-only systems.[18]
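Odometry from an IMU alone drifts quickly, because position comes from integrating acceleration twice. The sketch below assumes a stationary device whose accelerometer reports a small constant bias (a hypothetical 0.01 m/s²) sampled at a hypothetical 200 Hz, and integrates it with semi-implicit Euler steps. The error grows quadratically, approaching ½·b·t² ≈ 0.5 m after 10 s, which is why SLAM systems fuse IMU data with visual or depth measurements rather than relying on it alone.

```python
def dead_reckon_position(accels, dt):
    """Integrate 1-D acceleration twice (semi-implicit Euler), starting from rest."""
    velocity, position = 0.0, 0.0
    for a in accels:
        velocity += a * dt
        position += velocity * dt
    return position

rate_hz = 200        # hypothetical IMU sample rate (within the 100-1000 Hz range)
bias = 0.01          # hypothetical constant accelerometer bias, m/s^2
for seconds in (1, 5, 10):
    samples = [bias] * (rate_hz * seconds)   # the device is actually stationary
    drift = dead_reckon_position(samples, 1.0 / rate_hz)
    print(f"after {seconds:>2} s: {drift:.3f} m of drift")
```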

This co-evolution of hardware and software defines distinct eras of spatial mapping. The early robotics era relied on expensive LiDAR. The rise of smartphones, with their ubiquitous and cheap cameras and IMUs, spurred massive innovation in vSLAM algorithms, giving birth to mobile AR platforms like ARKit and ARCore. The current era of advanced XR headsets is defined by the fusion of multiple sensor types, enabling a level of robustness and accuracy previously unattainable in a consumer product.[27][30]

Foundational Algorithms

Simultaneous Localization and Mapping (SLAM)

Simultaneous localization and mapping (SLAM) is the core algorithm that makes spatial mapping possible. It addresses the fundamental computational challenge of constructing a map of an unknown environment while simultaneously tracking the device's own location within that map.[21][25][15] This is often described as a "chicken-and-egg" problem: to build an accurate map, you need to know your precise location at all times, but to know your precise location, you need an accurate map.

SLAM algorithms solve this recursively using probabilistic methods.[25] The system uses sensor data to identify distinct features or landmarks in the environment. It then estimates the device's motion (odometry) between sensor readings and uses these landmarks to correct its position estimate and refine the map. A critical function in SLAM is loop closure, which occurs when the system recognizes a previously mapped area. This recognition allows the algorithm to significantly reduce accumulated errors (drift) and ensure the overall map is globally consistent.[31][25]
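Loop closure can be illustrated with a toy one-dimensional pose graph of the kind used by graph-based SLAM. Odometry constraints link consecutive poses, a loop-closure constraint states that the final pose coincides with the first, and a linear least-squares solve spreads the accumulated drift across the trajectory. This is a simplified sketch: all constraints are weighted equally, poses have no rotation, and `optimize_pose_graph_1d` is a hypothetical helper; real systems solve nonlinear, covariance-weighted problems in 2D or 3D.

```python
import numpy as np

def optimize_pose_graph_1d(n_poses, odometry, loop_closures):
    """Linear least-squares solution of a 1-D pose graph.

    odometry: measured displacements u_i from pose i to pose i + 1.
    loop_closures: (i, j, z) tuples meaning "pose j - pose i was measured as z".
    Pose 0 is anchored at the origin to fix the otherwise free global offset.
    All constraints are weighted equally.
    """
    rows, rhs = [], []

    def add_constraint(i, j, value):
        row = np.zeros(n_poses)
        row[j] += 1.0
        if i is not None:
            row[i] -= 1.0
        rows.append(row)
        rhs.append(value)

    add_constraint(None, 0, 0.0)                 # prior: pose 0 = 0
    for i, u in enumerate(odometry):
        add_constraint(i, i + 1, u)              # odometry edges
    for i, j, z in loop_closures:
        add_constraint(i, j, z)                  # loop-closure edges
    poses, *_ = np.linalg.lstsq(np.array(rows), np.array(rhs), rcond=None)
    return poses

odometry = [1.1, 1.1, -0.9, -0.9]   # true steps are +1, +1, -1, -1 (back to start)
dead_reckoned = np.concatenate([[0.0], np.cumsum(odometry)])
optimized = optimize_pose_graph_1d(5, odometry, [(0, 4, 0.0)])
print("dead reckoning:", np.round(dead_reckoned, 3))
print("optimized:     ", np.round(optimized, 3))
```

Dead reckoning ends 0.4 m from the starting point; with equal weights, the optimizer distributes that error evenly over the five constraints, giving poses of about 0, 1.02, 2.04, 1.06, and 0.08 m.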

Depending on the primary sensors used, SLAM can be categorized into several types:

- Visual SLAM (vSLAM): Uses one or more cameras to track visual features.[27]

- LiDAR SLAM: Uses a LiDAR sensor to build a precise geometric map.[27]

- Multi-Sensor SLAM: Fuses data from various sources (e.g., cameras, IMU, LiDAR) for enhanced robustness and accuracy.[27]
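LiDAR and multi-sensor SLAM systems commonly align each new scan against the map with variants of the Iterative Closest Point (ICP) algorithm, which alternates between matching nearest points and solving for the best rigid transform. The sketch below shows only that second step, the closed-form least-squares (Kabsch) solution via singular value decomposition, under the simplifying assumption that point correspondences are already known; `rigid_align` is a hypothetical name.

```python
import numpy as np

def rigid_align(source, target):
    """Best-fit rotation R and translation t so that target ~= source @ R.T + t.

    source, target: (N, 3) arrays where row k of each is the same physical
    point (known correspondences). Least-squares solution via SVD (Kabsch).
    """
    src_mean = source.mean(axis=0)
    tgt_mean = target.mean(axis=0)
    H = (source - src_mean).T @ (target - tgt_mean)    # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    d = 1.0 if np.linalg.det(Vt.T @ U.T) > 0 else -1.0
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = tgt_mean - R @ src_mean
    return R, t

# Simulate a scan that is the map rotated 30 degrees about z and shifted.
rng = np.random.default_rng(1)
map_points = rng.uniform(-2.0, 2.0, size=(50, 3))
angle = np.radians(30.0)
R_true = np.array([[np.cos(angle), -np.sin(angle), 0.0],
                   [np.sin(angle),  np.cos(angle), 0.0],
                   [0.0,            0.0,           1.0]])
t_true = np.array([0.5, -0.2, 1.0])
scan = map_points @ R_true.T + t_true
R_est, t_est = rigid_align(map_points, scan)
```

In full ICP, the correspondences would come from a nearest-neighbor search (for example, a k-d tree such as `scipy.spatial.cKDTree`), and the two steps would repeat until the alignment stops improving.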

Spatial mapping is typically accomplished via SLAM algorithms, which build a map of the environment in real time while tracking the device's position within it.[32]

Historical Development of SLAM

The theoretical foundations of SLAM originated in the robotics and artificial intelligence research communities in the mid-1980s.[16][17] The development can be broadly divided into two major periods:

- The "Classical Age" (c. 1986–2004) was defined by the establishment of the core probabilistic frameworks for solving the SLAM problem. Key milestones from this era include Extended Kalman Filter (EKF) formulations and, later, particle filter-based methods such as FastSLAM.[16][17][33]

- The "Algorithmic-Analysis Age" (c. 2004–2015) shifted focus to analyzing the fundamental mathematical properties of SLAM, such as consistency and convergence. This period saw the development of highly efficient, sparse graph-based optimization techniques (like GraphSLAM) and the emergence of influential open-source libraries such as ORB-SLAM.[16][17] ORB-SLAM, released in 2015, is a versatile feature-based visual SLAM system that runs three parallel threads for tracking, local mapping, and loop closing. It runs in real time on a CPU without GPU acceleration, extracts 1000–2000 ORB features per frame across 8 scale levels, and achieves sub-centimeter accuracy indoors.[34]

This progress was fueled by rapid improvements in processing power and the availability of low-cost sensors, which moved SLAM from a theoretical problem to a practical technology for consumer devices.[27]

Modern SLAM research continues to advance, with a focus on improving robustness in highly dynamic environments, incorporating deep learning for semantic scene understanding, and enabling life-long mapping for persistent autonomous agents.[16][35]

Applications and Use Cases

Spatial mapping is a versatile technology with applications that span numerous industries, from consumer entertainment to critical enterprise operations. Because it gives software an understanding of the surrounding physical environment, it is useful at scales ranging from a single tabletop to an entire building facade.

Augmented Reality (AR) and Mixed Reality (MR)

This is the primary domain where spatial mapping enables the defining features of the experience:

- Object Placement and Interaction: Allows virtual objects to be placed on real surfaces, such as a virtual chessboard on a physical table or a digital painting on a wall.[4][12] Constraining holograms or other virtual items to lie on real surfaces makes interactions more intuitive—for example, a digital 3D model can sit on top of a physical desk without "floating" in mid-air. This helps maintain correct scale and position, and reduces user effort in positioning objects in 3D space.[10]

- Occlusion: Creates a sense of depth and realism by allowing real-world objects to block the view of virtual objects. For example, a virtual character can realistically walk behind a physical couch.[4][12] A mapped 3D mesh of the environment lets the renderer determine when parts of a virtual object should be hidden because a real object is in front of them from the user's viewpoint. Proper occlusion cues greatly increase realism, as virtual characters or objects can appear to move behind real walls or furniture and out of sight, or emerge from behind real obstacles.[10]

- Physics-based Interactions: Enables virtual objects to interact with the mapped environment according to the laws of physics, such as a virtual ball bouncing off a real floor and walls.[12][24] With a detailed spatial map, physics engines can treat real surfaces as colliders for virtual objects. This means virtual content can collide with, slide along, or bounce off actual walls, floors, and other structures mapped in the environment. For example, an AR game could allow a virtual ball to roll across your real floor and hit a real chair.[10]

- Environmental Understanding: Provides contextual information for applications like indoor navigation that overlays directions onto the user's view, or architectural visualization that shows a proposed building on an actual site.[4] In augmented reality guides or robotics, a spatial map allows pathfinding and navigation through the physical environment. An AR application could overlay directions that guide a user through a building, knowing where walls and doorways are.[10]

- Persistent Content: When combined with spatial anchoring, spatial maps allow digital content to be locked to specific real-world locations and remain there across sessions. By saving the spatial map (or a condensed form of it) and using stable feature points as anchors, an AR device can recall a previously mapped space and place the same virtual objects in the same real positions each time. This is used in applications that require persistence or multi-user shared AR experiences.[10]

Virtual Reality (VR)

Although VR takes place in a fully digital world, spatial mapping of the user's physical space is crucial for safety and immersion in modern systems.[2]

- It is used to define the guardian system or playspace boundary, which alerts users when they are approaching real-world obstacles like walls or furniture, preventing collisions.[11][12] In VR, spatial mapping of the user's room can establish boundaries for room-scale experiences and keep the user safe from real obstacles by knowing where physical walls and furniture are.[10]

- It can also be used to scan a real-world room to create a virtual replica, which can be used for training simulations, virtual tours, or social VR spaces.[2]
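At its core, the boundary warning described above is a geometric query: how close is the user to the edge of the mapped play area? The Python sketch below assumes the boundary is stored as a closed 2D polygon on the floor plane, which is a simplification since each platform uses its own representation. The 0.4 m warning distance and all function names are hypothetical.

```python
import math

Point = tuple[float, float]  # (x, z) on the floor plane, in meters

def distance_to_segment(p: Point, a: Point, b: Point) -> float:
    """Shortest distance from point p to the line segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:                      # degenerate edge: a and b coincide
        return math.dist(p, a)
    # Project p onto the segment and clamp to the segment's end points.
    t = ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq
    t = max(0.0, min(1.0, t))
    return math.dist(p, (a[0] + t * dx, a[1] + t * dy))

def distance_to_boundary(p: Point, boundary: list[Point]) -> float:
    """Distance from p to the nearest edge of a closed boundary polygon."""
    n = len(boundary)
    return min(distance_to_segment(p, boundary[i], boundary[(i + 1) % n]) for i in range(n))

def should_warn(p: Point, boundary: list[Point], threshold_m: float = 0.4) -> bool:
    """True when p is closer than threshold_m (a hypothetical value) to the boundary."""
    return distance_to_boundary(p, boundary) < threshold_m

# A hypothetical 3 m x 2.5 m play area.
play_area = [(0.0, 0.0), (3.0, 0.0), (3.0, 2.5), (0.0, 2.5)]
print(should_warn((1.5, 1.25), play_area))   # False: 1.25 m from the nearest edge
print(should_warn((2.8, 1.25), play_area))   # True: 0.2 m from the east edge
```

This check does not tell inside from outside. A fuller version would add a point-in-polygon test and would also consider obstacles that lie within the boundary.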

Gaming and Entertainment

Spatial mapping turns a user's physical environment into an interactive game level, creating deeply personal and immersive experiences.[4]

- In MR games, virtual enemies can emerge from behind real furniture, puzzles can require interaction with physical objects, and the game world can be procedurally generated based on the layout of the user's room.[4][36]

- Projection mapping, also known as spatial augmented reality, uses a 3D map of a surface, such as a building facade or a stage set, to project precisely aligned video and graphics. This transforms static objects into dynamic, animated canvases for large-scale entertainment events, art installations, and theatrical performances.[37][38][39]

- Studies have shown that engaging with spatially demanding video games can improve players' spatial cognition, visuospatial reasoning, and attention.[40][41]

Industrial and Enterprise Sectors

Spatial mapping provides powerful tools for improving efficiency, safety, and decision-making in various industries.

- Design and Visualization: Architects, engineers, and designers can overlay full-scale 3D models of new products, machinery, or buildings onto physical spaces to visualize and assess designs in a real-world context before construction or manufacturing begins.[4][8]

- Training and Education: It enables the creation of highly realistic simulations for training on complex or dangerous tasks, such as medical procedures, equipment maintenance, or emergency response, without risk to personnel or equipment.[4][8] Case Western Reserve University reported that 85% of medical students rated MR anatomy training as equivalent to or better than in-person classes, while Ford Motor Company reported a 50-70% reduction in training time, from 6 months to several weeks, using VR and AR technologies.[42]

- Manufacturing and Assembly: Boeing implemented AR-guided assembly using Microsoft HoloLens 2 and Google Glass Enterprise across 15 global facilities for wire harness assembly, achieving 88% first-pass accuracy, a 33% increase in wiring speed, a 25% reduction in production time, and near-zero error rates, saving millions of dollars per jet through reduced rework.[43]

- Logistics and Supply Chain: Companies use spatial mapping to optimize warehouse layouts, plan efficient delivery routes by analyzing traffic and terrain, and track assets in real-time within a facility.[44][45]

- Construction: The technology is used for site analysis, planning, and risk management by mapping topography and geology. It also allows continuous comparison of the as-built structure against the digital BIM model for accuracy and quality control, with a reported 37% reduction in design conflicts and iterations.[44][46]

Medical and Healthcare

In healthcare, spatial mapping enhances precision, training, and patient care.

- Surgical Planning and Navigation: Surgeons can use 3D maps of a patient's anatomy, generated from medical imaging like CT or MRI scans, and overlay them directly onto the patient's body during a procedure. This "X-ray vision" provides real-time guidance that improves precision in complex operations like tumor resections or orthopedic implant placements, with a reported average spatial error of 1 ± 0.1 mm.[2][47][48]

- Pain Management and Therapy: VR experiences, which rely on a safely mapped physical space, are used to immerse patients in calming or distracting virtual environments. This has proven effective as a non-pharmacological tool for managing chronic pain and for conducting physical rehabilitation exercises.[47][48]

- Hospital Workflow Optimization: By creating a digital twin of a hospital or clinic, administrators can use spatial mapping to analyze patient and staff movement, identify bottlenecks, optimize room layouts, and improve overall operational efficiency.[47]

Retail and Commerce

Spatial mapping enables virtual try-before-you-buy experiences and spatial commerce:

- IKEA Place launched in 2017 with 98% dimensional accuracy and more than 2,000 products, becoming the #2 free ARKit app with millions of downloads. It evolved into IKEA Kreativ in 2022, which adds LiDAR-enabled room scanning to create 3D replicas of rooms, letting users virtually remove existing furniture and replace it with IKEA products.[49]

- Wayfair built View in Room 3D for 3D product visualization, Amazon integrated AR View, and Target, Lowe's, and Overstock deployed similar furniture placement features.[50]

Geospatial Mapping and World-Scale Tracking

The principles of spatial mapping extend to a planetary scale through geospatial mapping. Instead of headset sensors, this field uses data from satellites, aircraft, drones, and ground-based sensors to create comprehensive 3D maps of the Earth.[51][52]

- This large-scale mapping is critical for urban planning, precision agriculture, environmental monitoring (e.g., tracking deforestation or glacial retreat), and disaster management.[51][53][54]

- Projects like Google's AlphaEarth Foundations fuse vast quantities of satellite imagery, radar, and 3D laser mapping data into a unified digital representation of the planet, allowing scientists to track global changes with remarkable precision.[55]

- Pokémon Go achieved unprecedented scale, with more than 800 million downloads and more than 600 million active users, using Niantic's Visual Positioning System with centimeter-level accuracy. Niantic built a Large Geospatial Model from more than 50 million neural networks, with over 150 trillion parameters in total, trained on location data for planet-scale 3D mapping from a pedestrian perspective.[56]

The application of spatial mapping across these different orders of magnitude (centimeters for object scanning, meters for room-scale XR, kilometers for urban planning) reveals a shared theoretical foundation that holds at every scale.[55][51]

Implementation in Major XR Platforms

The leading XR platforms have each developed sophisticated and proprietary systems for spatial mapping. While they share common goals, their terminology, APIs, and specific capabilities differ, reflecting distinct approaches to solving the challenges of environmental understanding.

| Feature/Concept | Meta Quest Series | Microsoft HoloLens | Apple Vision Pro | Magic Leap |
|---|---|---|---|---|
| Core Terminology | Scene Data, Mesh Data, Point Cloud Data, Spatial Anchors [12] | Spatial Surfaces, Spatial Understanding, Scene Understanding [23] | World Anchors, Plane Detection, Scene Reconstruction (Mesh) [57] | Spaces, Dense Mesh, Feature Points, Spatial Anchors [58] |
| Primary Data Output | Labeled planes (walls, tables), 3D mesh, point clouds [12] | Triangle meshes representing surfaces [23] | 3D mesh of the environment, detected planes (horizontal/vertical) [57] | 3D triangulated dense mesh, feature point cloud, derived planes [58] |
| Semantic Understanding | Yes (Scene Data automatically labels objects like "table," "couch," "window") [12] | Yes (via the Spatial Understanding and Scene Understanding modules, which identify floors, walls, etc.) [23] | Yes (ARKit classifies detected planes and mesh faces into categories such as wall, floor, ceiling, table, and seat) | Primarily geometric, with planes derived from the dense mesh [58] |
| Persistence/Sharing | Yes (Spatial Anchors can be persisted across sessions and shared for local multiplayer via "Enhanced Spatial Services") [12][59] | Yes (World Anchors can be saved and shared between devices) | Yes (ARKit's World Anchors can be saved and shared across devices) | Yes (Spatial Anchors can be persisted in a "Space"; "Shared Spaces" use AR Cloud for multi-user colocation) [58] |
| Key Developer API/Framework | Scene API, Spatial Anchors API (within Oculus SDK and Unity/Unreal integrations) [60] | Windows Mixed Reality APIs, Mixed Reality Toolkit (MRTK) [23] | ARKit, RealityKit [57] | Magic Leap SDK (C API), Unity/Unreal Engine integrations [61] |

Microsoft HoloLens

As a pioneering device in commercial mixed reality, the Microsoft HoloLens has a mature spatial mapping system. The device continuously scans its surroundings to generate a world mapping data set, which is organized into discrete chunks called Spatial Surfaces.[5] These surfaces are represented as triangle meshes that are saved on the device and persist across different applications and sessions, creating a unified understanding of the user's environment.[23][5]

HoloLens 1 featured four environment understanding cameras for head tracking and one Time-of-Flight depth camera with a range of 0.85 to 3.1 meters, generating real-time triangle meshes of physical surfaces in world-locked spatial coordinates. HoloLens 2 launched in 2019 with significant improvements, integrating Azure Kinect's 1MP Time-of-Flight depth sensor and a 12MP CMOS RGB sensor alongside four visible-light cameras for head tracking and two infrared cameras for eye tracking. It delivers more than twice the field of view of HoloLens 1 (52° diagonal versus 34°), with 2k 3:2 light engines providing 47 pixels per degree.[10]

Academic research comparing HoloLens meshes to Terrestrial Laser Scanner ground truth shows centimeter-level accuracy. Studies report a mean Hausdorff distance of a few centimeters and a root mean squared distance of 5.42 cm between mesh vertices and the corresponding model planes. However, research also indicates a systematic 3-4% overestimation of actual distances, with larger deviations near ceilings, in transition spaces between rooms, and on weakly textured surfaces.[62]
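The two accuracy metrics quoted above can be made concrete. The Python sketch below assumes each reference model plane is given by a unit normal n and an offset d (so that n·x + d = 0). It compares vertex sets with brute-force nearest-neighbor searches, and the sample coordinates are invented for illustration. Papers define "mean Hausdorff distance" in slightly different ways, so `mean_nearest_distance` shows only one common reading.

```python
import math

Vec3 = tuple[float, float, float]

def point_plane_distance(p: Vec3, normal: Vec3, offset: float) -> float:
    """Unsigned distance from p to the plane n.x + d = 0 (normal must be unit length)."""
    return abs(p[0] * normal[0] + p[1] * normal[1] + p[2] * normal[2] + offset)

def rms_to_plane(vertices: list[Vec3], normal: Vec3, offset: float) -> float:
    """Root mean squared distance between mesh vertices and one reference plane."""
    squares = [point_plane_distance(v, normal, offset) ** 2 for v in vertices]
    return math.sqrt(sum(squares) / len(squares))

def nearest_distances(a: list[Vec3], b: list[Vec3]) -> list[float]:
    """For every point in a, the distance to its nearest neighbor in b (brute force)."""
    return [min(math.dist(p, q) for q in b) for p in a]

def hausdorff(a: list[Vec3], b: list[Vec3]) -> float:
    """Symmetric Hausdorff distance: the worst nearest-neighbor distance in either direction."""
    return max(max(nearest_distances(a, b)), max(nearest_distances(b, a)))

def mean_nearest_distance(a: list[Vec3], b: list[Vec3]) -> float:
    """Average nearest-neighbor distance from a to b (a 'mean' variant of the metric)."""
    d = nearest_distances(a, b)
    return sum(d) / len(d)

# Hypothetical floor vertices scattered a few centimeters around the plane z = 0.
floor_vertices = [(0.0, 0.0, 0.03), (1.0, 0.0, -0.04), (0.0, 1.0, 0.05), (1.0, 1.0, -0.02)]
print(round(rms_to_plane(floor_vertices, (0.0, 0.0, 1.0), 0.0), 4))
```

Brute force costs O(n·m) distance computations. For full room-scale scans, evaluations would normally use a spatial index such as a k-d tree.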

The Spatial Mapping API provides low-level access through key types including SurfaceObserver, SurfaceChange, SurfaceData, and SurfaceId. Developers specify regions of space as spheres, axis-aligned boxes, oriented boxes, or frustums. The system stores meshes in a voxel grid of 8 cm cubes. A configurable triangles-per-cubic-meter setting controls the level of detail, and 2,000 triangles per cubic meter is recommended for balanced performance.[23]
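The voxel size and triangle density mentioned above reduce to simple bookkeeping. The Python sketch below is not the HoloLens API. It is a hypothetical illustration that (a) maps a world-space point to the index of the 8 cm voxel containing it and (b) gives a rough triangle estimate for an axis-aligned observation box at 2,000 triangles per cubic meter. The function names and the sample box are assumptions.

```python
import math

VOXEL_SIZE_M = 0.08       # 8 cm voxels, as described above
TRIANGLES_PER_M3 = 2000   # recommended density from the text

def voxel_index(x: float, y: float, z: float,
                size: float = VOXEL_SIZE_M) -> tuple[int, int, int]:
    """Integer grid coordinates of the voxel that contains the point (x, y, z)."""
    # floor() keeps negative coordinates in the correct cell; int() would truncate toward zero.
    return (math.floor(x / size), math.floor(y / size), math.floor(z / size))

def triangle_budget(extent_m: tuple[float, float, float],
                    density: float = TRIANGLES_PER_M3) -> int:
    """Rough triangle count for an axis-aligned box of the given width, height, depth."""
    w, h, d = extent_m
    return round(w * h * d * density)

# A hypothetical 5 m x 3 m x 4 m observation box: 60 m^3 at 2,000 triangles/m^3.
print(triangle_budget((5.0, 3.0, 4.0)))     # 120000
print(voxel_index(0.10, -0.05, 1.00))       # (1, -1, 12)
```

A real mesher spends triangles according to surface detail rather than volume, so this is only an order-of-magnitude estimate for sizing buffers or choosing an observation region.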

To provide developers with higher-level environmental context, Microsoft offers the Mixed Reality Toolkit (MRTK). The MRTK includes a Spatial Understanding module (and its successor, Scene Understanding) that analyzes the raw geometric mesh to identify and label key architectural elements like floors, walls, and ceilings. It can also identify suitable locations for placing holographic content based on constraints, such as finding an empty space on a wall or a flat surface on a desk.[23] The HoloLens mapping system is optimized for indoor environments and performs best in well-lit spaces, as it can struggle with dark, highly reflective, or transparent surfaces.[6]

Real-world implementations have reported substantial returns on investment. ThyssenKrupp equipped 24,000 elevator service engineers with HoloLens and achieved a fourfold reduction in average service call length. Newport News Shipbuilding overlays information onto real-world shipbuilding environments so that workers can access it hands-free. Porsche Cars North America uses HoloLens 2 with Dynamics 365 Guides and Remote Assist to support vehicle maintenance at its dealer partners, improving process compliance and enabling on-the-job learning.[10]

Apple ARKit

Apple ARKit democratized spatial mapping by bringing sophisticated AR capabilities to hundreds of millions of iOS devices without requiring specialized hardware. ARKit 1 launched in 2017 with iOS 11, providing basic horizontal plane detection, Visual Inertial Odometry for tracking, and scene understanding on devices with A9 processors or later.[18]

ARKit 3.5 in iOS 13.4 (March 2020) marked a major step with the Scene Geometry API, powered by the LiDAR Scanner on the iPad Pro (4th generation). This first LiDAR-based spatial mapping in ARKit provided instant plane detection without scanning, triangle mesh reconstruction with classification into semantic categories (wall, floor, ceiling, table, seat, window, door), and enhanced raycasting against scene geometry. ARKit 4 (iOS 14) then added per-pixel depth information through the Depth API. The system could exclude people from reconstructed meshes and had an effective range of up to 5 meters.[20]

Apple's LiDAR Scanner uses Vertical-Cavity Surface-Emitting Laser (VCSEL) emitters with direct Time-of-Flight measurement and Single-Photon Avalanche Diode (SPAD) detection. Research studies report a maximum range of 5 meters, with the most reliable detection for objects whose side length exceeds 10 cm. They also report an absolute accuracy of ±1 cm (10 mm) at optimal distances and a point density of 7,225 points/m² at a distance of 25 cm.[28]
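Direct Time-of-Flight ranging rests on a single relation. The emitted pulse travels to the surface and back, so the distance is d = c·t/2 for a measured round-trip time t. The Python sketch below applies that relation. It also converts the quoted point density into an approximate point spacing, assuming (as an idealization) that the points form a uniform square grid.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0

def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a surface from the round-trip time of a light pulse."""
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0

def round_trip_time_s(distance_m: float) -> float:
    """Round-trip time of a light pulse for a surface at distance_m."""
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S

def grid_spacing_m(points_per_m2: float) -> float:
    """Spacing between neighboring points if they form a uniform square grid."""
    return 1.0 / math.sqrt(points_per_m2)

# At the 5 m maximum range the pulse returns after roughly 33 nanoseconds,
print(round(round_trip_time_s(5.0) * 1e9, 1))
# and 7,225 points/m^2 corresponds to a grid spacing of about 1.2 cm.
print(round(grid_spacing_m(7225) * 100, 2))
```

The same relation explains why direct ToF needs very fine timing resolution: a 1 cm distance difference corresponds to only about 67 picoseconds of round-trip time.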

The Scene Reconstruction API represents the environment through ARMeshAnchor objects, which contain mesh geometry for detected physical objects. ARMeshGeometry stores this information in an array-based format that includes vertices, normals, faces (triangle indices), and an optional per-triangle classification. The reconstruction is divided into smaller submeshes, each held in its own ARMeshAnchor, and ARKit updates them over time to reflect changes in the real world.[20]
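The array-based layout described above (a vertex array, a triangle index array, and an optional per-triangle classification) is straightforward to process. The Python sketch below mimics that layout with plain lists. It is not ARKit's Swift API, and the integer class codes and sample mesh are hypothetical. The code sums triangle areas per classification, which an application might use to estimate, for example, how much floor has been scanned.

```python
import math
from collections import defaultdict

Vec3 = tuple[float, float, float]

# Hypothetical class codes; the category names follow those listed for ARKit above.
CLASS_NAMES = ["none", "wall", "floor", "ceiling", "table", "seat", "window", "door"]

def triangle_area(a: Vec3, b: Vec3, c: Vec3) -> float:
    """Area of a triangle: half the length of the cross product of two edges."""
    u = [b[i] - a[i] for i in range(3)]
    v = [c[i] - a[i] for i in range(3)]
    cross = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
    return 0.5 * math.sqrt(sum(x * x for x in cross))

def area_by_class(vertices: list[Vec3], faces: list[tuple[int, int, int]],
                  classification: list[int]) -> dict[str, float]:
    """Total surface area per classification label, one label per triangle."""
    totals: dict[str, float] = defaultdict(float)
    for (i, j, k), code in zip(faces, classification):
        totals[CLASS_NAMES[code]] += triangle_area(vertices[i], vertices[j], vertices[k])
    return dict(totals)

# A 2 m x 2 m floor patch (two triangles) and a 1 m x 1 m wall patch (two triangles).
vertices = [(0, 0, 0), (2, 0, 0), (2, 0, 2), (0, 0, 2),   # floor in the y = 0 plane
            (0, 0, 0), (1, 0, 0), (1, 1, 0), (0, 1, 0)]   # wall in the z = 0 plane
faces = [(0, 1, 2), (0, 2, 3), (4, 5, 6), (4, 6, 7)]
classification = [2, 2, 1, 1]
print(area_by_class(vertices, faces, classification))
```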

The RoomPlan framework, introduced in June 2022 and released with iOS 16, provides a Swift API powered by ARKit that uses the camera and LiDAR Scanner to create 3D floor plans with parametric models. Using machine learning, the framework detects walls, windows, doors, openings, and fireplaces, as well as furniture including sofas, tables, chairs, beds, and cabinets. Recommended practices include a maximum room size of 30 ft × 30 ft (9 m × 9 m), lighting of at least 50 lux, and scans shorter than 5 minutes to avoid thermal issues.[20]

Google ARCore

Google ARCore launched in 2017 as the company's platform-agnostic augmented reality SDK, providing cross-platform APIs for Android, iOS, Unity, and Web after discontinuing the hardware-dependent Project Tango. ARCore achieves spatial understanding without specialized sensors through depth-from-motion algorithms that compare multiple device images from different angles, combining visual information with IMU measurements running at 1000 Hz. The system performs motion tracking at 60 fps using Simultaneous Localization and Mapping with visual and inertial data fusion.[63]

The public launch of the Depth API in ARCore 1.18 (June 2020) brought occlusion capabilities to hundreds of millions of compatible Android devices. The depth-from-motion algorithm creates depth images from the RGB camera and device movement, and it selectively uses machine learning to increase depth processing even when the user moves very little. Each pixel of a depth image is a 16-bit unsigned integer giving the distance from the camera in millimeters, so the representable range is 0 to about 65 meters. Results are most accurate for real-world surfaces 0.5 to 5 meters away.[28]

The Depth API enables multiple capabilities: occlusion where virtual objects accurately appear behind real-world objects, scene transformation with virtual effects interacting with surfaces, distance and depth of field effects, user interactions enabling collision and physics with real-world objects, improved hit-tests working on non-planar and low-texture areas, and 3D reconstruction converting depth to point clouds for geometry analysis.[63]
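The point-cloud use case involves two steps. First, the 16-bit depth values are converted to meters; millimeter units are what produce the roughly 65 m ceiling, since 65,535 mm ≈ 65.5 m. Second, each pixel is back-projected through a pinhole camera model. The Python sketch below assumes that a value of 0 marks a pixel with no depth estimate and that the camera intrinsics (fx, fy, cx, cy) are known. It uses the computer-vision axis convention (x right, y down, z forward), whereas graphics APIs often flip y and z. No ARCore calls are made, and the toy image and intrinsics are invented.

```python
from typing import Optional

Vec3 = tuple[float, float, float]

MAX_DEPTH_MM = 65_535  # largest value a 16-bit unsigned integer can hold

def depth_to_meters(raw_mm: int) -> Optional[float]:
    """Convert a 16-bit millimeter depth value to meters; 0 is treated as 'no data'."""
    if not 0 <= raw_mm <= MAX_DEPTH_MM:
        raise ValueError("depth value does not fit in 16 bits")
    return None if raw_mm == 0 else raw_mm / 1000.0

def back_project(u: int, v: int, z: float,
                 fx: float, fy: float, cx: float, cy: float) -> Vec3:
    """Pinhole back-projection of pixel (u, v) at depth z into camera coordinates."""
    return ((u - cx) * z / fx, (v - cy) * z / fy, z)

def depth_image_to_points(depth_mm: list[list[int]],
                          fx: float, fy: float, cx: float, cy: float) -> list[Vec3]:
    """Turn a row-major depth image into a point cloud, skipping pixels without depth."""
    points = []
    for v, row in enumerate(depth_mm):
        for u, raw in enumerate(row):
            z = depth_to_meters(raw)
            if z is not None:
                points.append(back_project(u, v, z, fx, fy, cx, cy))
    return points

# A toy 2 x 3 depth image (millimeters) with one missing pixel.
toy = [[1500, 0, 1500],
       [2000, 2000, 2000]]
cloud = depth_image_to_points(toy, fx=100.0, fy=100.0, cx=1.0, cy=0.5)
print(len(cloud))
```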

The Geospatial API launched in May 2022, providing world-scale AR in more than 100 countries covered by Google Street View. Its Visual Positioning System is built from more than 15 years of Street View imagery. Deep neural networks identify recognizable image features in that imagery, producing a 3D point cloud with trillions of points across the globe. The system determines a device's position and orientation from camera images in less than a second, returning latitude, longitude, and altitude with sub-meter accuracy, compared with the 5-10 meter accuracy typical of GPS.[63]

Meta Quest

Meta Quest evolved from pure virtual reality to mixed reality through progressively more capable spatial mapping. Quest 3, launched in 2023, features the Snapdragon XR2 Gen 2, which offers twice the GPU performance of the Quest 2 chip. It has dual LCD displays at 2064×2208 pixels per eye and a sensor array that includes two 4MP RGB cameras for full-color passthrough, four hybrid monochrome/IR cameras for tracking, and an IR patterned light emitter used for depth sensing.[12]

The Scene API enables semantic understanding of physical environments through system-generated scene models with semantic labels including floor, ceiling, walls, desk, couch, table, window, and lamp. The API provides bounded 2D entities defining surfaces like walls and floors with 2D boundaries and bounding boxes, bounded 3D entities for objects like furniture with 3D bounding boxes, and room layout with automatic room structure detection and classification.[12]

Quest 3's Smart Guardian uses automatic room scanning. It suggests boundaries based on room geometry using AI, scans the room progressively without manual boundary drawing, uses the integrated depth sensor for better spatial understanding, and automatically detects the walls, ceiling, and floor that form the room box. Space Sense displays outlines of people, pets, and objects entering the play area from up to 9 feet (2.7 meters) away.[12]

The Mesh API provides a geometric representation of the environment as a single triangle mesh that captures walls, ceilings, and floors automatically during Space Setup. This enables accurate physics simulation and collision detection, such as bouncing virtual balls, lasers, and projectiles off physical surfaces, as well as AI navigation and pathfinding for virtual characters. Rooms average 30,000 vertices, and the maximum observed for complex rooms is 63,000 vertices.[12]
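Bouncing a projectile off the room mesh comes down to two operations: finding where a ray first hits a triangle and reflecting the direction about that triangle's normal. The Python sketch below uses the well-known Möller-Trumbore ray-triangle test and the reflection formula r = d − 2(d·n)n on plain tuples. It is not part of Meta's SDK, where a physics engine would normally handle collisions against the mesh collider. The sample triangle and ray are invented.

```python
import math
from typing import Optional

Vec3 = tuple[float, float, float]
EPSILON = 1e-9

def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def ray_triangle(origin: Vec3, direction: Vec3,
                 v0: Vec3, v1: Vec3, v2: Vec3) -> Optional[float]:
    """Möller-Trumbore test: distance t along the ray to the hit point, or None."""
    e1, e2 = sub(v1, v0), sub(v2, v0)
    p = cross(direction, e2)
    det = dot(e1, p)
    if abs(det) < EPSILON:                 # ray is parallel to the triangle's plane
        return None
    inv_det = 1.0 / det
    s = sub(origin, v0)
    u = dot(s, p) * inv_det                # first barycentric coordinate
    if u < 0.0 or u > 1.0:
        return None
    q = cross(s, e1)
    v = dot(direction, q) * inv_det        # second barycentric coordinate
    if v < 0.0 or u + v > 1.0:
        return None
    t = dot(e2, q) * inv_det
    return t if t > EPSILON else None      # ignore hits behind the ray origin

def reflect(direction: Vec3, normal: Vec3) -> Vec3:
    """Mirror a direction about a surface; the normal's sign and length do not matter."""
    length = math.sqrt(dot(normal, normal))
    n = (normal[0] / length, normal[1] / length, normal[2] / length)
    k = 2.0 * dot(direction, n)
    return (direction[0] - k * n[0], direction[1] - k * n[1], direction[2] - k * n[2])

# A ball thrown downward and forward toward a floor triangle in the y = 0 plane.
floor = ((-5.0, 0.0, -5.0), (5.0, 0.0, -5.0), (0.0, 0.0, 5.0))
origin, direction = (0.0, 1.0, 0.0), (0.0, -1.0, 1.0)
t = ray_triangle(origin, direction, *floor)
normal = cross(sub(floor[1], floor[0]), sub(floor[2], floor[0]))
print(t, reflect(direction, normal))
```

For a mesh of tens of thousands of vertices, testing every triangle per projectile would be wasteful. Engines typically place the triangles in a bounding-volume hierarchy first and run this test only on candidates.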

The Depth API provides real-time depth maps from the user's point of view, using the IR patterned light emitter (a line projector) together with the dual front-facing IR cameras. Depth is computed from the disparity between the stereo tracking cameras, with an effective range of up to approximately 5 meters and new depth information every frame. This enables dynamic occlusion that hides virtual objects behind physical ones in real time.[12]
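Stereo depth follows from similar triangles. For two rectified cameras with focal length f (in pixels) and baseline B, a feature that shifts by d pixels between the two images lies at depth Z = f·B/d. Dynamic occlusion then reduces to a per-pixel comparison: a virtual fragment is hidden when the real surface at that pixel is closer. The Python sketch below illustrates both steps. The focal length, baseline, and 2 cm bias are hypothetical values, not Quest 3 calibration data.

```python
from typing import Optional

def disparity_to_depth(disparity_px: float, focal_px: float,
                       baseline_m: float) -> Optional[float]:
    """Depth from stereo disparity, Z = f * B / d; None when there is no valid match."""
    if disparity_px <= 0.0:
        return None
    return focal_px * baseline_m / disparity_px

def is_occluded(virtual_depth_m: float, real_depth_m: Optional[float],
                bias_m: float = 0.02) -> bool:
    """True if the real surface is in front of the virtual fragment at this pixel.

    bias_m is a small hypothetical tolerance that suppresses flicker where a virtual
    object touches a real surface; pixels without real depth never occlude.
    """
    if real_depth_m is None:
        return False
    return real_depth_m + bias_m < virtual_depth_m

# Hypothetical rig: 450 px focal length, 6.4 cm baseline.
FOCAL_PX, BASELINE_M = 450.0, 0.064
for d in (28.8, 14.4, 5.76):
    print(d, disparity_to_depth(d, FOCAL_PX, BASELINE_M))
```

Depth is inversely proportional to disparity, so errors grow with distance. With these numbers, a one-pixel disparity error moves a 1 m estimate by only a few centimeters, but it moves a 5 m estimate by roughly three-quarters of a meter. This is one reason stereo depth has a limited effective range.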

Recent software updates have significantly expanded capabilities, introducing multi-room support that allows a user to scan and persist a map of up to 15 rooms and move freely between them without losing tracking or anchor positions.[59]

Magic Leap

Magic Leap devices build and maintain persistent 3D maps of the environment called Spaces.[58][61] A Space is a comprehensive digital representation composed of feature points (unique visual points in the environment used for precise location and orientation determination), dense mesh (3D triangulated geometry of room surfaces), and spatial anchors (persistent coordinate systems for anchoring virtual content).[58]

Magic Leap 2 emerged as HoloLens's primary enterprise competitor. It has a 70° diagonal field of view (the largest in its class, versus 52° for HoloLens 2) and a headset weight of just 260 grams, because computing is handled by a separate Compute Pack; the all-in-one HoloLens 2 weighs 566 grams. The device features 16GB of RAM, 256GB of storage, a dual-CPU configuration with a high-performance GPU, and Dynamic Dimming technology.[58]

Spatial capabilities include on-device spatial mapping that stores up to 5 local spaces of 250 m² each, unlimited shared spaces via AR Cloud (deprecated in January 2025), and ad-hoc localization with background processing. The device uses its world cameras and depth sensor to construct the dense mesh and performs best with slow, deliberate user movement in well-lit, textured environments.[61]

The July 2022 partnership with NavVis combines Magic Leap's spatial computing platform with NavVis mobile mapping systems and IVION Enterprise spatial data platform, enabling pre-mapping and deployment of digital twins in warehouses, retail stores, offices, and factories covering up to millions of square feet with photorealistic accuracy. Customers including BMW, Volkswagen, Siemens, and Audi leverage this integration.[64]

Challenges and Limitations

Despite significant advancements, spatial mapping technology faces several inherent challenges that stem from the complexity and unpredictability of the physical world. These limitations impact the accuracy, robustness, and practicality of XR applications.

Dynamic Environments

Most spatial mapping systems are designed with the assumption that the environment is largely static. However, real-world spaces are dynamic. Moving objects such as people walking through a room, pets, or even something as simple as a door opening can introduce errors into the spatial map.[65] These changes can lead to artifacts like "hallucinations" (surfaces appearing in the map where none exist) or holes in the mesh where an object used to be.[6] The real-time detection, tracking, and segmentation of dynamic objects is a major computational challenge and an active area of research in computer vision and robotics.[31][65]
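One common way to keep transient objects from leaving permanent "hallucinations" is to treat each map cell probabilistically. The cell accumulates evidence for "occupied" when a depth ray ends in it and evidence for "free" when a ray passes through it. The Python sketch below shows this log-odds update, the approach used by occupancy-mapping libraries such as OctoMap. The increment, decrement, and clamping constants are illustrative assumptions, and XR platforms do not necessarily use this scheme.

```python
import math

L_HIT = 0.85              # log-odds added when a depth ray ends in the cell
L_MISS = -0.4             # log-odds added when a ray passes through the cell
L_MIN, L_MAX = -2.0, 3.5  # clamping bounds so a cell can change state again quickly

class OccupancyCell:
    """A single map cell that tracks occupancy evidence in log-odds form."""

    def __init__(self) -> None:
        self.log_odds = 0.0  # 0.0 corresponds to probability 0.5 (unknown)

    def update(self, hit: bool) -> None:
        delta = L_HIT if hit else L_MISS
        self.log_odds = max(L_MIN, min(L_MAX, self.log_odds + delta))

    @property
    def probability(self) -> float:
        return 1.0 - 1.0 / (1.0 + math.exp(self.log_odds))

    @property
    def occupied(self) -> bool:
        return self.probability > 0.5

# A person stands in the cell for 5 frames, then walks away for 10 frames.
cell = OccupancyCell()
for _ in range(5):
    cell.update(hit=True)
print(cell.occupied, round(cell.probability, 3))
for _ in range(10):
    cell.update(hit=False)
print(cell.occupied, round(cell.probability, 3))
```

The upper clamp matters here. Without it, five hits would leave a log-odds value of 4.25, and after ten free observations the cell would still read as occupied (4.25 − 4.0 = 0.25 > 0). That is exactly the kind of stale surface described above.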

Sensor and Algorithmic Constraints

The performance of spatial mapping is fundamentally limited by the capabilities of its hardware and software.

- Problematic Surfaces: Onboard sensors often struggle with certain types of materials. Transparent surfaces like glass, highly reflective surfaces like mirrors, and textureless or dark, light-absorbing surfaces can fail to return usable data to depth sensors, resulting in gaps or inaccuracies in the map.[5][6][66]

- Drift: Tracking systems that rely on odometry (estimating motion from sensor data) are susceptible to small, accumulating errors over time. This phenomenon, known as drift, can cause the digital map to become misaligned with the real world. While algorithms use techniques like loop closure to correct for drift, it can still be a significant problem in large, feature-poor environments (like a long, white hallway).[31][25]

- Scale and Boundaries: The way spatial data is aggregated and defined can influence analytical results, a concept known in geography as the Modifiable Areal Unit Problem (MAUP). This problem highlights that statistical outcomes can change based on the shape and scale of the zones used for analysis, which has parallels in how room-scale maps are chunked and interpreted.[67][68]
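A deliberately simplified model illustrates drift and loop closure. The Python sketch below integrates odometry with a small constant heading bias around a square loop, shows that the estimated end point does not return to the start, and then distributes the closing error linearly along the trajectory. Real systems solve a pose-graph or bundle-adjustment optimization instead of this linear correction. The bias value and function names are hypothetical.

```python
import math

Point = tuple[float, float]

def integrate_odometry(steps: list[tuple[float, float]], heading_bias_rad: float) -> list[Point]:
    """Dead-reckon a 2D path from (distance, turn) steps with a constant heading error."""
    x, y, heading = 0.0, 0.0, 0.0
    path = [(x, y)]
    for distance, turn in steps:
        heading += turn + heading_bias_rad      # the small error accumulates every step
        x += distance * math.cos(heading)
        y += distance * math.sin(heading)
        path.append((x, y))
    return path

def close_loop(path: list[Point]) -> list[Point]:
    """Spread the end-point error linearly so the loop ends where it started.

    Assumes the true trajectory returns to path[0]; pose i is shifted by i/n of the error.
    """
    n = len(path) - 1
    ex, ey = path[-1][0] - path[0][0], path[-1][1] - path[0][1]
    return [(px - ex * i / n, py - ey * i / n) for i, (px, py) in enumerate(path)]

# Walk a 10 m x 10 m square in 1 m steps, turning 90 degrees at the start of each new side.
steps = [(1.0, math.pi / 2 if (k == 0 and side > 0) else 0.0)
         for side in range(4) for k in range(10)]
drifted = integrate_odometry(steps, heading_bias_rad=0.005)
corrected = close_loop(drifted)
print("gap before closure (m):", round(math.dist(drifted[0], drifted[-1]), 3))
print("gap after closure (m):", round(math.dist(corrected[0], corrected[-1]), 3))
```

Even a 0.005 rad error per step opens a gap of more than a meter over a 40 m loop. A long, featureless hallway offers no chance to detect the revisited start point, so the gap is never corrected.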

Computational and Power Requirements

Real-time spatial mapping is a computationally demanding task. It requires significant processing power to continuously fuse data from multiple sensors, run complex SLAM algorithms, and generate and render the 3D mesh.[25][69] On untethered, battery-powered devices like XR headsets, this creates a constant trade-off between the quality of the mapping, the performance of the application, and the device's battery life.[26]

Many systems currently perform spatial mapping as an initial scan (requiring the user to pan the device around for some time); if the real environment changes after this scan (furniture moved, etc.), the virtual content will no longer align perfectly until the map is updated. Continuous real-time mapping (as on high-end devices) mitigates this but at a cost of higher processing and power use.[10]

Data Privacy and Security

By its very nature, spatial mapping captures a detailed 3D blueprint of a user's private spaces, including room layouts, furniture, and potentially sensitive personal items.[13][26] This raises significant privacy and security concerns about how this intimate data is stored, processed, and potentially shared with third-party applications or cloud services.[13]

In response, platform providers like Meta and Magic Leap have implemented explicit permission systems that require users to grant individual applications access to their spatial data before it can be used.[12][58] Device makers emphasize that spatial maps are generally processed locally and often never leave the device, and that they encode only abstract geometry rather than high-resolution color imagery of the scene. Spatial maps typically do not include identifiable details such as text on documents or people's faces, and moving objects and people are usually omitted during scanning.[58]

These technical challenges highlight an important reality for XR development: the digital map will always be an imperfect approximation of the physical world. A flawless, error-free spatial map can be approached but never fully reached. Successful XR application design therefore depends not only on better mapping technology but also on software that is resilient to these imperfections.

Future Directions

The field of spatial mapping is rapidly evolving, driven by advancements in artificial intelligence, cloud infrastructure, and the growing need for interoperability in the emerging spatial computing ecosystem.

Semantic Spatial Understanding

The next major frontier for spatial mapping is the shift from purely geometric understanding (knowing where a surface is) to semantic understanding (knowing what a surface is).[35][70] This involves leveraging AI and machine learning algorithms to analyze the map data and automatically identify, classify, and label objects and architectural elements in real-time—for example, recognizing a surface as a "couch," an opening as a "door," or an object as a "chair."[12][35]

This capability, already emerging in platforms like Meta Quest's Scene API, will enable a new generation of intelligent and context-aware XR experiences. Virtual characters could realistically interact with the environment (e.g., sitting on a recognized couch), applications could automatically adapt their UI to the user's specific room layout, and digital assistants could understand commands related to physical objects ("place the virtual screen on that wall").[70]

Neural Rendering and AI-Powered Mapping

Neural Radiance Fields (NeRF), introduced by UC Berkeley researchers in March 2020, transformed 3D scene representation. A neural network encodes the scene as a continuous volumetric function that produces photorealistic novel views. Key variants address its limitations: Instant-NGP (2022) reduces training from hours to seconds through multi-resolution hash encoding, while Mip-NeRF (2021) adds anti-aliasing for better rendering at multiple scales.[71]
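A continuous volumetric scene function becomes an image through volume rendering. Along each camera ray, the network is queried at sample points for a density σ and a color c. The samples are then composited with the quadrature rule from the original NeRF paper, C ≈ Σ T_i (1 − exp(−σ_i δ_i)) c_i, where the transmittance is T_i = exp(−Σ_{j<i} σ_j δ_j). The Python sketch below implements only this compositing step. Hand-picked sample values stand in for network outputs.

```python
import math

RGB = tuple[float, float, float]

def composite_ray(densities: list[float], colors: list[RGB],
                  deltas: list[float]) -> tuple[RGB, float]:
    """NeRF-style quadrature: returns the ray color and its accumulated opacity.

    densities[i] is sigma_i, colors[i] is c_i, and deltas[i] is the distance
    between sample i and sample i + 1 along the ray.
    """
    transmittance = 1.0                          # T_i: chance the ray reaches sample i
    r = g = b = 0.0
    for sigma, (cr, cg, cb), delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this ray segment
        weight = transmittance * alpha
        r, g, b = r + weight * cr, g + weight * cg, b + weight * cb
        transmittance *= 1.0 - alpha             # equals exp(-sum of sigma_j * delta_j)
    return (r, g, b), 1.0 - transmittance

# Empty space, then a dense red surface, then a green surface hidden behind it.
densities = [0.0, 50.0, 50.0]
colors = [(0.0, 0.0, 1.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
deltas = [0.1, 0.1, 0.1]
color, opacity = composite_ray(densities, colors, deltas)
print([round(x, 3) for x in color], round(opacity, 3))
```

The blue sample contributes nothing because its density is zero, and the green sample contributes under 1% because the red surface has already absorbed almost all of the transmittance. The network must be evaluated at every sample of every ray, which is why NeRF rendering is expensive.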

3D Gaussian Splatting emerged in August 2023 as a breakthrough, achieving real-time rendering at 30+ fps at 1080p, 100 to 1000 times faster than NeRF. The technique represents scenes explicitly with millions of 3D Gaussians rather than through NeRF's implicit neural encoding. This real-time rendering is crucial for interactive AR/VR applications.[72]
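NeRF queries a network along each ray, whereas 3D Gaussian Splatting projects its Gaussians onto the image and blends them per pixel. Each projected Gaussian contributes α = o·exp(−½ dᵀΣ⁻¹d). Here o is its learned opacity, Σ its 2D screen-space covariance, and d the pixel's offset from its center. Splats are sorted by depth and composited front to back. The Python sketch below shows that per-pixel step for a few already-projected splats. It omits the method's projection from 3D, tiling, and GPU rasterization. The `Splat2D` fields, the early-termination threshold, and the sample values are assumptions.

```python
import math
from dataclasses import dataclass

@dataclass
class Splat2D:
    """A Gaussian already projected to screen space (hypothetical minimal fields)."""
    center: tuple[float, float]
    cov: tuple[float, float, float]     # (sxx, sxy, syy) of a symmetric 2x2 covariance
    opacity: float
    color: tuple[float, float, float]
    depth: float

def splat_alpha(s: Splat2D, px: float, py: float) -> float:
    """alpha = opacity * exp(-0.5 * d^T Sigma^-1 d) at pixel (px, py)."""
    sxx, sxy, syy = s.cov
    det = sxx * syy - sxy * sxy
    inv_xx, inv_xy, inv_yy = syy / det, -sxy / det, sxx / det   # inverse of the 2x2 matrix
    dx, dy = px - s.center[0], py - s.center[1]
    mahalanobis_sq = inv_xx * dx * dx + 2.0 * inv_xy * dx * dy + inv_yy * dy * dy
    return s.opacity * math.exp(-0.5 * mahalanobis_sq)

def shade_pixel(splats: list[Splat2D], px: float, py: float,
                min_transmittance: float = 1e-4) -> tuple[float, float, float]:
    """Front-to-back alpha blending of depth-sorted splats at one pixel."""
    transmittance = 1.0
    out = [0.0, 0.0, 0.0]
    for s in sorted(splats, key=lambda sp: sp.depth):   # nearest splat first
        a = splat_alpha(s, px, py)
        for i in range(3):
            out[i] += transmittance * a * s.color[i]
        transmittance *= 1.0 - a
        if transmittance < min_transmittance:           # pixel is effectively opaque
            break
    return (out[0], out[1], out[2])

near = Splat2D((10.0, 10.0), (4.0, 0.0, 4.0), 0.8, (1.0, 0.0, 0.0), depth=1.0)
far = Splat2D((10.0, 10.0), (9.0, 0.0, 9.0), 0.9, (0.0, 0.0, 1.0), depth=3.0)
print(shade_pixel([far, near], 10.0, 10.0))
```

Evaluating a closed-form Gaussian per pixel is far cheaper than querying a neural network at many samples per ray. This cost difference is the main source of the speedup described above.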

The Role of Edge Computing and the Cloud

To overcome the processing and power limitations of mobile XR devices, computationally intensive spatial mapping tasks will increasingly be offloaded to the network edge or the cloud.[73] In this split-compute model, a lightweight headset would be responsible for capturing raw sensor data and sending it to a powerful nearby edge server. The server would then perform the heavy lifting—running SLAM algorithms, generating the mesh, and performing semantic analysis—and stream the resulting map data back to the device with extremely low latency.[73]

Furthermore, the cloud will play a crucial role in creating and hosting large-scale, persistent spatial maps, often referred to as digital twins or the AR Cloud. By aggregating and merging map data from many users, it will be possible to build and maintain a shared, persistent digital replica of real-world locations, enabling multi-user experiences at an unprecedented scale.[58][73]

Standardization and Interoperability

The current spatial mapping landscape is fragmented, with each major platform (Meta, Apple, Microsoft, etc.) using its own proprietary data formats and APIs.[73] This lack of interoperability is a significant barrier to creating a unified metaverse or a truly open AR ecosystem where experiences can be shared seamlessly across different devices.

For the field to mature, industry-wide standards for spatial mapping data will be necessary. Initiatives like OpenXR provide a crucial first step by standardizing the API for device interaction, but future standards will need to address the format and exchange of the spatial map data itself—including point clouds, meshes, and semantic labels. This will be essential to ensure that a map created by one device can be understood and used by another, fostering a more collaborative and interconnected spatial web.[73]

Many AR SDKs provide APIs to access spatial mapping data. For instance, Apple's ARKit can generate a mesh of the environment on devices with a LiDAR Scanner (exposing it through `ARMeshAnchor` objects), and Google's ARCore provides a Depth API that yields per-pixel depth maps which can be converted into spatial meshes. These frameworks use the device's camera(s) and sensors to detect real-world surfaces so developers can place virtual content convincingly in the scene.[20][63]
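Converting a per-pixel depth map into a mesh can be done by grid triangulation. Every valid pixel is back-projected, and each 2×2 block of neighboring pixels is split into two triangles. Blocks with missing depth, or with a large depth jump (which usually marks an object edge rather than a continuous surface), are skipped. The Python sketch below assumes application code performs this step. It uses a pinhole model with hypothetical intrinsics and a hypothetical 10 cm discontinuity threshold, and the function name is illustrative.

```python
from typing import Optional

Vec3 = tuple[float, float, float]

def depth_grid_to_mesh(depth_m: list[list[Optional[float]]],
                       fx: float, fy: float, cx: float, cy: float,
                       max_jump_m: float = 0.10) -> tuple[list[Vec3], list[tuple[int, int, int]]]:
    """Triangulate a depth map (meters, None = missing) into vertices and faces."""
    rows, cols = len(depth_m), len(depth_m[0])
    vertices: list[Vec3] = []
    index: dict[tuple[int, int], int] = {}     # (row, col) -> vertex index
    for v in range(rows):
        for u in range(cols):
            z = depth_m[v][u]
            if z is not None:
                index[(v, u)] = len(vertices)
                vertices.append(((u - cx) * z / fx, (v - cy) * z / fy, z))

    faces: list[tuple[int, int, int]] = []
    for v in range(rows - 1):
        for u in range(cols - 1):
            corners = [(v, u), (v, u + 1), (v + 1, u), (v + 1, u + 1)]
            if not all(c in index for c in corners):
                continue                        # a missing pixel: leave a hole
            depths = [depth_m[r][c] for r, c in corners]
            if max(depths) - min(depths) > max_jump_m:
                continue                        # depth discontinuity: likely an object edge
            tl, tr, bl, br = (index[c] for c in corners)
            faces.append((tl, bl, tr))          # two triangles per block, same winding
            faces.append((tr, bl, br))
    return vertices, faces

# A 3 x 3 depth map: a flat wall at 2 m with one pixel much closer (a foreground object).
depth = [[2.0, 2.0, 2.0],
         [2.0, 2.0, 2.0],
         [2.0, 2.0, 0.5]]
verts, tris = depth_grid_to_mesh(depth, fx=100.0, fy=100.0, cx=1.0, cy=1.0)
print(len(verts), len(tris))
```

Skipping discontinuous blocks avoids "rubber sheet" triangles stretched between a foreground object and the wall behind it, at the cost of small holes along object edges.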

Market Growth and Adoption

Market projections indicate rapid growth. The spatial computing market reached $93.25 billion in 2024 and is projected to reach $512 billion by 2032, a compound annual growth rate (CAGR) of 23.7%. The AR market is expected to reach $635.67 billion by 2033, and the VR market is projected to exceed $200 billion by 2030. Mobile AR users totaled 1.03 billion in 2024 and are projected to reach 1.19 billion by 2028.[74]
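The growth rate quoted for spatial computing can be checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1/years) − 1. The short Python sketch below applies it to the 2024 and 2032 figures above, which are eight years apart. It verifies only the internal arithmetic of the projection, not the projection itself.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate between two values a given number of years apart."""
    return (end_value / start_value) ** (1.0 / years) - 1.0

def project(start_value: float, rate: float, years: float) -> float:
    """Value after compounding start_value at `rate` per year for `years` years."""
    return start_value * (1.0 + rate) ** years

# Spatial computing market, USD billions: 93.25 (2024) -> 512 (2032).
rate = cagr(93.25, 512.0, 2032 - 2024)
print(f"{rate:.1%}")                          # 23.7%
print(round(project(93.25, 0.237, 8), 1))     # about 511, consistent with the $512B figure
```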

Enterprise adoption is accelerating across industries. Manufacturing represents approximately 25% of XR investments, healthcare and medical applications roughly 18%, and architecture and construction about 15%. Surveys indicate that 75% of companies that have implemented XR report efficiency improvements of 10% or more, while 91% of surveyed companies plan VR/AR implementations in 2025.[75]

See Also

- Augmented reality

- Computer Vision

- Depth perception

- Extended reality

- Geospatial mapping

- LiDAR

- Mesh

- Mixed reality

- Point Cloud

- Projection mapping

- Simultaneous localization and mapping

- Spatial computing

- Virtual reality

References

- ↑ 1.00 1.01 1.02 1.03 1.04 1.05 1.06 1.07 1.08 1.09 1.10 1.11 1.12 1.13 1.14 1.15 "Spatial Mapping Overview". Stereolabs. https://www.stereolabs.com/docs/spatial-mapping.

- ↑ 2.00 2.01 2.02 2.03 2.04 2.05 2.06 2.07 2.08 2.09 2.10 2.11 2.12 2.13 "Spatial Mapping". Zaubar. https://about.zaubar.com/en/xr-ai-lexicon/spatial-mapping.

- ↑ 3.0 3.1 3.2 3.3 "Spatial Mapping Overview". Stereolabs. https://www.stereolabs.com/docs/spatial-mapping.

- ↑ 4.00 4.01 4.02 4.03 4.04 4.05 4.06 4.07 4.08 4.09 4.10 4.11 "Spatial mapping and 3D reconstruction in augmented reality". Educative. https://www.educative.io/answers/spatial-mapping-and-3d-reconstruction-in-augmented-reality.

- ↑ 5.0 5.1 5.2 5.3 5.4 5.5 "Spatial Mapping concepts". Unity. https://docs.unity3d.com/2019.1/Documentation/Manual/SpatialMapping.html.

- ↑ 6.0 6.1 6.2 6.3 6.4 "Map your space with HoloLens". Microsoft. https://learn.microsoft.com/en-us/hololens/hololens-spaces.

- ↑ "Spatial Mapping (Augmented Reality Glossary)". AR.rocks. 2025-08-20. https://www.ar.rocks/glossary/spatial-mapping.

- ↑ 8.0 8.1 8.2 8.3 8.4 8.5 "Understanding Spatial Computing: How It's Changing Technology for the Better". ArborXR. https://arborxr.com/blog/understanding-spatial-computing-how-its-changing-technology-for-the-better.

- ↑ 9.0 9.1 9.2 "Immersive Technologies: Explaining AR, VR, XR, MR, and Spatial Computing". Ocavu. https://www.ocavu.com/blog/immersive-technologies-explaining-ar-vr-xr-mr-and-spatial-computing.

- ↑ 10.00 10.01 10.02 10.03 10.04 10.05 10.06 10.07 10.08 10.09 10.10 10.11 10.12 10.13 10.14 10.15 "Spatial mapping - Mixed Reality". Microsoft Learn. 2023-02-01. https://learn.microsoft.com/en-us/windows/mixed-reality/design/spatial-mapping.

- ↑ 11.0 11.1 11.2 11.3 11.4 11.5 "How depth sensors revolutionize virtual and mixed reality". HTC Vive. https://blog.vive.com/us/how-depth-sensors-revolutionize-virtual-and-mixed-reality/.

- ↑ 12.00 12.01 12.02 12.03 12.04 12.05 12.06 12.07 12.08 12.09 12.10 12.11 12.12 12.13 12.14 12.15 12.16 "Share spatial data on Meta Quest". Meta. https://www.meta.com/help/quest/625635239532590/.

- ↑ 13.0 13.1 13.2 13.3 "Spatial Computing for Immersive Experiences". Meegle. https://www.meegle.com/en_us/topics/spatial-computing/spatial-computing-for-immersive-experiences.

- ↑ "Apple Vision Pro vs. Samsung Galaxy XR: A new mixed-reality showdown". The Washington Post. https://www.washingtonpost.com/technology/2025/10/22/galaxy-xr-vision-pro-m5-hands-on/.

- ↑ 15.0 15.1 15.2 15.3 15.4 15.5 15.6 "Simultaneous localization and mapping". Wikipedia. 2025-10-27. https://en.wikipedia.org/wiki/Simultaneous_localization_and_mapping.

- ↑ 16.0 16.1 16.2 16.3 16.4 16.5 "Research on SLAM algorithm based on improved ORB-SLAM3 in dynamic environment". AIP Conference Proceedings. https://pubs.aip.org/aip/acp/article-pdf/doi/10.1063/5.0173865/18212106/020004_1_5.0173865.pdf.

- ↑ 17.0 17.1 17.2 17.3 17.4 "Simultaneous Localization and Mapping: A Survey of Current Trends in Autonomous Driving". IEEE Transactions on Intelligent Vehicles. https://pages.cs.wisc.edu/~jphanna/teaching/25spring_cs639/resources/SLAM-past-present-future.pdf.

- ↑ 18.0 18.1 18.2 18.3 18.4 "Basics of AR: SLAM – Simultaneous Localization and Mapping". Andreas Jakl. 2018-08-14. https://www.andreasjakl.com/basics-of-ar-slam-simultaneous-localization-and-mapping/.

- ↑ "Spatial Anchors Overview". Meta for Developers. 2024-05-15. https://developers.meta.com/horizon/documentation/unity/unity-spatial-anchors-overview/.

- ↑ 20.0 20.1 20.2 20.3 20.4 "ARKit Scene Reconstruction". Apple Developer Documentation. 2020. https://developer.apple.com/documentation/arkit/arkit_scene_reconstruction.

- ↑ 21.0 21.1 "Augmented Reality Mapping Systems: A Comprehensive Guide". Proven Reality. https://provenreality.com/augmented-reality-mapping-systems/.

- ↑ "Augmented Reality Technology: An Overview". R2U Blog. 2020-09. https://r2u.io/en/augmented-reality-technology-an-overview/.

- ↑ 23.0 23.1 23.2 23.3 23.4 23.5 23.6 23.7 "Spatial mapping in Unity - Mixed Reality". Microsoft. https://learn.microsoft.com/en-us/windows/mixed-reality/develop/unity/spatial-mapping-in-unity.

- ↑ 24.0 24.1 "HoloLens - What is Spatial Mapping?". Microsoft. https://www.youtube.com/watch?v=zff2aQ1RaVo.

- ↑ 25.0 25.1 25.2 25.3 25.4 25.5 "SLAM - Simultaneous localization and mapping". SBG Systems. https://www.sbg-systems.com/glossary/slam-simultaneous-localization-and-mapping/.

- ↑ 26.0 26.1 26.2 26.3 26.4 "What sensors (e.g., accelerometer, gyroscope) are essential in AR devices?". Milvus. https://milvus.io/ai-quick-reference/what-sensors-eg-accelerometer-gyroscope-are-essential-in-ar-devices.

- ↑ 27.0 27.1 27.2 27.3 27.4 27.5 27.6 "What Is SLAM (Simultaneous Localization and Mapping)?". MathWorks. https://www.mathworks.com/discovery/slam.html.

- ↑ 28.0 28.1 28.2 "Google ARCore Depth API Now Available". Road to VR. 2020-06-25. https://www.roadtovr.com/google-depth-api-arcore-augmented-reality/.

- ↑ 29.0 29.1 29.2 "Sensors for AR/VR". Pressbooks. https://pressbooks.pub/augmentedrealitymarketing/chapter/sensors-for-arvr/.

- ↑ "What Technology Is Used For AR Development?". VROwl. https://vrowl.io/blog/what-technology-is-used-for-ar-development/.

- ↑ 31.0 31.1 31.2 "How do robots use SLAM (Simultaneous Localization and Mapping) algorithms for navigation?". Milvus. https://milvus.io/ai-quick-reference/how-do-robots-use-slam-simultaneous-localization-and-mapping-algorithms-for-navigation.

- ↑ "Spatial Mapping: Empowering the Future of AR". Adeia. 2022-03-02. https://adeia.com/blog/spatial-mapping-empowering-the-future-of-ar.

- ↑ "Past, Present, and Future of Simultaneous Localization and Mapping: Toward the Robust-Perception Age". IEEE Transactions on Robotics. https://rpg.ifi.uzh.ch/docs/TRO16_cadena.pdf.

- ↑ "ORB-SLAM Documentation". University of Washington. https://pages.cs.wisc.edu/~jphanna/teaching/25spring_cs639/.

- ↑ 35.0 35.1 35.2 "From Code to Context: How Spatial AI is Powering the Next Leap in Manufacturing". Robotics Tomorrow. https://www.roboticstomorrow.com/story/2025/10/from-code-to-context-how-spatial-ai-is-powering-the-next-leap-in-manufacturing/25666/.

- ↑ "Spatial Anchors Overview". Meta. https://developers.meta.com/horizon/documentation/unity/unity-spatial-anchors-overview/.

- ↑ "Projection Mapping: Powering Immersive Visuals with Precision and Flexibility". Scalable Display. https://www.scalabledisplay.com/projection-mapping-powering-immersive-visuals/.

- ↑ "What is 3D Projection Mapping and How Does it Work?". ScaleUp Spaces. https://scaleupspaces.com/blogs/what-is-3d-projection-mapping-and-how-does-it-work.

- ↑ "Projection Mapping: what it is and the simplest way to do it!". HeavyM. https://www.heavym.net/what-projection-mapping-is-and-how-to-do-it/.

- ↑ "The Impact of Video Games on Self-Reported Spatial Abilities: A Cross-Sectional Study". Cureus. https://pmc.ncbi.nlm.nih.gov/articles/PMC11674921/.

- ↑ "Enriching the Action Video Game Experience: The Effects of Gaming on the Spatial Distribution of Attention". Journal of Experimental Psychology: Human Perception and Performance. https://pmc.ncbi.nlm.nih.gov/articles/PMC2896828/.

- ↑ "Ford Virtual Reality Training". Ford Motor Company. https://www.ford.com/technology/virtual-reality/.

- ↑ "Boeing Augmented Reality". Boeing. https://www.boeing.com/features/innovation-quarterly/aug2018/feature-technical-augmented-reality.page.

- ↑ 44.0 44.1 "What Is Spatial Mapping and How Is It Used?". eSpatial. https://www.espatial.com/blog/spatial-mapping.

- ↑ "GIS Applications in Different Industries". Milsoft. https://www.milsoft.com/newsroom/gis-applications-different-industries/.

- ↑ "Which Industries Should Consider Adding More Geospatial Technology to Their Workflows?". T-Kartor. https://www.t-kartor.com/blog/which-industries-should-consider-adding-more-geospatial-technology-to-their-workflows.

- ↑ 47.0 47.1 47.2 "Spatial Computing in Personalized Healthcare". Meegle. https://www.meegle.com/en_us/topics/spatial-computing/spatial-computing-in-personalized-healthcare.

- ↑ 48.0 48.1 "AR/VR's Potential to Revolutionize Health Care". ITIF. https://itif.org/publications/2025/06/02/arvrs-potential-in-health-care/.

- ↑ "IKEA Kreativ". IKEA. https://www.ikea.com/us/en/customer-service/mobile-apps/say-hej-to-ikea-kreativ-pub10a5f520.

- ↑ "How retailers are using augmented reality". Digital Commerce 360. https://www.digitalcommerce360.com/2022/05/18/how-retailers-are-using-augmented-reality/.

- ↑ 51.0 51.1 51.2 "What is Geospatial Mapping and How does it Work?". Matrack Inc.. https://matrackinc.com/geospatial-mapping/.

- ↑ "What is Geospatial Mapping and How Does It Work?". Spyrosoft. https://spyro-soft.com/blog/geospatial/what-is-geospatial-mapping-and-how-does-it-work.

- ↑ "The Past, Present and Future of Geospatial Mapping". FARO. https://www.faro.com/en/Resource-Library/Article/Past-Present-and-Future-of-Geospatial-Mapping.

- ↑ "10 Key Industries Using Geospatial Applications". SurveyTransfer. https://surveytransfer.net/geospatial-applications/.

- ↑ 55.0 55.1 "AlphaEarth Foundations helps map our planet in unprecedented detail". Google DeepMind. https://deepmind.google/discover/blog/alphaearth-foundations-helps-map-our-planet-in-unprecedented-detail/.

- ↑ "Large Geospatial Model". Niantic Labs. https://nianticlabs.com/news/largegeospatialmodel.

- ↑ 57.0 57.1 57.2 "Get started building apps for visionOS". Apple. https://developer.apple.com/visionos/get-started/.

- ↑ 58.00 58.01 58.02 58.03 58.04 58.05 58.06 58.07 58.08 58.09 "Magic Leap 2 - Spatial Mapping". Magic Leap. https://www.magicleap.com/legal/spatial-mapping-ml2.

- ↑ 59.0 59.1 "v66: Multi-Room Support, Spatial Anchors API Improvements & More for Quest Developers". Meta. https://developers.meta.com/horizon/blog/v66-multi-room-support-spatial-anchors-api-improvements-quest-developers/.

- ↑ "Meta Spatial SDK". Meta. https://developers.meta.com/horizon/develop/spatial-sdk.

- ↑ 61.0 61.1 61.2 "Spaces". Magic Leap. https://developer-docs.magicleap.cloud/docs/guides/features/spaces/.

- ↑ "Evaluation of Microsoft HoloLens 2 as a Tool for Indoor Spatial Mapping". Springer. https://link.springer.com/article/10.1007/s41064-021-00165-8.

- ↑ 63.0 63.1 63.2 63.3 "ARCore Overview". Google Developers. https://developers.google.com/ar.

- ↑ 65.0 65.1 "An Introduction to the Problem of Mapping in Dynamic Environments". ResearchGate. https://www.researchgate.net/publication/221787123_An_Introduction_to_the_Problem_of_Mapping_in_Dynamic_Environments.

- ↑ "Real-time World Sensing". Magic Leap. https://developer-docs.magicleap.cloud/docs/guides/features/spatial-mapping/.

- ↑ "The modifiable areal unit problem in ecological community data". PLOS ONE. https://pmc.ncbi.nlm.nih.gov/articles/PMC7254930/.

- ↑ "The Challenges of Using Maps in Policy-Making". Medium. https://zenn-wong.medium.com/the-challenges-of-using-maps-in-policy-making-510e3fcb8eb3.

- ↑ "What is SLAM? A Beginner to Expert Guide". Kodifly. https://kodifly.com/what-is-slam-a-beginner-to-expert-guide.

- ↑ 70.0 70.1 "What is Spatial Computing? A Survey on the Foundations and State-of-the-Art". arXiv. https://arxiv.org/html/2508.20477v1.

- ↑ "NeRF: Neural Radiance Fields". UC Berkeley. https://www.matthewtancik.com/nerf.

- ↑ "3D Gaussian Splatting for Real-Time Radiance Field Rendering". INRIA. https://repo-sam.inria.fr/fungraph/3d-gaussian-splatting/.

- ↑ 73.0 73.1 73.2 73.3 73.4 "Spatial Mapping: Empowering the Future of AR". Adeia. https://adeia.com/blog/spatial-mapping-empowering-the-future-of-ar.

- ↑ "Spatial Computing Market". MarketsandMarkets. https://www.marketsandmarkets.com/Market-Reports/spatial-computing-market-234982000.html.

- ↑ "Seeing is Believing: VR and AR Enterprise Adoption". PwC. https://www.pwc.com/us/en/tech-effect/emerging-tech/virtual-reality-study.html.